Overcoming distracting text in prompts with System 2 thinking

It always comes back to Daniel Kahneman

If you follow me on LinkedIn, then you probably saw my recent post about how irrelevant information in prompts can lead to subpar performance. Here’s the post if you missed it.

Even minor pieces of irrelevant text in a prompt can cause an LLM to direct its attention in the wrong area.

We’ll take a quick look at an example from the paper, “System 2 Attention (is something you might need too)”.

The mayor of San Jose is Sam Liccardo. He was born in Saratoga, CA. However, if you include information about Sunnyvale, CA, in the prompt (shown on the right side below) the model may incorrectly answer that Sam was born there.

While the example above uses older models, this issue still happens today with the latest frontier models! See below for the results of a small batch test I ran in PromptHub, where both Claude 3.5 Sonnet and GPT-4o struggle.

What’s particularly concerning is how confident GPT-4o sounds.

Simply including the information about Sunnyvale in the prompt increased the token probability of “Sunnyvale” appearing in the output.

What does Daniel Kahneman have to do with this? Let’s find out.

Using System 2 Attention (S2A) prompting to remove irrelevant information

One way to make sure your prompts contain only relevant information is to have another prompt sanitize them first! That is exactly what System 2 Attention prompting does.

Here’s a prompt template for S2A prompting

System 2 Attention prompting gets its name from Daniel Kahneman’s distinction between System 1 thinking, which is fast and reactive, and System 2 thinking, which is slow and deliberate.

System 2 Attention prompting doesn’t jump into solving the problem at hand right away. It first takes a step to audit the instructions and context, ensuring only relevant information is used to generate an output.
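As a rough sketch of that two-step flow, the snippet below first asks the model to rewrite the prompt without irrelevant material and then answers from the rewritten version only. The rewrite instruction is my own paraphrase for illustration, not the exact template from the paper, and `call_llm` is a hypothetical function you would replace with your own model client.

```python
from typing import Callable

# Hypothetical rewrite instruction, paraphrased for illustration.
# It is NOT the exact template from the S2A paper.
S2A_REWRITE_INSTRUCTION = (
    "Rewrite the text below so that it keeps only the information needed "
    "to answer the question it contains. Drop opinions and unrelated facts. "
    "End with the question itself.\n\nText:\n{prompt}"
)


def s2a_answer(prompt: str, call_llm: Callable[[str], str]) -> str:
    """Two-step S2A-style flow: sanitize the prompt, then answer the cleaned version.

    `call_llm` is an assumed helper that takes a prompt string and returns
    the model's text response.
    """
    # Step 1: ask the model to regenerate the context without irrelevant material.
    cleaned_prompt = call_llm(S2A_REWRITE_INSTRUCTION.format(prompt=prompt))
    # Step 2: answer from the cleaned prompt only; the original text is not re-sent.
    return call_llm(cleaned_prompt)
```

The key design choice is that the second call never sees the original prompt, so a distracting sentence that was dropped in step 1 cannot pull the answer off course in step 2.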

What other prompt engineering methods can be used to limit the effects of distracting text?

The second paper we’ll take a look at, “Large Language Models Can Be Easily Distracted by Irrelevant Context”, tested a variety of prompt engineering methods on prompts that had irrelevant text injected into them.

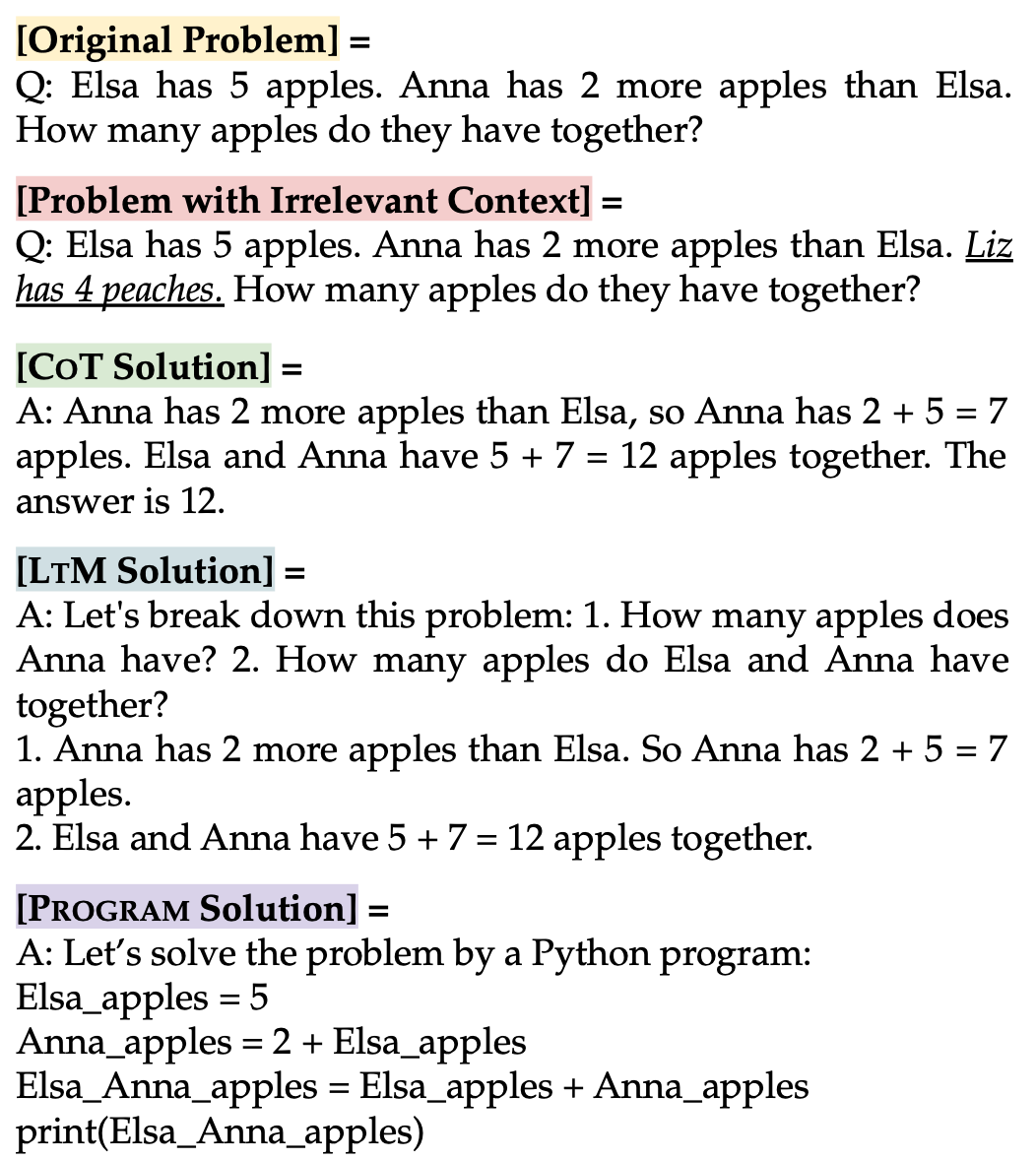

The researchers would take normal prompts and edit them to include an irrelevant sentence somewhere. There were a few different types of irrelevant sentences that were injected.
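To make the setup concrete, here is a minimal sketch of how you might inject a distractor sentence into your own test prompts. The function name `inject_distractor` is hypothetical, and placing the sentence right before the final question is an assumption made for this example, not a description of the researchers' exact procedure.

```python
import re


def inject_distractor(problem: str, distractor: str) -> str:
    """Insert an irrelevant sentence just before the last sentence.

    Assumes the last sentence of `problem` is the question being asked.
    """
    # Split on whitespace that follows sentence-ending punctuation.
    sentences = re.split(r"(?<=[.!?])\s+", problem.strip())
    # Keep the question at the end and place the distractor right before it.
    return " ".join(sentences[:-1] + [distractor.strip(), sentences[-1]])
```

For example, calling it with "Ava has 3 apples. She buys 2 more. How many apples does Ava have?" and the distractor "Her brother is 7 years old." puts the sentence about her brother directly before the question.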

Multiple prompt engineering methods were tested across this dataset of prompts with irrelevant sentences injected.

Specifically, they tested:

Few-shot prompting. They did this in a cool way: they used examples that had irrelevant information in them, mimicking the types of inputs that were to be processed.

Instructed prompting (directly instructing the model to “feel free to ignore irrelevant information in the problem description”)

Chain of Thought prompting

Here are a few examples of how the original prompts were transformed to follow the prompt engineering methods listed.
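If you want to try these methods on your own prompts, one hypothetical way to build each variant in code is sketched below. Only the "ignore irrelevant information" sentence comes from the paper as quoted above; the `Q:`/`A:` layout, the function names, and the zero-shot "Let's think step by step" trigger used for Chain of Thought are assumptions for illustration and may differ from the paper's actual prompts.

```python
from typing import Sequence, Tuple

# Instruction quoted in this post; everything else here is an illustrative assumption.
IGNORE_INSTRUCTION = "Feel free to ignore irrelevant information in the problem description."


def instructed_prompt(problem: str) -> str:
    """Instructed prompting: prepend the explicit 'ignore irrelevant info' instruction."""
    return f"{IGNORE_INSTRUCTION}\n\nQ: {problem}\nA:"


def chain_of_thought_prompt(problem: str) -> str:
    """Chain of Thought: a generic zero-shot trigger phrase, used here as a stand-in."""
    return f"Q: {problem}\nA: Let's think step by step."


def few_shot_prompt(problem: str, examples: Sequence[Tuple[str, str]]) -> str:
    """Few-shot prompting: prepend solved (question, answer) examples.

    To mirror the idea described above, the example questions should
    themselves contain irrelevant sentences.
    """
    shots = "\n\n".join(f"Q: {q}\nA: {a}" for q, a in examples)
    return f"{shots}\n\nQ: {problem}\nA:"
```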

Experiment results

Here are the main takeaways:

Performance dropped across all models when irrelevant information was added (no surprise there).

Fewer than 30% of problems were solved consistently with the irrelevant sentences present.

Least-to-Most prompting and Self-Consistency prompting were the most effective at handling irrelevant context. For info on how to implement these methods, check out our guide below.

Creating prompt chains to implement self-consistency prompting and least-to-most prompting


Few-shot prompting with irrelevant context improved model robustness. This demonstrates a key insight about few-shot prompting: show, don’t tell.

Using distractor sentences in the few-shot examples didn’t degrade performance on datasets that didn’t have irrelevant sentences.
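Self-Consistency prompting, mentioned in the takeaways above, boils down to sampling several answers and keeping the most common one. The sketch below is a simplified version under two assumptions: `sample_llm` is a hypothetical function that returns a fresh, non-deterministic response on each call, and each response has already been reduced to just the final answer.

```python
from collections import Counter
from typing import Callable


def self_consistent_answer(
    prompt: str,
    sample_llm: Callable[[str], str],
    n_samples: int = 5,
) -> str:
    """Sample several answers for the same prompt and return the majority answer.

    `sample_llm` is an assumed helper that returns only the final answer text
    and varies between calls (for example, by using a temperature above 0).
    """
    answers = [sample_llm(prompt).strip() for _ in range(n_samples)]
    # most_common(1) returns the answer with the highest count;
    # on a tie, the answer that was seen first wins.
    return Counter(answers).most_common(1)[0][0]
```

The intuition is that a distracting sentence might derail some samples, but it is less likely to derail most of them in the same way.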

Conclusion

Using LLMs in production comes with a variety of challenges, one of them being that you never know what users might pass along in the prompt. One way to prepare for this is to test your prompts at scale using some form of batch testing. Another step, which is not mutually exclusive with the first, is to use a prompt engineering method like S2A (TEMPLATE LINK) to sanitize the prompt before passing it along for processing.
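A batch test can be as simple as running a list of prompts through your model and counting how many outputs contain the expected answer. The sketch below assumes a hypothetical `call_llm` function and uses a crude case-insensitive substring check as the scoring rule, which you would likely want to replace with something stricter for real evaluations.

```python
from typing import Callable, Sequence, Tuple


def batch_accuracy(
    cases: Sequence[Tuple[str, str]],
    call_llm: Callable[[str], str],
) -> float:
    """Return the fraction of (prompt, expected_answer) cases the model gets right.

    A case counts as correct when the expected answer appears anywhere in the
    output (case-insensitive). This is a deliberately simple scoring assumption.
    """
    if not cases:
        return 0.0
    hits = sum(
        expected.lower() in call_llm(prompt).lower()
        for prompt, expected in cases
    )
    return hits / len(cases)
```

Running the same cases with and without distracting text, or with and without a sanitizing step in front, gives you a quick read on how much irrelevant context is hurting your prompts.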

Having safeguards in place is a best practice for anyone using LLMs in production.

This is very helpful. Irrelevant context is one of the biggest issues that we used to face.