I love it when we get prompt engineering guidance from the model providers. Last week we went over OpenAI’s prompt engineering guidance for GPT-4.1 and now we’re turning to Google.

They recently (late Feb) released a 68-page Prompt Engineering white paper. I’ve read through it multiple times and put out some content about it on our YouTube channel earlier this week.

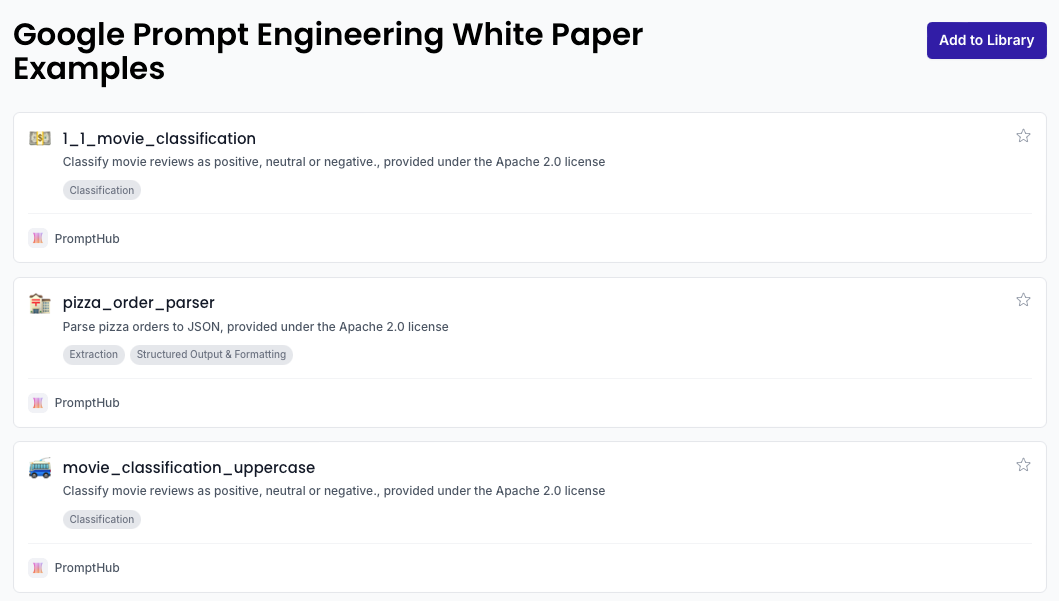

All prompt templates available in PromptHub

Before jumping in, all the prompt template examples from the white paper are available for free in PromptHub here!

Top Prompt Engineering Best Practices

Below are the best practices I pulled out from the white paper. For more information about model parameters, prompt engineering methods, and other info from the white paper, check out our full guide here: Google’s Prompt Engineering Best Practices.

Provide high-quality examples

One-shot or few-shot prompting teaches the model exactly what format, style, and scope you expect. Adding edge cases can boost performance, but you’ll need to watch for overfitting!
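Here's a minimal sketch of what assembling a few-shot prompt can look like. The sentiment task, the example reviews, and the `build_few_shot_prompt` helper are my own illustrations, not taken from the white paper.

```python
def build_few_shot_prompt(instruction, examples, new_input):
    """Join an instruction, worked examples, and the new input into one prompt."""
    lines = [instruction, ""]
    for review, sentiment in examples:
        lines += [f"Review: {review}", f"Sentiment: {sentiment}", ""]
    # End on the open label so the model completes it in the same format.
    lines += [f"Review: {new_input}", "Sentiment:"]
    return "\n".join(lines)


# Illustrative examples (assumed), including one edge case with mixed feelings.
EXAMPLES = [
    ("Arrived on time and works great.", "POSITIVE"),
    ("Broke after two days.", "NEGATIVE"),
    ("Great screen, terrible battery.", "NEUTRAL"),
]

few_shot_prompt = build_few_shot_prompt(
    "Classify each review as POSITIVE, NEGATIVE, or NEUTRAL.",
    EXAMPLES,
    "Does the job, nothing special.",
)
print(few_shot_prompt)
```

Keeping the examples in a list makes it easy to add or drop an edge case and see whether outputs get better or start overfitting.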

Start simple

Nothing beats concise, clear, verb-driven prompts. Reduce ambiguity → get better outputs.

Be specific about the output format

Spell out structure, length, and style (“Return a JSON list of action items with task, owner, and dueDate fields”).
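Once you've asked for a specific format, it's worth checking that you actually got it. Below is a rough sketch that validates a reply against the three fields from the example above. The `parse_action_items` helper and the sample reply are assumptions for illustration.

```python
import json

# Field names taken from the example instruction above.
REQUIRED_FIELDS = {"task", "owner", "dueDate"}


def parse_action_items(raw_reply):
    """Parse a model reply and check that it is a list of complete action items."""
    items = json.loads(raw_reply)  # raises json.JSONDecodeError on invalid JSON
    if not isinstance(items, list):
        raise ValueError("expected a JSON list of action items")
    for index, item in enumerate(items):
        if not isinstance(item, dict):
            raise ValueError(f"item {index} is not a JSON object")
        missing = REQUIRED_FIELDS - set(item)
        if missing:
            raise ValueError(f"item {index} is missing {sorted(missing)}")
    return items


# Hypothetical model reply, hard-coded so the sketch runs on its own.
sample_reply = '[{"task": "Send recap", "owner": "Dana", "dueDate": "2025-05-01"}]'
action_items = parse_action_items(sample_reply)
print(action_items[0]["owner"])
```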

Use positive instructions over constraints

“Do this” > “Don’t do that.” Reserve hard constraints for safety or strict formats.

Use variables

Parameterize dynamic values (names, dates, thresholds) with placeholders for reusable prompts.
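As a quick sketch, Python's built-in `string.Template` is one way to do this outside of a prompt management tool. The template text and variable names (`customer`, `start_date`, `max_age_days`) are made up for the example.

```python
from string import Template

# Placeholders start with "$"; the wording here is an illustrative assumption.
TICKET_SUMMARY_PROMPT = Template(
    "Summarize the open support tickets for $customer since $start_date. "
    "Flag any ticket that has been open longer than $max_age_days days."
)


def render_prompt(template, **values):
    """Fill in every placeholder; substitute() raises KeyError if one is missing."""
    return template.substitute(**values)


rendered = render_prompt(
    TICKET_SUMMARY_PROMPT,
    customer="Acme Corp",
    start_date="2025-04-01",
    max_age_days=14,
)
print(rendered)
```

Using `substitute()` rather than `safe_substitute()` means a forgotten variable fails loudly instead of sending a prompt with a stray `$customer` in it.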

Experiment with input formats & styles

Try tables, bullet lists, or JSON schemas—different formats can focus the model’s attention.

Continually test

Re-run your prompts whenever you switch models or new versions drop. As we saw with GPT-4.1, new models may handle prompts differently!
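A tiny regression harness makes this kind of re-testing cheap. The sketch below is only an assumption about how you might structure it: `old_model` and `new_model` are stand-in functions, not real API clients, and the substring check is the simplest possible pass/fail rule.

```python
def run_regression(models, cases):
    """Run every case against every model and return the failures."""
    failures = []
    for model_name, call_model in models.items():
        for prompt, expected_substring in cases:
            output = call_model(prompt)
            if expected_substring.lower() not in output.lower():
                failures.append((model_name, prompt, output))
    return failures


# Stand-ins for real model calls (hypothetical); swap in your own client functions.
def old_model(prompt):
    return "POSITIVE" if "great" in prompt else "NEGATIVE"


def new_model(prompt):
    return "The sentiment is positive." if "great" in prompt else "I can't tell."


CASES = [
    ("Classify: 'Works great.'", "positive"),
    ("Classify: 'Broke after two days.'", "negative"),
]

failures = run_regression({"old-model": old_model, "new-model": new_model}, CASES)
for model_name, prompt, output in failures:
    print(f"{model_name} failed on {prompt!r}: {output!r}")
```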

Collaborate with your team

Share, review, and fork prompts together (cough, cough, in PromptHub) to catch edge cases faster.

Chain-of-Thought tips

Keep “Let’s think step by step…” simple and include a one-shot demo if possible.
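Here's a rough sketch of a chain-of-thought prompt with a single worked demo in front of the real question. The demo problem and the `build_cot_prompt` helper are my own, not examples from the white paper.

```python
# One worked example (assumed) showing the reasoning style we want back.
ONE_SHOT_DEMO = (
    "Q: A notebook costs 3 dollars and a pen costs 2 dollars. "
    "How much do 4 notebooks and 5 pens cost?\n"
    "A: Let's think step by step.\n"
    "1. 4 notebooks cost 4 * 3 = 12 dollars.\n"
    "2. 5 pens cost 5 * 2 = 10 dollars.\n"
    "3. The total is 12 + 10 = 22 dollars.\n"
    "The answer is 22."
)


def build_cot_prompt(question):
    """Prefix the demo, then end on the same trigger phrase for the new question."""
    return f"{ONE_SHOT_DEMO}\n\nQ: {question}\nA: Let's think step by step."


cot_prompt = build_cot_prompt(
    "A train ticket costs 7 dollars. How much do 6 tickets cost?"
)
print(cot_prompt)
```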

Document every iteration

Log versions, parameters, and results in PromptHub (or a shared sheet) for easy rollback and auditing.
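If you go the do-it-yourself route, a sketch like this is enough to get started. It writes one JSON line per iteration; the field names, the `log_iteration` helper, and the sample entries are all assumptions for illustration.

```python
import io
import json
from datetime import datetime, timezone


def log_iteration(stream, version, model, temperature, prompt, result_note):
    """Append one prompt iteration to a text stream as a single JSON line."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "version": version,
        "model": model,
        "temperature": temperature,
        "prompt": prompt,
        "result_note": result_note,
    }
    stream.write(json.dumps(record) + "\n")
    return record


# In-memory stream so the sketch runs anywhere; use open("prompt_log.jsonl", "a") to persist.
log = io.StringIO()
log_iteration(log, "v1", "example-model", 0.2, "Summarize the notes.", "Too long")
log_iteration(log, "v2", "example-model", 0.2, "Summarize the notes in 3 bullets.", "Good")

entries = [json.loads(line) for line in log.getvalue().splitlines()]
print(entries[-1]["version"], entries[-1]["result_note"])
```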

Wrapping up

The white paper is a great resource for beginners and even goes over some complex topics related to LLMs and prompt engineering.

If you’ve been prompting for a while, it will probably feel a little basic. Either way, it's great to see Google putting something like this out, as they continue to kill it on the model front!