GPT-4.1 Is Here: Fast, Cheap, and Hyper-Literal

Plus, some prompting best practices

OpenAI just dropped the GPT-4.1 family—three API-only models with a 1 million-token window and price cuts up to 85% versus GPT-4o. Here’s what you need to know.

Meet the Family

GPT-4.1 comes in three sizes Nano, Mini, and Base. This gives you the freedom to choose base on cost versus capability.

Nano: $0.004 / 1K tokens (~75% cheaper than Mini), ideal for simple classification.

Mini: Often matches—or even beats—Base on core benchmarks at lower latency and cost.

Base: Top tier for multimodal, complex reasoning, coding at $0.15 / 1K tokens.

GPT-4.1 Model Performance

Compared to GPT-4o, GPT-4.1 performs better across almost all benchmarks. All three variants all scored perfectly on OpenAI’s needle in a haystack experiment.

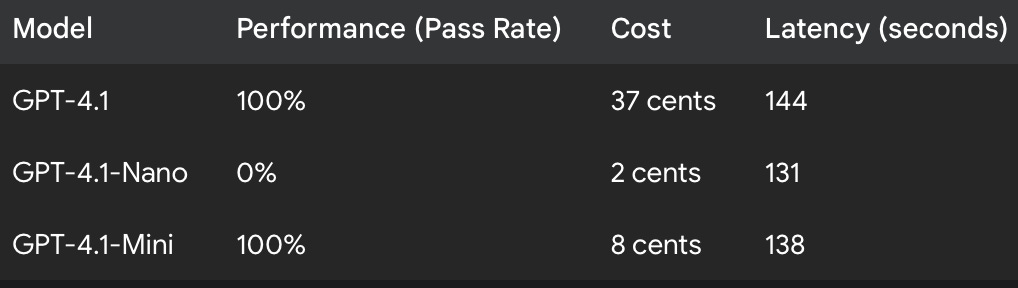

Needle in a haystack

BUT - I ran my own needle in a haystack experiments, and even with a really small number of runs (10) I was able to trigger some failures for GPT-4.1 Nano.

For reference, my needle in a haystack experiment is a prompt that has three Great Gatsby’s embedded and one of the three versions has a needle (“Dan surfs in Portugal”) inside.

The biggest thing that stood out was that latency was very similar across all three models—even though Nano was pitched as much faster than Mini, and Mini faster than Base. And none of them were exceptionally fast compared to their peers: Google’s Gemini 2.0 Pro processes the needle-in-a-haystack prompt in 15 seconds, and Gemini 2.0 Flash does it in just 6 seconds.

If you’re interested in running my needle in a haystack experiments, here is a video that will show you how to:

Other evals

The GPT-4.1 models were trained specifically to be good at:

Following instructions

Handling long context

Function calling

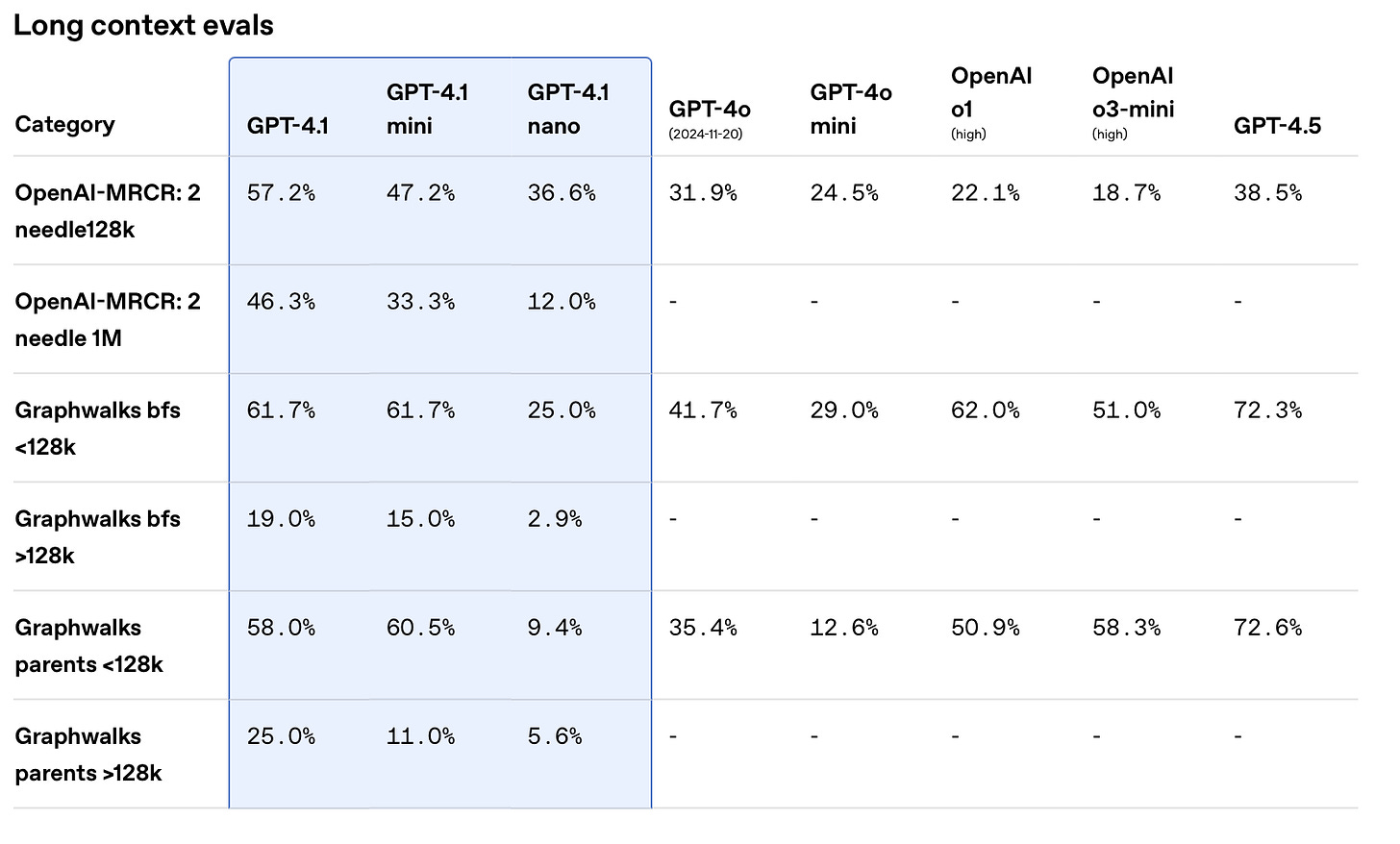

Here’s how they stacked up against other OpenAI models across these types of tasks:

GPT-4.1 outperforms 4o by a lot, and comes close to o3-mini and o1 in some benchmarks.

On average and compared to models like o1 and p3-mini, GPT-4.1 does better on long context evals than instruction evals. GPT-4.1 really shines here and you can tell they must’ve spent a lot of timing training on longer context sizes.

Same situation here where GPT-4.1 is essentially better or on par with o1 and o3-mini across all benchmarks.

Prompting engineering best practices for GPT-4.1

My favorite part about this model launch is that OpenAI also released a prompting guide for the 4.1 model family. I did a whole run down in my most recent video, but here are the highlights.

Best practices like few shot prompting still apply (unlike how reasoning models have their own set of best practices).

GPT-4.1 models follow instructions much more literally. This could be a double-edged sword. You’ll most likely need to update prompts that you used for GPT-4o.

These models are really good at using tools, but don’t forget to spend some time writing good tool names and descriptions. Give the models example of tool usage if your tools are very complicated.

When dealing with large contexts, put your instructions both before and after the provided context.

GPT-4.1 models are not reasoning models. If you want them to reason you may need to use some Chain of Thought prompting.

Wrapping up

If you’re interested in GPT 4.1, I’d recommend running the prompts you used for GPT-4o side-by-side against 4.1 to see how they stack up. This type of audit and experimentation is pretty important given how different these models behave.

Overall, I’ve been enjoying these new models and GPT-4.1-Mini seems to be the real winner here in terms of cost/performance ratio.