AI agents are here. Whether it's coding agents like Bolt.new or browser agents like OpenAI’s Operator, current LLMs are making agents possible.

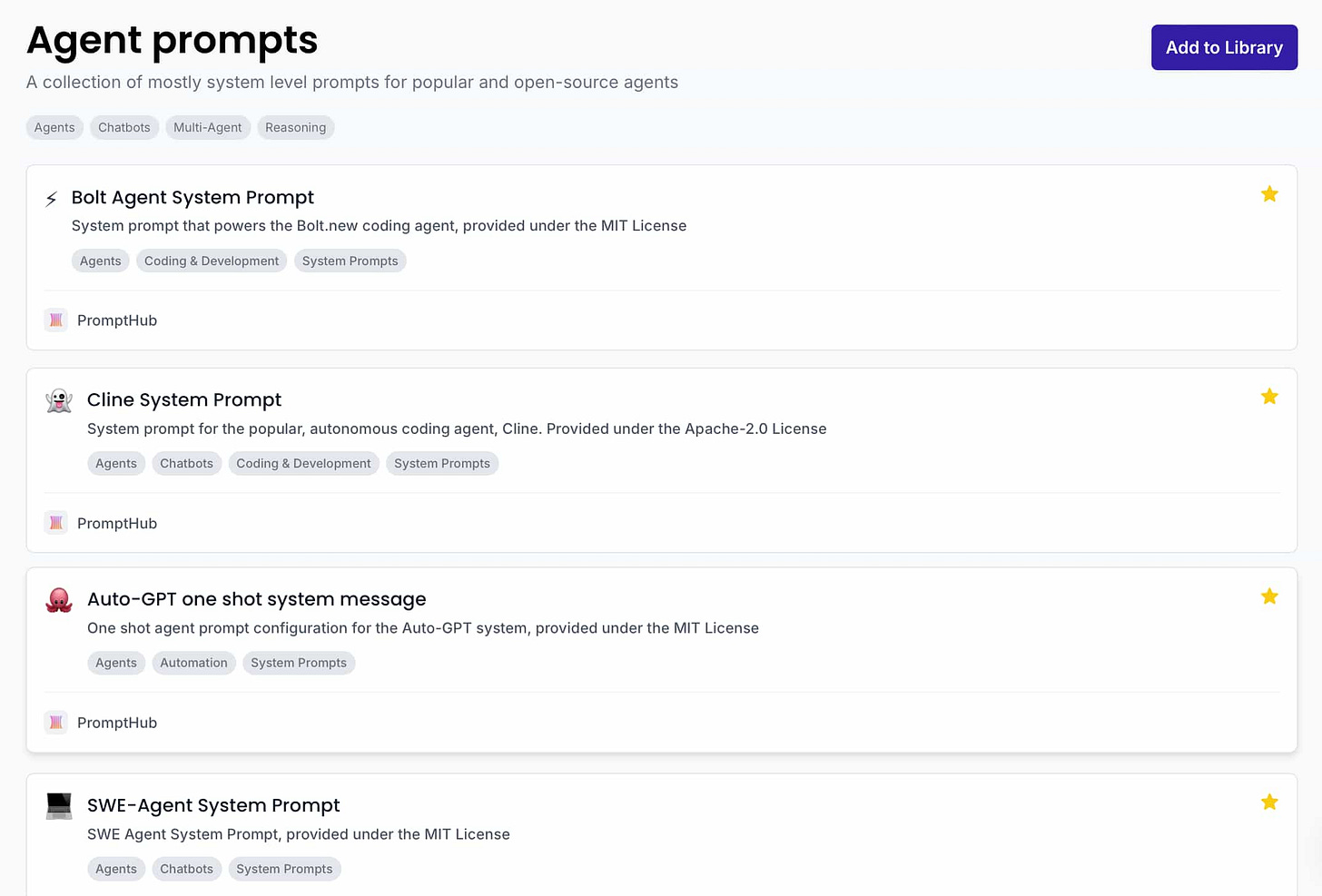

I recently analyzed and collected more than 20 prompts from popular open-source agents like Cline, Bolt, Auto-GPT, and more. In this post, we’ll quickly cover the core components of an agent, and then dive deep into the system instructions that power Cline. For more details and our analysis of Bolt’s system instructions, you can check out the full post on our blog here.

Core components of agents

Here are a few of the core components to think about when building agents:

Memory: LLMs are stateless; they don’t remember past interactions unless your application sends them again. Memory management methods include tracking message history and using prompt caching to reduce latency and cost.
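To make the message-history idea concrete, here is a minimal sketch in Python. The `Conversation` class, its `max_turns` cap, and the role/content message shape are all assumptions for illustration, not any particular provider's API.

```python
from dataclasses import dataclass, field


@dataclass
class Conversation:
    """Holds a fixed system prompt plus a rolling window of recent messages."""

    system_prompt: str
    max_turns: int = 20  # hypothetical cap; tune for your model's context window
    messages: list = field(default_factory=list)

    def add(self, role: str, content: str) -> None:
        """Record one message and drop the oldest ones past the cap."""
        self.messages.append({"role": role, "content": content})
        if len(self.messages) > self.max_turns:
            self.messages = self.messages[-self.max_turns:]

    def to_request(self) -> list:
        """Build the full message list to send on every call."""
        system = {"role": "system", "content": self.system_prompt}
        return [system, *self.messages]
```

Because the model is stateless, `to_request()` rebuilds the whole context on each call. Keeping the system prompt as an unchanging first message is also what tends to make prompt caching pay off, since providers that support it generally cache a stable prefix.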

Tools: Agents rely on tools to interact with external systems, like search engines or databases. Well-documented tools (name, description, etc.) improve usability, much like clear documentation benefits developers.
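As a rough illustration of what "well-documented" can mean in practice, the sketch below keeps each tool's name, description, and parameters in one place and renders them into prompt text. The `Tool` and `Param` classes and the output layout are hypothetical, not taken from any agent's codebase.

```python
from dataclasses import dataclass, field


@dataclass
class Param:
    name: str
    description: str
    required: bool = True


@dataclass
class Tool:
    name: str
    description: str
    params: list = field(default_factory=list)

    def render(self) -> str:
        """Render the tool as a documentation block for the system prompt."""
        lines = [f"## {self.name}", f"Description: {self.description}", "Parameters:"]
        for p in self.params:
            flag = "required" if p.required else "optional"
            lines.append(f"- {p.name} ({flag}): {p.description}")
        return "\n".join(lines)


# Example tool; the wording here is invented for illustration.
search = Tool(
    name="search_docs",
    description="Search the product documentation for a phrase.",
    params=[
        Param("query", "The phrase to search for."),
        Param("limit", "Maximum number of results.", required=False),
    ],
)
```

Calling `search.render()` produces a block you can paste under a tools heading in the system prompt, so the documentation the model sees always matches the definition in code.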

Planning: Effective agent planning is key. Reasoning models make planning easier with built-in chain-of-thought reasoning, but agents must also adapt and re-plan after failed attempts.
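One way to picture re-planning after a failure is a loop that feeds what went wrong back into the planner. This is a sketch under assumptions: `make_plan` and `run_step` are hypothetical callbacks you would back with a model call and a tool runner.

```python
from typing import Callable, List, Tuple


def run_with_replanning(
    goal: str,
    make_plan: Callable[[str, List[str]], List[str]],
    run_step: Callable[[str], Tuple[bool, str]],
    max_attempts: int = 3,
) -> Tuple[bool, List[str]]:
    """Try a plan; when a step fails, record why and ask for a new plan."""
    failures: List[str] = []
    for _ in range(max_attempts):
        plan = make_plan(goal, failures)  # the planner sees earlier failures
        for step in plan:
            ok, detail = run_step(step)
            if not ok:
                failures.append(f"{step}: {detail}")
                break  # abandon this plan and re-plan
        else:
            return True, failures  # every step succeeded
    return False, failures
```

Passing the failure log into `make_plan` is the important part: without it, the planner has no reason to produce a different plan on the next attempt.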

Cline system prompt

Cline is an open-source, VS Code-based coding agent you can access in your IDE. The system message is over 10,000 tokens (you can check out the full system message here).

Let’s dive into what makes the Cline system prompt great.

Well documented tools

You should spend a lot of time iterating on your tool names and descriptions and writing them out accurately. The better they are, the better chance the model has at using them correctly. Cline does a great job with detailed descriptions:

# Tools

## execute_command

**Description:**

Request to execute a CLI command on the system. Use this when you need to perform system operations or run specific commands to accomplish any step in the user's task. You must tailor your command to the user's system and provide a clear explanation of what the command does. For command chaining, use the appropriate chaining syntax for the user's shell. Prefer executing complex CLI commands over creating executable scripts, as they are more flexible and easier to run. Commands will be executed in the current working directory: `${cwd.toPosix()}`

**Parameters:**

- **command (required):**

The CLI command to execute. This should be valid for the current operating system. Ensure the command is properly formatted and does not contain any harmful instructions.

- **requires_approval (required):**

A boolean indicating whether this command requires explicit user approval before execution (in case the user has auto-approve mode enabled).

- Set to `true` for potentially impactful operations (e.g., installing/uninstalling packages, deleting/overwriting files, system configuration changes, network operations, or any commands that could have unintended side effects).

- Set to `false` for safe operations (e.g., reading files/directories, running development servers, building projects, and other non-destructive operations).

**Usage Example:**

```xml
<execute_command>
<command>Your command here</command>
<requires_approval>true or false</requires_approval>
</execute_command>
```

User confirmation

The Cline system prompt restricts the agent from using more than one tool per message and always makes it go back to the user for confirmation before going forward. These short check-ins are really helpful in terms of keeping the model on the correct path.

6. ALWAYS wait for user confirmation after each tool use before proceeding. Never assume the success of a tool use without explicit confirmation of the result from the user.
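On the application side, something has to read the tool call out of the model's message and decide whether to pause for the user. The sketch below is one possible way to do that in Python and is not Cline's actual implementation; `parse_execute_command` and `needs_user_prompt` are hypothetical helpers. It uses regular expressions rather than an XML parser because shell commands often contain characters such as `&` that are not valid in XML.

```python
import re
from typing import Optional


def _tag(name: str, text: str) -> Optional[str]:
    """Return the stripped contents of the first <name>...</name> pair."""
    match = re.search(rf"<{name}>(.*?)</{name}>", text, re.DOTALL)
    return match.group(1).strip() if match else None


def parse_execute_command(message: str) -> Optional[dict]:
    """Extract an execute_command call from a model message, if present."""
    block = _tag("execute_command", message)
    if block is None:
        return None
    command = _tag("command", block)
    approval = _tag("requires_approval", block)
    if command is None or approval is None:
        return None
    # Anything other than an explicit "false" is treated as needing approval.
    return {"command": command, "requires_approval": approval.lower() != "false"}


def needs_user_prompt(call: dict, auto_approve: bool) -> bool:
    """Ask the user unless auto-approve is on and the call is marked safe."""
    return (not auto_approve) or call["requires_approval"]
```

Treating a missing or malformed flag as "needs approval" is a deliberate choice here: when the model's output is ambiguous, the safer default is to check in with the user.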

Planning and taking action

I thought this one in particular was really cool. The system prompt lays out two modes for Cline: PLAN MODE and ACT MODE.

This explicit distinction helps the model understand its current context and how it should act.

## What is PLAN MODE?

- While you are usually in ACT MODE, the user may switch to PLAN MODE in order to have a back and forth with you to plan how to best accomplish the task.

- When starting in PLAN MODE, depending on the user's request, you may need to do some information gathering e.g. using read_file or search_files to get more context about the task. You may also ask the user clarifying questions to get a better understanding of the task.

- Once you've gained more context about the user's request, you should architect a detailed plan for how you will accomplish the task.

- Then you might ask the user if they are pleased with this plan, or if they would like to make any changes. Think of this as a brainstorming session where you can discuss the task and plan the best way to accomplish it.

- Finally once it seems like you've reached a good plan, ask the user to switch you back to ACT MODE to implement the solution.
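If you wanted to mirror this split in your own agent harness, a small sketch might look like the following. The `Mode` enum, the `tool_allowed` check, and the idea of limiting PLAN MODE to read-only tools are assumptions for illustration; the prompt itself only names `read_file` and `search_files` as examples of information gathering.

```python
from enum import Enum


class Mode(Enum):
    PLAN = "PLAN MODE"
    ACT = "ACT MODE"


# Assumption: only information-gathering tools are allowed while planning.
READ_ONLY_TOOLS = {"read_file", "search_files"}


def tool_allowed(mode: Mode, tool_name: str) -> bool:
    """In ACT MODE everything is allowed; in PLAN MODE only read-only tools."""
    return mode is Mode.ACT or tool_name in READ_ONLY_TOOLS


def mode_banner(mode: Mode) -> str:
    """A line to include in each request so the model knows its current mode."""
    return f"Current mode: {mode.value}"
```

Stating the mode explicitly on each turn gives the model the same kind of clear context the prompt's two-mode distinction is aiming for.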

Great use of delimiters

It’s a small thing, but an important one.

Even though the system prompt is very long, it is easy to scan because it is broken up nicely with delimiters. They use “====”, a line break, and an all-caps header.

====

OBJECTIVE
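A side benefit of consistent delimiters is that the prompt becomes easy to process in code. As a sketch, assuming sections are separated by a line containing only `====` and start with an all-caps header, the hypothetical `split_sections` helper below turns a prompt into a dictionary:

```python
import re


def split_sections(prompt: str) -> dict:
    """Split a prompt on '====' lines and key each chunk by its header."""
    sections = {}
    for chunk in re.split(r"^====[ \t]*$", prompt, flags=re.MULTILINE):
        chunk = chunk.strip()
        if not chunk:
            continue
        first_line, _, rest = chunk.partition("\n")
        if first_line.strip().isupper():
            sections[first_line.strip()] = rest.strip()
        else:
            # Text before the first delimiter has no all-caps header.
            sections["(preamble)"] = chunk
    return sections
```

This makes it simple to, say, measure how long each section is or swap one section out while testing a prompt change.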

Choosing the right tool

Vertical agents often have tools that are very similar, or that rely on similar data sources or functions. This makes it all the more important to have detailed descriptions for tools. Cline’s system message takes it a step further with instructions on how and when to choose the appropriate tool.

# Choosing the Appropriate Tool

- **Default to replace_in_file** for most changes. It's the safer, more precise option that minimizes potential issues.

- **Use write_to_file** when:

- Creating new files

- The changes are so extensive that using replace_in_file would be more complex or risky

- You need to completely reorganize or restructure a file

- The file is relatively small and the changes affect most of its content

- You're generating boilerplate or template files
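To see how explicit this guidance is, it helps to write it out as a decision rule. The sketch below is only a reading of the bullets above, not how Cline works: in Cline the model makes this choice from the prompt. The function name and both thresholds are hypothetical, since the prompt says "relatively small" and "most of its content" without giving numbers.

```python
SMALL_FILE_LINES = 50   # hypothetical cutoff for "relatively small"
MOSTLY_CHANGED = 0.6    # hypothetical cutoff for "most of its content"


def choose_edit_tool(
    file_exists: bool,
    file_lines: int = 0,
    changed_lines: int = 0,
    restructure: bool = False,
) -> str:
    """Pick between replace_in_file and write_to_file per the quoted guidance."""
    if not file_exists:
        return "write_to_file"  # creating a new file
    if restructure:
        return "write_to_file"  # complete reorganization
    changed_fraction = changed_lines / max(file_lines, 1)
    if file_lines <= SMALL_FILE_LINES and changed_fraction >= MOSTLY_CHANGED:
        return "write_to_file"  # small file, mostly rewritten
    return "replace_in_file"    # the default for targeted changes
```

If your own tool guidance is hard to express as a rule like this, that can be a sign the model will find it ambiguous too.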

Clear objectives and reasoning

We’ve all seen how powerful LLMs can be when they leverage test-time compute to “think” before giving an answer. While you typically don’t need to prompt a reasoning model to do chain-of-thought reasoning, you still need to do so with older models. Cline’s OBJECTIVE section does this by telling the model to break the task into clear steps and work through them one at a time. For more info about prompt engineering with reasoning models, check out our guide here.

OBJECTIVE

You accomplish a given task iteratively, breaking it down into clear steps and working through them methodically.

1. Analyze the user's task and set clear, achievable goals to accomplish it. Prioritize these goals in a logical order.

2. Work through these goals sequentially, utilizing available tools one at a time as necessary. Each goal should correspond to a distinct step in your problem-solving process. You will be informed on the work completed and what's remaining as you go...

For our analysis of Bolt’s system message, check out our article here.

Wrapping up

Good prompts and tools are at the core of any good agent. I hope this collection of agent-related prompts is helpful! If so, please let me know and we’ll put more collections like this together.