Using LLMs to generate in-context learning (ICL) examples

Can LLMs generate examples that outperform labeled examples from a curated dataset? Plus, how many examples to use in your prompt, and a free template to get started.

Hey, Dan here.

If you’ve been praying for an article on auto-generating in-context learning examples, you’re in for a treat. If not, I promise you’ll still get tangible takeaways that will help you get better outputs from LLMs. Enjoy!

Adding examples to your prompts, also known as in-context learning (ICL), is one of the best ways to boost performance.

If those examples also include the reasoning steps (also known as Chain of Thought, or CoT) between the question and the answer, even better.

For creative writing, the examples help the model learn your style, tone, and vocabulary choice.

For tasks like classification or problem solving, ICL helps the model understand nuances and output style.

For example, let’s say you want to classify movie reviews. Using ICL, here is what your prompt might look like:

This is awesome! // Positive

This is bad! // Negative

Wow that movie was rad! // Positive

What a horrible show! //
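If you'd rather build that prompt in code than by hand, here is a minimal Python sketch. The function name `build_few_shot_prompt` and the `separator` parameter are my own placeholders, not part of any library.

```python
def build_few_shot_prompt(examples, query, separator=" // "):
    """Format labeled (text, label) pairs, then the unlabeled query, one per line."""
    lines = [f"{text}{separator}{label}" for text, label in examples]
    # Leave the label slot empty so the model fills it in.
    lines.append(f"{query}{separator}".rstrip())
    return "\n".join(lines)


examples = [
    ("This is awesome!", "Positive"),
    ("This is bad!", "Negative"),
    ("Wow that movie was rad!", "Positive"),
]
prompt = build_few_shot_prompt(examples, "What a horrible show!")
print(prompt)
```

The last line of the prompt ends at the separator, so the model's most natural continuation is the missing label.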

The challenging part of ICL is collecting, labeling, and organizing the examples to use. But this is a prompt engineering Substack, after all. Can’t we use an LLM to generate these examples?

Of course!

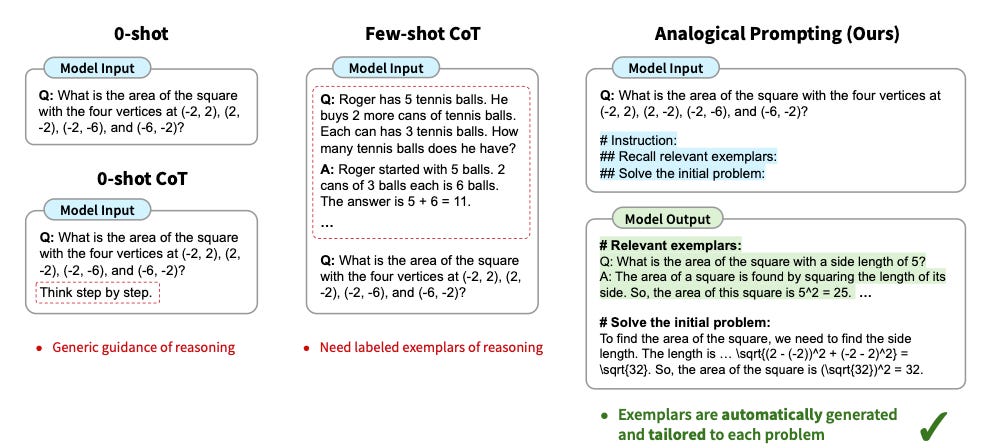

We’ll leverage a prompt engineering method called Analogical Prompting (AP). AP comes to us from a Google + Stanford paper: Large Language Models as Analogical Reasoners.

What problem does Analogical Prompting solve?

Analogical Prompting is designed to streamline the process of including CoT examples in your prompt. Rather than needing to get your own examples together with questions, rationales, and answers, AP generates it all for you.

AP does all of this with a single prompt, making it extremely efficient. Other Auto-CoT methods rely on multiple steps to dynamically select examples based on the task, usually by using an embeddings model to assess the cosine similarity between the task and the examples. While this can lead to better examples, it requires additional setup and upkeep.
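To make the retrieval approach concrete, here is a rough sketch of picking examples by cosine similarity. To keep it self-contained, `embed` is a toy word-count stand-in for a real embeddings model, and the example pool is made up. A real setup would call an embeddings API and store the vectors somewhere, which is exactly the extra setup AP avoids.

```python
import math
from collections import Counter


def embed(text):
    """Toy stand-in for an embeddings model: lowercase word counts."""
    return Counter(text.lower().split())


def cosine_similarity(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve_examples(task, pool, k=3):
    """Return the k pool entries whose questions are most similar to the task."""
    task_vec = embed(task)
    ranked = sorted(
        pool,
        key=lambda ex: cosine_similarity(task_vec, embed(ex["question"])),
        reverse=True,
    )
    return ranked[:k]


# Hypothetical example pool, for illustration only.
pool = [
    {"question": "What is the area of a square with side 3?", "answer": "9"},
    {"question": "Reverse a linked list in place", "answer": "Flip each next pointer"},
    {"question": "What is the area of a circle with radius 2?", "answer": "4*pi"},
]
top = retrieve_examples("What is the area of a square with side 5?", pool, k=2)
print([ex["question"] for ex in top])
```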

How Analogical Prompting works

Analogical Prompting is grounded in the concept that LLMs have enough knowledge from their pre-training data to generate examples for a wide range of tasks.

Putting AP into practice is extremely simple, requiring only a single prompt template. Here is what the prompt template looks like:

Prompt Template:

# Problem: [x]

# Instructions

## Tutorial: Identify core concepts or algorithms used to solve the problem

## Relevant problems: Recall three relevant and distinct problems. For each problem, describe it and explain the solution.

## Solve the initial problem:
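Here is a minimal sketch of filling in this template with Python. The function name `build_ap_prompt` and its parameters are placeholders of mine, and the sample problem is made up. The `include_tutorial` flag is there because the Tutorial line can be dropped for easier tasks.

```python
def build_ap_prompt(problem, num_examples="three", include_tutorial=True):
    """Assemble the Analogical Prompting template around a single problem."""
    sections = [f"# Problem: {problem}", "# Instructions"]
    if include_tutorial:
        # Optional knowledge-generation step; can be skipped for easier tasks.
        sections.append(
            "## Tutorial: Identify core concepts or algorithms used to solve the problem"
        )
    sections.append(
        f"## Relevant problems: Recall {num_examples} relevant and distinct problems. "
        "For each problem, describe it and explain the solution."
    )
    sections.append("## Solve the initial problem:")
    return "\n\n".join(sections)


# Hypothetical problem, for illustration only.
prompt = build_ap_prompt("Find the longest palindromic substring of a given string.")
print(prompt)
```

You would then send `prompt` to whichever model you're using. Nothing here depends on a specific provider.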

Let’s break it down

AP first prompts the model to “Identify core concepts…”. This pushes the model to consider the broader context before tackling the specific task at hand.

This type of prompting is really similar to Step-Back Prompting. Thinking broadly before generating a response helps the model, especially on challenging tasks like code generation. If your task is easier than code generation, then you could omit the Tutorial line.

Next, Analogical Prompting pushes the model to self-generate the ICL examples (“Relevant problems”). This eliminates the need for a human to do any of the labeling, data retrieval, or organization.

Lastly, the model generates an answer.

It’s important to note that the model generates all the tokens for the examples, rather than the examples being pre-fed as part of the prompt. Because the model generates both the examples and the solution, it can use the generated examples more effectively in its response. However, this also means you’ll be using more output tokens, which will probably increase your bill.

“Three” and “relevant and distinct” are two important components of the template.
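Since the tutorial, the examples, and the final answer all come back in one response, you may want to separate them afterwards. Here is a rough sketch that assumes the model echoes the template's `## ` headings in its output. That is an assumption, not a guarantee, so treat the parsing as best-effort. The sample response is made up.

```python
import re


def split_ap_response(response):
    """Split a response into {heading: body} using lines that start with '## '."""
    sections = {}
    current = None
    for line in response.splitlines():
        match = re.match(r"^##\s+(.+?):?\s*$", line)
        if match:
            # Start a new section, keyed by the lowercased heading text.
            current = match.group(1).strip().lower()
            sections[current] = []
        elif current is not None:
            sections[current].append(line)
    return {name: "\n".join(body).strip() for name, body in sections.items()}


# Made-up response, assuming the model repeats the template headings.
sample = """## Tutorial:
The area of a square is the side length squared.

## Relevant problems:
1. A square has side 4. Area = 16.

## Solve the initial problem:
Side is 5, so the area is 25."""

parsed = split_ap_response(sample)
final_answer = parsed["solve the initial problem"]
print(final_answer)
```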

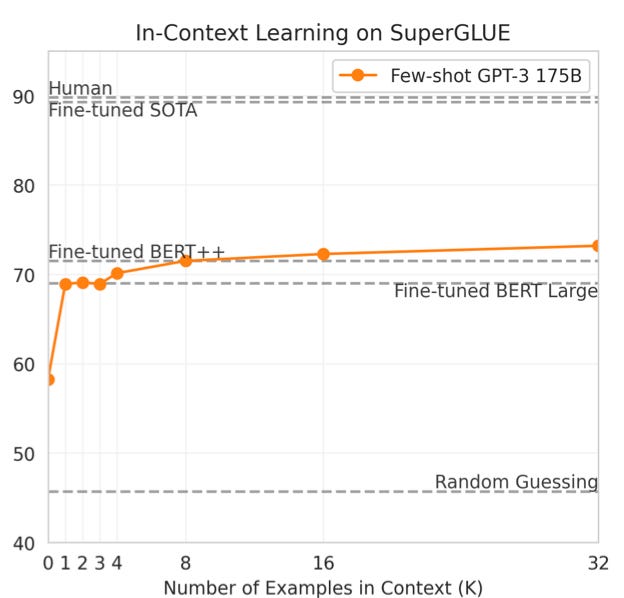

Providing 3-5 examples seems to be the sweet spot, regardless of the task you are working on. Any fewer, and you’re missing out on potential performance gains and risk the model overfitting to a small sample. On the other end, adding more examples can lead to diminishing returns.

Relevant and distinct examples are important because you want to cover a wide range of potential solutions, while preventing overlap among the examples.

Here’s an example of Analogical Prompting:

Putting Analogical Prompting to the test

Analogical Prompting was tested across various datasets, using GPT-3.5-turbo, GPT-4 and PaLM 2-L.

AP was tested against a few methods:

0-shot prompting: Just a normal prompt

0-shot CoT: “Solve the following problem, think step by step”

Few-shot CoT: CoT with a fixed set of examples (between 3 and 5)

Few-shot retrieved CoT: Rather than using a static set of examples, examples are retrieved dynamically
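To make the baselines easier to picture, here is a rough sketch of the first three as prompt builders. The 0-shot CoT wording comes from the list above. The `Q:`/`A:` layout and the example fields (`question`, `rationale`, `answer`) are my own assumptions, not the paper's exact format. Few-shot retrieved CoT would use the same layout, just with examples picked per task.

```python
def zero_shot(problem):
    """Just the problem, no extra instructions."""
    return problem


def zero_shot_cot(problem):
    """Add a step-by-step instruction, with no examples."""
    return f"Solve the following problem, think step by step.\n\n{problem}"


def few_shot_cot(problem, examples):
    """Prepend a fixed set of worked examples, each with its reasoning."""
    blocks = [
        f"Q: {ex['question']}\nA: {ex['rationale']} The answer is {ex['answer']}."
        for ex in examples
    ]
    blocks.append(f"Q: {problem}\nA:")
    return "\n\n".join(blocks)


# Made-up worked example, for illustration only.
worked = [
    {
        "question": "What is 12 * 3?",
        "rationale": "12 * 3 is 10 * 3 plus 2 * 3, which is 30 + 6.",
        "answer": "36",
    }
]
baseline_prompt = few_shot_cot("What is 15 * 4?", worked)
print(baseline_prompt)
```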

Let’s look at some tables:

Takeaways:

Across most of the datasets, AP outperforms all other methods

On the Codeforces dataset, adding the knowledge generation step (“## Tutorial: Identify core concepts or algorithms used to solve the problem”) led to a big boost in performance

The larger the model (and the pre-training data), the better AP performs

Putting Analogical Prompting into practice

I really like this prompting method because it is extremely easy to implement. It eliminates the need to find relevant examples, label them, and retrieve them at run time.

If you want to test out AP, we put together a template in PromptHub that you can use here. Test it against your current prompt and see how the outputs differ!

Happy prompting,

Dan