Here is a typical API request to OpenAI:

    {
      "model": "gpt-4o",
      "messages": [
        {
          "role": "system",
          "content": "You are a helpful assistant."
        },
        {
          "role": "user",
          "content": "Hello!"
        }
      ]
    }
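If you build the request in code rather than by hand, the same body can be assembled as a plain Python dictionary and serialized to JSON. This is a minimal sketch: the helper name `build_chat_request` is hypothetical, and it only builds the payload. Sending it with an HTTP client or an SDK is left out.

```python
import json


def build_chat_request(system_text, user_text, model="gpt-4o"):
    """Return a chat request body with one system and one user message.

    Hypothetical helper for illustration; it does not call any API.
    """
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": system_text},
            {"role": "user", "content": user_text},
        ],
    }


request_body = build_chat_request("You are a helpful assistant.", "Hello!")
print(json.dumps(request_body, indent=2))
```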

The first configurable parameter in each message is the role. Unfortunately, there is little guidance from any of the model providers on how the roles actually work.

I spent some time researching and running tests to pull together a short and sweet list of best practices. Enjoy!

System message use cases

Generally speaking, if you’re going to use a system message in a non-chat application, use it to provide high-level contextual information.

Here are a few use cases for the system message:

Setting the role ("Respond as a nutritionist.")

Providing context or instructions ("Use layman's terms.")

Guiding model behavior ("Avoid technical jargon.")

Controlling output format and style ("Reply in bullet points.")

Establishing content boundaries ("Do not provide financial advice.")
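One way to keep these high-level settings organized is to assemble the system message from separate pieces. The sketch below is only an illustration: `build_system_message` and its parameter names are hypothetical, and joining the pieces with spaces is one choice among many.

```python
def build_system_message(role, instructions=(), boundaries=()):
    """Join high-level settings into a single system message string.

    Hypothetical helper: role, instructions, and boundaries mirror the
    use cases listed above.
    """
    parts = [f"Respond as {role}."]
    parts.extend(instructions)
    parts.extend(boundaries)
    return " ".join(parts)


system_message = build_system_message(
    role="a nutritionist",
    instructions=[
        "Use layman's terms.",
        "Avoid technical jargon.",
        "Reply in bullet points.",
    ],
    boundaries=["Do not provide financial advice."],
)
print(system_message)
```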

User message use cases

If the system message is where the high-level information lives, the user message (also referred to as the ‘prompt’) is where the low-level details should go.

Here are a few use cases for the user message:

Specific question ("What are healthy breakfast options?")

Specific contextual info ("For someone with a nut allergy...")

Directing the immediate focus ("Focusing on low-carb diets...")

Details related to question/task ("Considering a 30-minute meal prep time...")

Structuring response requirements ("List ingredients followed by preparation steps.")
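To make the split concrete, here is a small sketch that puts the task-specific details in the user message and pairs it with a short system message. The helper `build_user_message` is hypothetical, and the wording of the details is adapted from the examples above.

```python
def build_user_message(question, details=(), response_format=None):
    """Combine the question, task-specific details, and a format request.

    Hypothetical helper: each piece goes on its own line.
    """
    lines = [question]
    lines.extend(details)
    if response_format:
        lines.append(response_format)
    return "\n".join(lines)


user_message = build_user_message(
    question="What are healthy breakfast options?",
    details=[
        "This is for someone with a nut allergy.",
        "Focus on low-carb diets.",
        "Assume a 30-minute meal prep time.",
    ],
    response_format="List ingredients followed by preparation steps.",
)

# High-level guidance stays in the system message; specifics go to the user.
messages = [
    {"role": "system", "content": "Respond as a nutritionist. Use layman's terms."},
    {"role": "user", "content": user_message},
]
print(user_message)
```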

The Advantages of Using Multiple Smaller Prompts

We're strong believers that each prompt should focus on one task and excel at it. Breaking down complex prompts into a series or chain of simpler prompts is an easy way to boost performance. If you're interested in learning more about prompt chaining, check out our guide: Prompt Chaining Guide.

Using multiple focused prompts to convey both high-level instructions and specific queries offers several benefits:

Clarity and Understanding: Prompt performance tends to improve when each prompt includes a single, clear instruction. Separating overarching guidelines from specific tasks leads to prompts that are easy to follow and understand.

Optimized Structure: Putting high-level instructions in the system message and task details in the user message uses the roles the way they were designed, which can improve how the model processes the information.

Ease of Iteration: Smaller prompts make it simpler to identify areas for improvement and iterate effectively, leading to better results over time.
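A chain of focused prompts can be sketched in a few lines. This is an illustration under stated assumptions, not a definitive implementation: `run_chain` is a hypothetical helper, and `fake_model` is a stand-in that echoes its input so the example runs without an API key. In practice you would swap in a function that calls your model provider.

```python
def run_chain(steps, call_model, initial_input):
    """Run prompts in order, feeding each output into the next prompt.

    steps: list of (system_message, user_template) pairs; each template
           contains an {input} placeholder.
    call_model: any function that takes a messages list and returns text.
    """
    current = initial_input
    for system_message, user_template in steps:
        messages = [
            {"role": "system", "content": system_message},
            {"role": "user", "content": user_template.format(input=current)},
        ]
        current = call_model(messages)
    return current


def fake_model(messages):
    """Stand-in for a real API call: wraps the user message in brackets."""
    return f"[{messages[-1]['content']}]"


# Each step has one job: first generate options, then reshape them.
steps = [
    ("Respond as a nutritionist.", "List breakfast options for: {input}"),
    ("Reply in bullet points.", "Turn this into a shopping list: {input}"),
]
result = run_chain(steps, fake_model, "a nut allergy")
print(result)
```

Because each step is its own prompt, you can test and revise one step without touching the others.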

System message examples

Here are a few resources if you want to see some system messages in action:

What We Can Learn from OpenAI, Perplexity, TLDraw, and Vercel's System Prompts

System Messages: Best Practices, Real-world Experiments & Prompt Injections

Last but not least is the OpenAI System Instructions Generator template, which I was able to extract via a prompt injection.

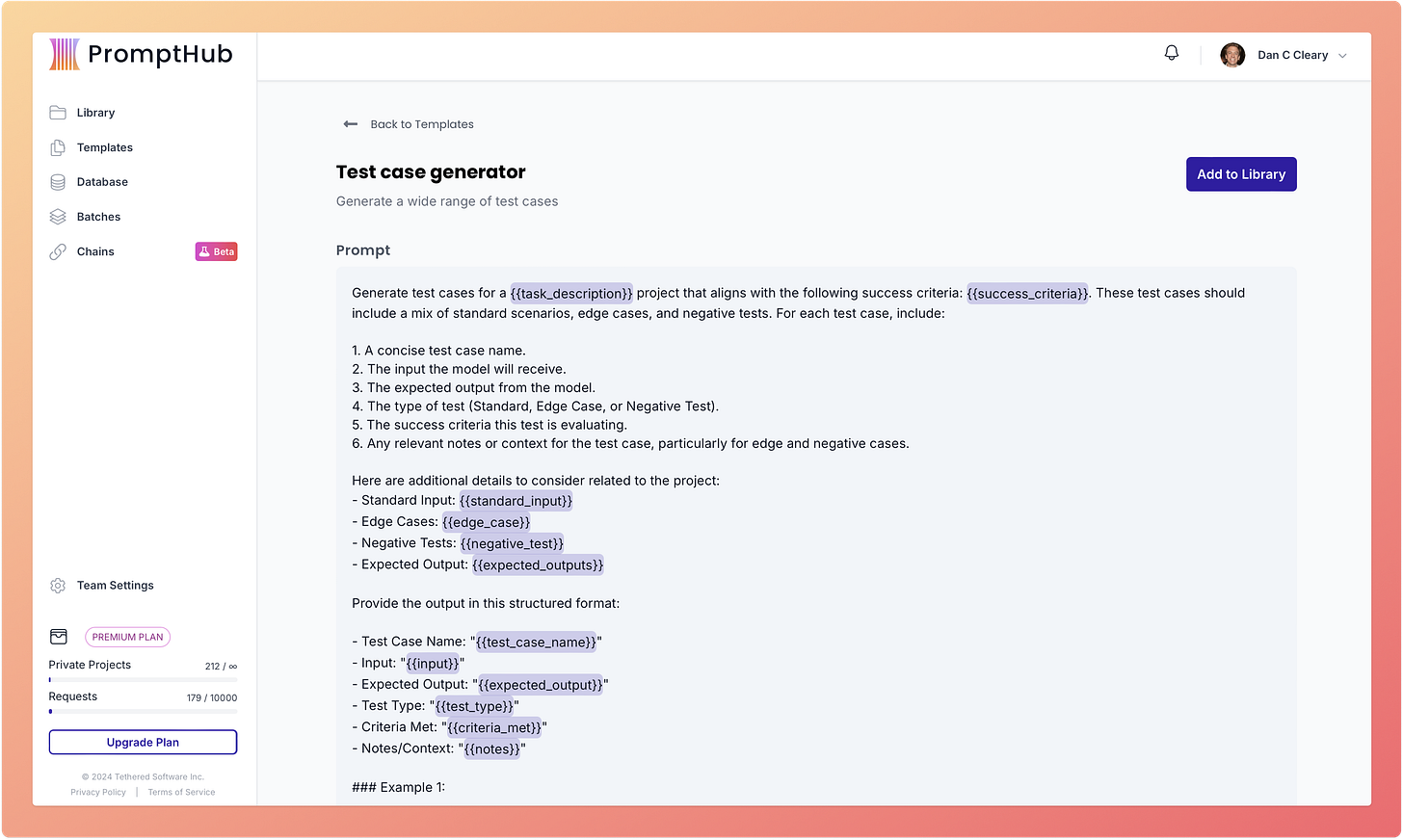

Template of the week

Testing your prompts across a variety of test cases is important. This prompt will generate examples automatically.

Two other papers I’m reading

What is the Role of Small Models in the LLM Era: A Survey

It’s unclear what the future model landscape will look like and what role SMs will play, but this paper is a good starting point.

This paper looks at the role of small models (SMs) in the era of Large Language Models (LLMs). It explores how SMs complement LLMs in areas like resource efficiency, domain-specific tasks, and interpretability while also competing in contexts requiring lower computational demands or greater transparency.

This paper dives into how external data can enhance LLM performance, reducing hallucinations while boosting domain expertise and response quality. It introduces a categorization of RAG tasks, classifying user queries into four levels based on complexity and data needs.