Since Google dropped Gemini 2.5 Pro with a 1 million token context window (and 2 million announced as coming soon), I’ve been diving deep into all things related to long context windows, and I’ve put together a few pieces on the topic recently.

Just a week or so later, Meta dropped Llama 4 Scout, which boasts a 10 million token context window.

And just this week, OpenAI dropped their first models that have a 1 million token context window.

So if we have these massive context windows, do we really need RAG? If context windows keep growing while latency and token costs keep falling, should we just pass all the context in the prompt and cache it via Cache-Augmented Generation (CAG)?

RAG vs. CAG

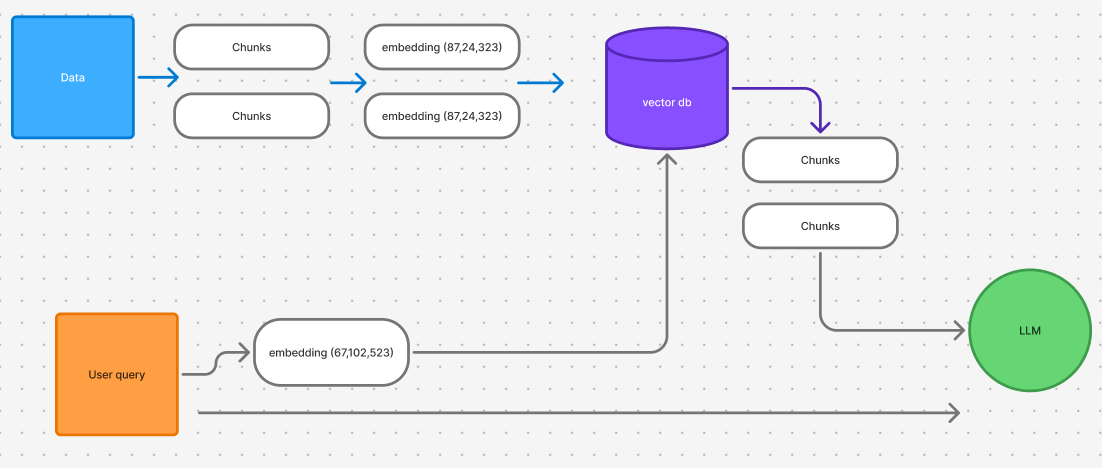

Traditionally, RAG involves a few steps:

Data Chunking and Embedding: Documents are split into smaller chunks, and each chunk is converted into an embedding vector that captures its semantic meaning.

Vector Database Storage: These vectors are stored in a vector DB.

Dynamic Retrieval: When a query comes in, the system pulls the most relevant chunks from the vector DB.

Prompt Formation: The retrieved context is concatenated with the query for the language model.
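The four steps above can be sketched as a tiny in-memory pipeline. This is a toy under loud assumptions: a bag-of-words term-count vector stands in for a real embedding model, a plain Python list stands in for a vector DB, and `chunk_text`, `embed`, `VectorStore`, and `build_prompt` are hypothetical names chosen for illustration, not any library’s API.

```python
import math
import re
from collections import Counter


def chunk_text(text: str) -> list[str]:
    """Step 1a: naive chunking, one sentence per chunk."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def embed(text: str) -> Counter:
    """Step 1b: toy 'embedding' of lowercase term counts (stand-in for a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class VectorStore:
    """Step 2: in-memory (vector, chunk) pairs standing in for a vector DB."""

    def __init__(self) -> None:
        self.items: list[tuple[Counter, str]] = []

    def add(self, chunk: str) -> None:
        self.items.append((embed(chunk), chunk))

    def search(self, query: str, k: int = 2) -> list[str]:
        """Step 3: return the k chunks most similar to the query."""
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[0]), reverse=True)
        return [chunk for _, chunk in ranked[:k]]


def build_prompt(query: str, chunks: list[str]) -> str:
    """Step 4: concatenate the retrieved context with the user query."""
    context = "\n".join(f"- {c}" for c in chunks)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


# Usage with a made-up knowledge base
docs = ("Refunds are accepted within 30 days of purchase. "
        "Standard shipping takes 5 business days. "
        "Support is available by email on weekdays.")
store = VectorStore()
for chunk in chunk_text(docs):
    store.add(chunk)

question = "How many days does shipping take?"
print(build_prompt(question, store.search(question, k=1)))
```

Every stage here is a separate moving part (chunk size, embedding model, similarity metric, `k`), which is where the extra complexity and failure points of RAG come from.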

While RAG is great for dynamically updating content and managing token costs, its multiple steps can add complexity and latency.

Cache-Augmented Generation (CAG)

With CAG, the entire reference text is sent to the model and cached ahead of time. For open-source models, you can precompute the attention key-value (KV) cache for that text, so that when a user query arrives, it is simply appended to the precomputed context.
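To make the KV-cache idea concrete, here is a toy single-head attention layer in plain Python. It is a sketch under stated assumptions: random weights, a hypothetical `embed` function, 4-dimensional vectors, and a single layer, so it says nothing about real model quality. What it does show is the mechanism: keys and values for the reference text are computed once, and each query computes K/V only for its own tokens while attending over the cached prefix, giving the same outputs as reprocessing everything from scratch.

```python
import math
import random

DIM = 4  # toy hidden size; real models use thousands


def matvec(m: list[list[float]], v: list[float]) -> list[float]:
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def softmax(xs: list[float]) -> list[float]:
    top = max(xs)
    exps = [math.exp(x - top) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def embed(token: str) -> list[float]:
    """Hypothetical deterministic token embedding (seeded by the token string)."""
    rng = random.Random(token)
    return [rng.uniform(-1, 1) for _ in range(DIM)]


class ToyAttention:
    """One attention head with random projection weights (not a trained model)."""

    def __init__(self, seed: int = 0) -> None:
        rng = random.Random(seed)

        def make() -> list[list[float]]:
            return [[rng.uniform(-1, 1) for _ in range(DIM)] for _ in range(DIM)]

        self.wq, self.wk, self.wv = make(), make(), make()

    def attend(self, x, keys, values):
        """Output for one position, attending over the given keys/values."""
        q = matvec(self.wq, x)
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(DIM) for k in keys]
        weights = softmax(scores)
        return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(DIM)]


class KVCache:
    """Keys/values for every token processed so far."""

    def __init__(self, layer: ToyAttention) -> None:
        self.layer = layer
        self.keys: list[list[float]] = []
        self.values: list[list[float]] = []
        self.projections = 0  # tokens whose K/V this cache object computed

    def fork(self) -> "KVCache":
        """Copy the cached prefix so each query extends it independently."""
        clone = KVCache(self.layer)
        clone.keys, clone.values = list(self.keys), list(self.values)
        return clone

    def append(self, token: str) -> list[float]:
        """Compute K/V for the new token only, then attend over the whole cache."""
        x = embed(token)
        self.keys.append(matvec(self.layer.wk, x))
        self.values.append(matvec(self.layer.wv, x))
        self.projections += 1
        return self.layer.attend(x, self.keys, self.values)


def full_recompute(layer: ToyAttention, tokens: list[str]) -> list[list[float]]:
    """Reference without a cache: recompute K/V for every token, causal attention."""
    xs = [embed(t) for t in tokens]
    keys = [matvec(layer.wk, x) for x in xs]
    values = [matvec(layer.wv, x) for x in xs]
    return [layer.attend(x, keys[: i + 1], values[: i + 1]) for i, x in enumerate(xs)]


layer = ToyAttention()
document = "the refund window is thirty days".split()
prefix = KVCache(layer)
for tok in document:  # done once, ahead of time
    prefix.append(tok)

query = "how long is the refund window".split()
session = prefix.fork()  # per-query copy of the cached prefix
cached_out = [session.append(t) for t in query]
```

In a real open-weight model the same idea applies per layer and per head, and inference libraries expose the cache so it can be reused across requests.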

For closed models, you’ll have to rely on the caching mechanisms provided by the companies themselves. Each provider works slightly differently; for details on OpenAI, Anthropic, and Google, check out our post here.

CAG eliminates the on-the-fly retrieval process.

Pros: Faster inference, and the full context is always available to the model rather than only the chunks a retriever happened to select.

Cons: The caching process can be resource-intensive, and the approach is limited by the maximum context window size.
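To see why caching can be resource-intensive, a back-of-envelope estimate of KV-cache memory helps. The formula below (one key and one value vector per token, per layer, per KV head) is the standard rough estimate; the model config is hypothetical, roughly the shape of an 8B-parameter model with grouped-query attention, and should be replaced with your own model’s values.

```python
def kv_cache_bytes(n_tokens: int, n_layers: int, n_kv_heads: int,
                   head_dim: int, bytes_per_value: int = 2) -> int:
    """Approximate KV-cache size for one sequence.

    Every token stores one key and one value vector (the factor of 2)
    per layer and per KV head, each head_dim values wide.
    """
    return 2 * n_layers * n_kv_heads * head_dim * n_tokens * bytes_per_value


# Hypothetical config, roughly the shape of an 8B-parameter model with
# grouped-query attention, storing keys/values in 16-bit precision.
CONFIG = {"n_layers": 32, "n_kv_heads": 8, "head_dim": 128, "bytes_per_value": 2}

for n_tokens in (100_000, 1_000_000):
    gib = kv_cache_bytes(n_tokens, **CONFIG) / 2**30
    print(f"{n_tokens:>9,} tokens -> {gib:6.1f} GiB of KV cache")
```

Under these assumptions that is 128 KiB per token, so roughly 12 GiB at 100K tokens and about 122 GiB at 1M tokens, on top of the model weights. Real models differ (more layers, more KV heads, quantized caches), so the numbers shift, but the cost grows linearly with the context you cache.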

What the Experiments Show

The data below come from experiments in these two papers:

Don’t Do RAG: When Cache-Augmented Generation is All You Need for Knowledge Tasks

Retrieval Augmented Generation or Long-Context LLMs? A Comprehensive Study and Hybrid Approach

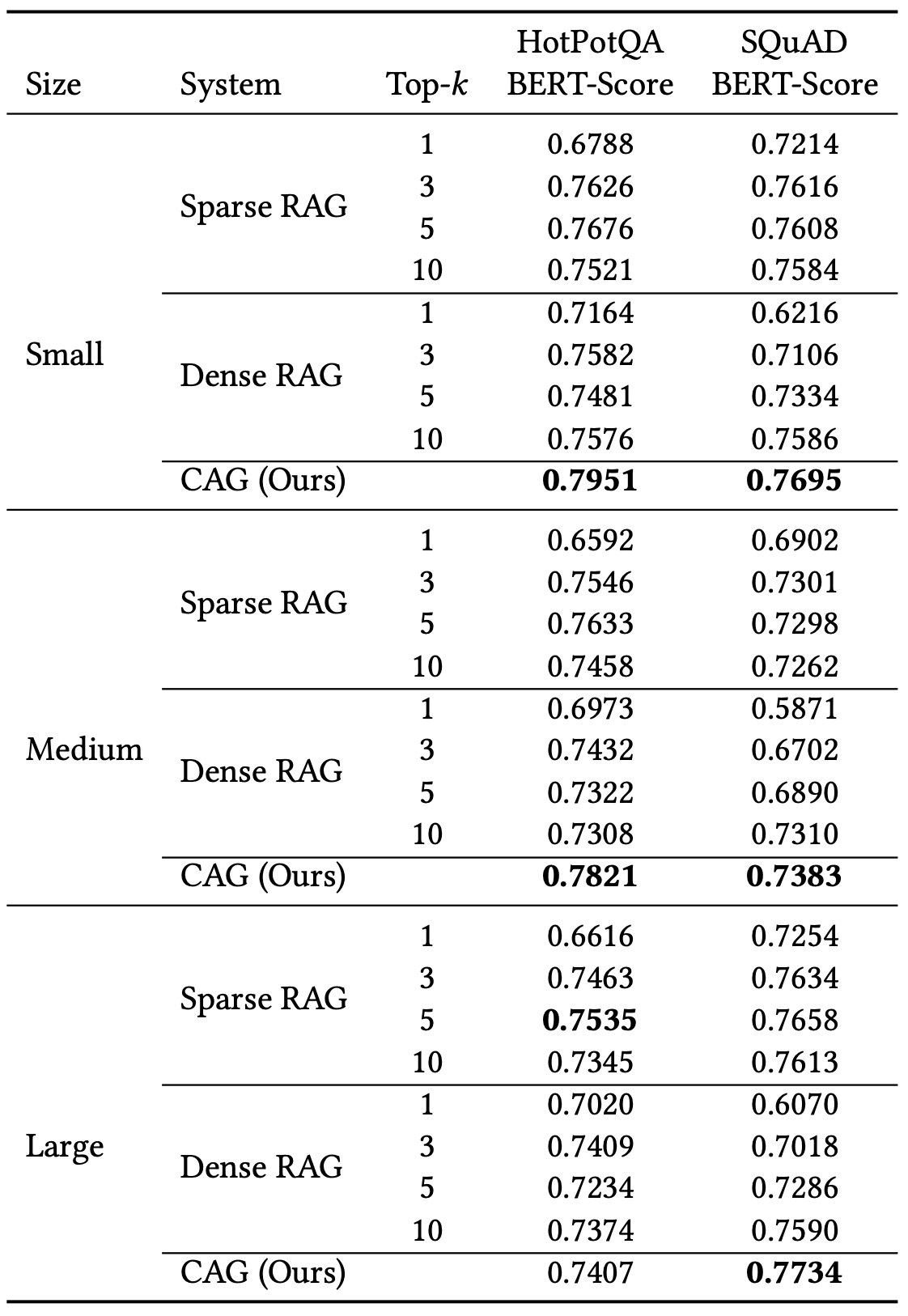

Both papers essentially tested RAG vs CAG. Here are some interesting tables and graphs.

CAG outperforms RAG in almost every experiment variant

The performance gap between CAG and RAG narrows slightly as the amount of context passed in increases

CAG generation time is much lower since it doesn’t need to do any retrieval

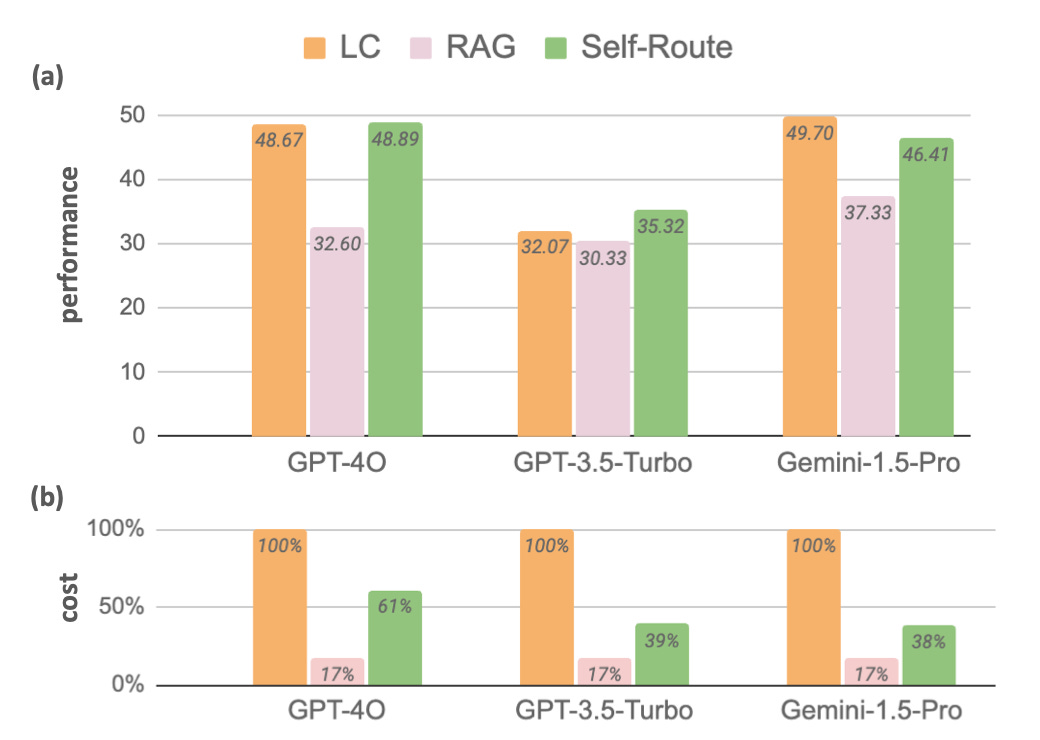

Again, CAG (labeled LC, for Long Context, in this paper) consistently outperforms RAG

Here CAG looks more expensive than RAG because the comparison covers only the first request, which has to process and cache the entire context.
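A small worked example shows why the first request skews the comparison. This sketch counts input cost in "uncached input token" units and ignores output tokens (assumed identical for both approaches). The cache-write and cache-read multipliers are hypothetical placeholders: some providers charge a premium to write the cache and a discount to read it, others only discount reads, so check your provider’s pricing. The token counts are made up.

```python
def cag_request_costs(n_requests: int, context_tokens: int, query_tokens: int,
                      cache_write_mult: float = 1.25,
                      cache_read_mult: float = 0.1) -> list[float]:
    """Input cost of each request, in 'uncached input token' units.

    Request 1 writes the full context to the cache; later requests read it
    back at a discount. Both multipliers are hypothetical placeholders.
    """
    costs = []
    for i in range(n_requests):
        mult = cache_write_mult if i == 0 else cache_read_mult
        costs.append(context_tokens * mult + query_tokens)
    return costs


def rag_request_cost(retrieved_tokens: int, query_tokens: int) -> int:
    """RAG sends only the retrieved chunks plus the query on every request."""
    return retrieved_tokens + query_tokens


cag = cag_request_costs(n_requests=5, context_tokens=500_000, query_tokens=100)
rag = rag_request_cost(retrieved_tokens=5_000, query_tokens=100)
print("CAG first request:", cag[0])
print("CAG later requests:", cag[1])
print("RAG per request:", rag)
```

With these placeholder numbers the first CAG request costs roughly 12 times as much as each later one, so a benchmark that only measures the first request captures CAG at its most expensive.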

Common Failure Modes for RAG

The second study also identifies four common reasons why RAG fails on certain queries:

Multi-step Reasoning: RAG struggles with questions that require linking multiple facts.

General Queries: Vague questions make it hard for the retriever to find relevant chunks.

Complex Queries: Deeply nested or multipart questions confuse the retriever.

Implicit Queries: Questions that require reading between the lines, such as inferring causes in a narrative, tend to be problematic.
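To illustrate the multi-step failure mode, here is a toy word-overlap retriever standing in for embedding search. Real retrievers are far better at matching meaning, but they face the same structural problem: the fact that answers the question may share almost nothing with the question itself. The documents and names are fictional.

```python
import re


def words(text: str) -> set[str]:
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Toy lexical retriever: rank chunks by how many words they share with the query."""
    q = words(query)
    return sorted(chunks, key=lambda c: len(q & words(c)), reverse=True)[:k]


# Fictional corpus. Answering the question needs BOTH of the first two facts.
chunks = [
    "Priya Shah wrote the novel Glass Harbor.",         # hop 1: book -> author
    "Priya Shah was born in Leeds.",                    # hop 2: author -> birthplace
    "The novel Glass Harbor was adapted into a film.",  # distractor
]
query = "Where was the author of Glass Harbor born?"

top = retrieve(query, chunks, k=2)
print(top)
# The hop-2 fact shares only "was" and "born" with the question; the link
# "Priya Shah" appears only in the hop-1 chunk, so a single retrieval pass
# ranks the hop-2 fact last and the answer (Leeds) never reaches the prompt.
```

With the whole corpus in context, as in CAG, the model sees both facts and can link them itself.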

The Trade-Offs

Each approach has its own strengths:

RAG is ideal when your data updates frequently. Its modular design isolates data processing, making testing easier. However, this method brings additional complexity and multiple potential failure points.

CAG provides a streamlined, rapid-response solution, best suited for applications where the data remains relatively static. It’s simpler to set up, cost-effective, and fast, assuming you have an effective caching strategy.

Looking Ahead

While the experiment results look promising, and the needle-in-a-haystack results from model providers look amazing, how it actually works for you and me is always a different story. Next up, I’ll dive into how well models perform when you actually use a large portion of their context window.