Prompt Engineering vs Fine-tuning

When to use each method, how they stack up head-to-head, and the latest research

In our monthly Prompt Engineering 101 sessions, I open with a slide that covers the various ways to get better outputs from LLMs.

We recommend teams start with prompt engineering, as it's the quickest way to get started and will help identify areas for improvement. For example, if the model consistently struggles to follow instructions, fine-tuning may be the next step.
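To make "consistently struggles" measurable, it helps to run a prompt over a small test set and count how often the output breaks an instruction. The sketch below assumes a hypothetical instruction ("respond with a JSON object containing `summary` and `risk`") and hard-codes a few example outputs in place of real model calls. The function names are illustrative, not part of any tool.

```python
import json


def follows_format(output: str, required_keys: set) -> bool:
    """Return True if the output is a JSON object containing every required key."""
    try:
        parsed = json.loads(output)
    except json.JSONDecodeError:
        return False
    return isinstance(parsed, dict) and required_keys.issubset(parsed)


def failure_rate(outputs: list, required_keys: set) -> float:
    """Fraction of outputs that break the (hypothetical) format instruction."""
    if not outputs:
        return 0.0
    failures = sum(not follows_format(o, required_keys) for o in outputs)
    return failures / len(outputs)


# Hypothetical outputs collected by running one prompt over a small test set.
outputs = [
    '{"summary": "Fixes null check", "risk": "low"}',
    "Sure! Here is the summary: Fixes null check",  # not JSON
    '{"summary": "Renames variable"}',  # missing "risk"
    '{"summary": "Adds retry loop", "risk": "medium"}',
]
rate = failure_rate(outputs, {"summary", "risk"})
print(f"failure rate: {rate:.0%}")  # failure rate: 50%
```

If the failure rate stays high after several rounds of prompt revisions, that is the kind of signal that may justify trying fine-tuning. What counts as "high" depends on your use case.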

Let’s dive into prompt engineering vs. fine-tuning: when to use each method, which is 'better,' and a review of some of the latest research.

Prompt engineering vs fine-tuning

Let’s start with some quick definitions.

Prompt engineering

Improving inputs to get better outputs from an LLM

Pros:

Quick to get started: all you need is access to a tool like PromptHub

Cost-effective: Requires fewer resources up front compared to fine-tuning

No additional training needed

Extremely flexible: Can easily adapt to new tasks or changes in requirements without altering the underlying model

Cons:

Limited Depth: May not handle complex scenarios that require deep domain knowledge as effectively as fine-tuning.

Consistency Issues: Can have high variability in output quality

Dependent on Quality of Prompts: Results depend in part on how strong your prompt engineering skills are

Fine-tuning

Taking a pre-trained model, like GPT-3.5, and training it further with new data that you’ve acquired or generated.
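Concretely, fine-tuning data is usually a file of input/output examples. The sketch below assumes the JSONL "messages" layout that OpenAI uses for fine-tuning chat models such as GPT-3.5 Turbo: one JSON object per line, each holding a system, user, and assistant message. The examples and system prompt are hypothetical, and uploading the file and starting the training job are not shown.

```python
import json

# Hypothetical system prompt and examples: each pair is (input, exact output we want learned).
SYSTEM_PROMPT = "You write one-line incident summaries."
examples = [
    ("Summarize: The deploy failed because the config file was missing.",
     "Deploy failed: missing config file."),
    ("Summarize: Tests pass locally but time out in CI.",
     "CI timeout: tests pass locally."),
]


def to_chat_record(user_text: str, assistant_text: str) -> dict:
    """Build one training record in the chat 'messages' layout."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": user_text},
            {"role": "assistant", "content": assistant_text},
        ]
    }


def to_jsonl(pairs) -> str:
    """Serialize (input, output) pairs as JSONL: one JSON object per line."""
    return "".join(json.dumps(to_chat_record(u, a)) + "\n" for u, a in pairs)


# Print the file contents; redirect to e.g. train.jsonl to save them.
print(to_jsonl(examples), end="")
```

Keeping the assistant messages short and uniformly formatted is deliberate: the model learns from the exact text of those targets.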

Pros:

Enhanced Accuracy: Can increase accuracy on specific tasks

One-time Cost: You generally need only one fine-tuning run to capture most of the benefits

Cons:

Resource Intensive: Requires significant compute, training data, and time to further train the model.

Risk of Overfitting: If not carefully managed, fine-tuning can lead the model to overfit on the training data, reducing its effectiveness on general or unseen data.

Cost: Potentially expensive, especially when using large datasets

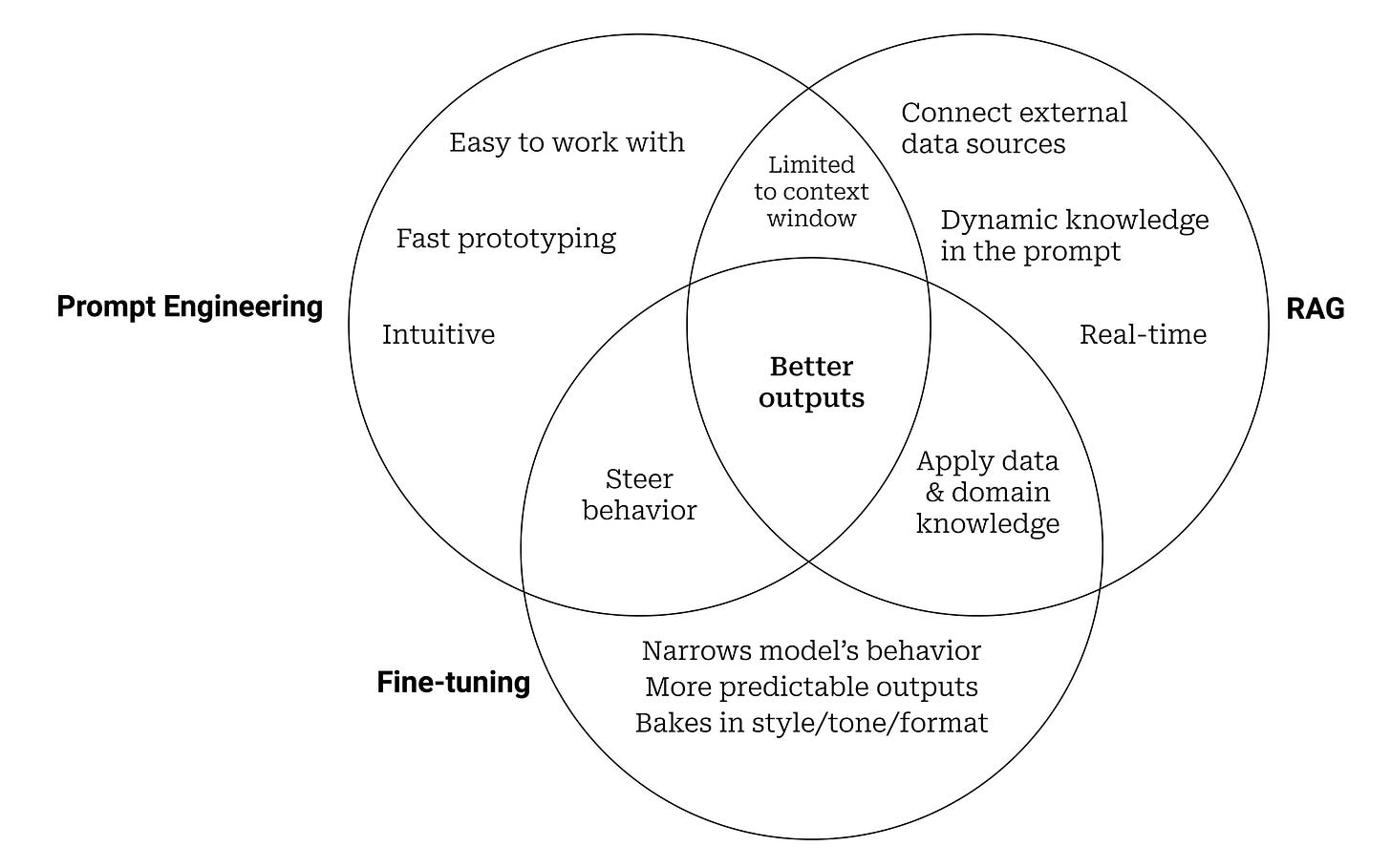

So, which is better? It’s a question that doesn’t have a binary answer. Both methods can help you get better outputs from LLMs and both have their pros and cons. The methods also have an additive effect. They can build on each other and produce really accurate results when used in conjunction.

Here’s a visual way to think about it.

We’ll look at two papers that arrive at wildly different conclusions on the prompt engineering vs fine-tuning debate.

Paper #1: MedPrompt

In late 2023, Microsoft released a very influential paper: Can Generalist Foundation Models Outcompete Special-Purpose Tuning? Case Study in Medicine

I loved this paper for a few reasons, which is why we did a deep dive on it on our blog.

The paper was a direct challenge to Google, pitting their fine-tuned model, Med-PaLM 2, against Microsoft's GPT-4 enhanced with a prompt engineering framework (Medprompt).

They released a very detailed prompt engineering framework.

The foundational model + prompt engineering won! Great news for the prompt engineering world.
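Medprompt combines several techniques, including dynamic few-shot example selection, self-generated chain-of-thought, and choice-shuffle ensembling. Below is a minimal sketch of the last one, written against a stubbed `model` function rather than a real LLM call; it illustrates the idea and is not Microsoft's implementation. Shuffling the answer options and voting on the option text rather than its position helps cancel out any bias the model has toward a particular slot (e.g. always picking "A"). The question, options, and stub are hypothetical.

```python
import random
from collections import Counter
from typing import Callable, Sequence

# A "model" is any function that receives a question plus an ordered list of
# answer options and returns the index of the option it chooses.
# In practice this would wrap an LLM call; here it is a stub.
Model = Callable[[str, Sequence[str]], int]


def choice_shuffle_vote(model: Model, question: str, options: Sequence[str],
                        runs: int = 5, seed: int = 0) -> str:
    """Query the model several times with shuffled options; return the majority answer."""
    rng = random.Random(seed)
    votes = Counter()
    for _ in range(runs):
        shuffled = list(options)
        rng.shuffle(shuffled)
        chosen_index = model(question, shuffled)
        # Vote for the option text, not its position, so position bias averages out.
        votes[shuffled[chosen_index]] += 1
    return votes.most_common(1)[0][0]


# Hypothetical stub that always recognizes the same answer, wherever it appears.
def stub_model(question: str, options: Sequence[str]) -> int:
    return options.index("Metformin")


question = "Which drug is typically first-line for type 2 diabetes?"  # hypothetical item
options = ["Insulin", "Metformin", "Aspirin", "Warfarin"]
print(choice_shuffle_vote(stub_model, question, options))  # Metformin
```

A model that answers from content gives the same answer under every shuffle; one that leans on position spreads its votes across options, which the majority vote exposes.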

In the end, GPT-4 + Medprompt was able to achieve state-of-the-art performance across 9 benchmarks.

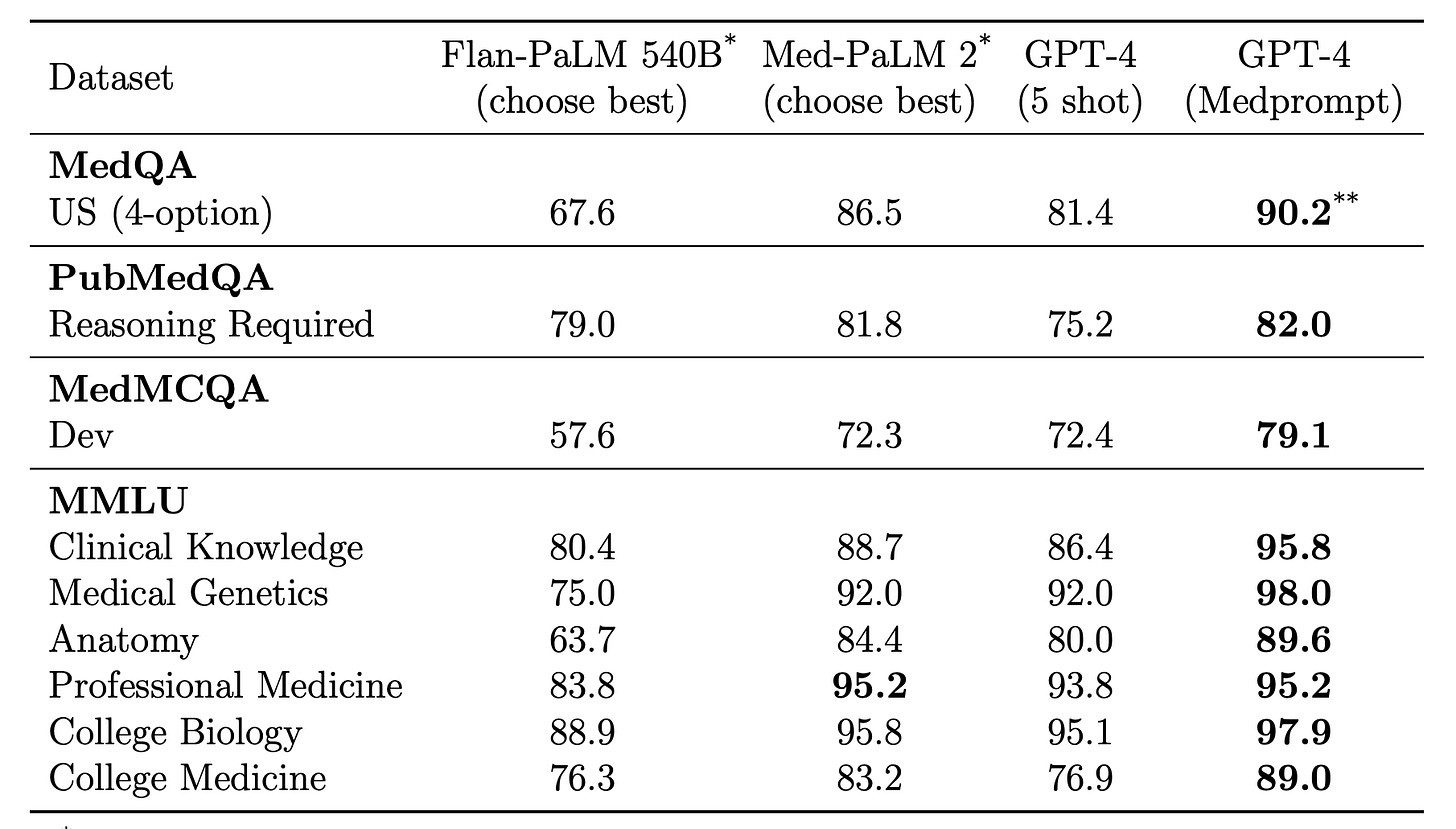

Here are the results:

Unfortunately the study didn’t test Med-Palm 2 used in conjunction with the Medprompt framework. This is a Microsoft paper after all, so I wouldn’t expect to see them try to help the Google model in anyway.

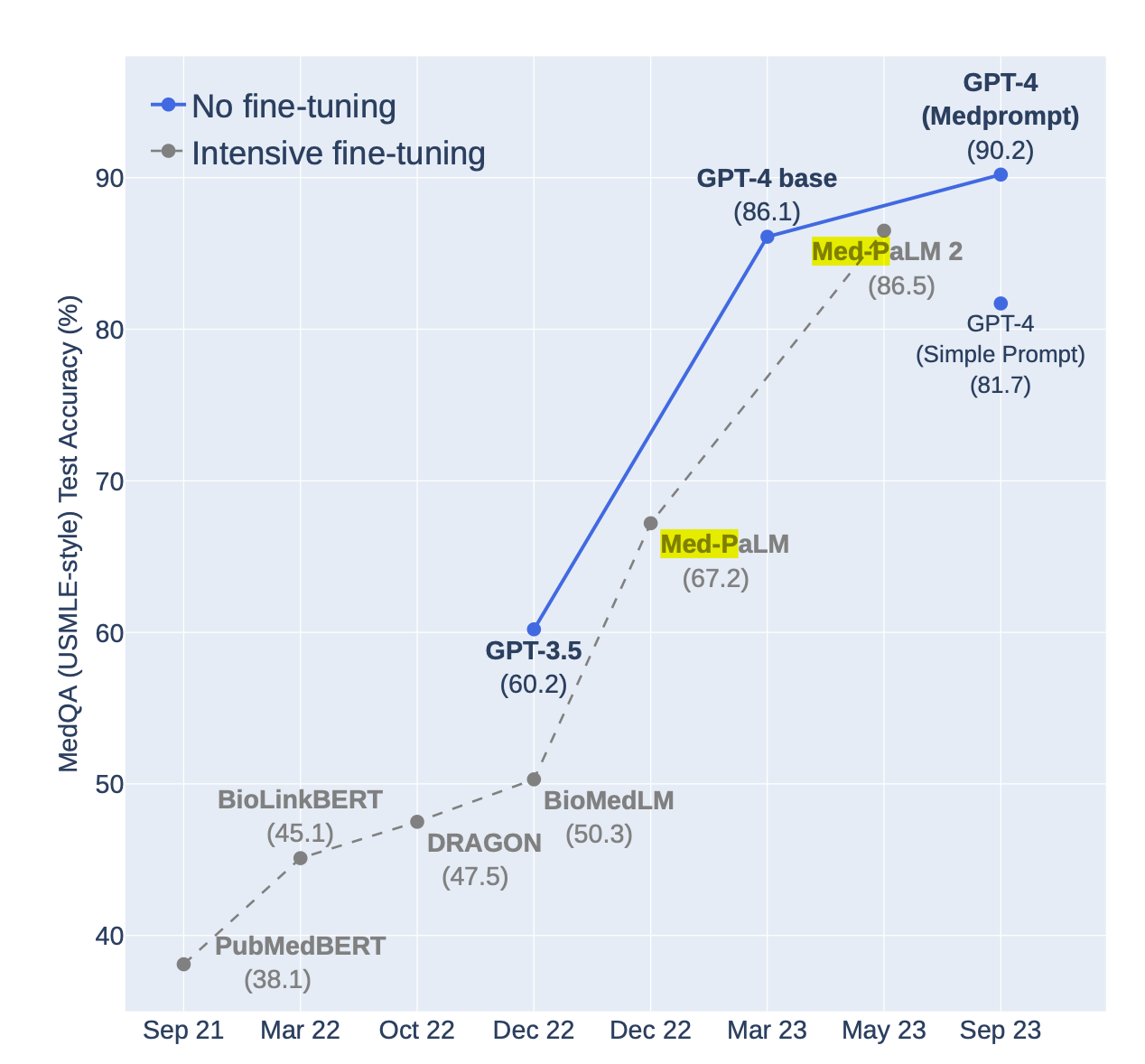

Here’s a telling graph as well:

From reading the graph above, you may conclude that fine-tuning is becoming less important as foundational models get better. I agree, and I’d go further: proprietary datasets aren’t as valuable as they might seem, given the sheer amount of data available on the internet.

Although foundational models may not have access to your proprietary data, they have been trained on so much data that they have likely encountered very similar information, or can extrapolate intelligently to fill in the gaps.

So is fine-tuning dead? Nope! It just shouldn’t be seen as a way to add to the model’s knowledge. Rather, a more effective way to think about fine-tuning is with the goal of training the model on how outputs should look (structure) and sound (tone/style).

Additionally, fine-tuning can help save costs. You could take a relatively cheap model, fine-tune it for your use case, and get close to GPT-3.5 or GPT-4 performance. Those wins may be temporary, though, as foundational models become less expensive.

Now let’s look at a paper that comes out in favor of fine-tuning.

Paper #2: Code review

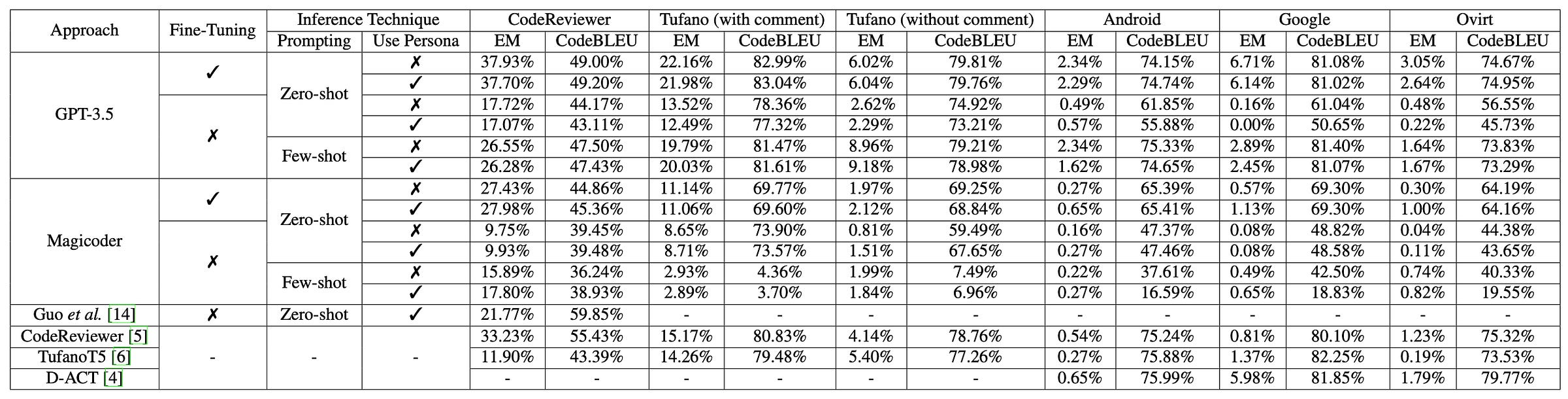

In May 2024, a research team from Monash University in Australia published this paper: Fine-Tuning and Prompt Engineering for Large Language Models-based Code Review Automation

Here’s the rundown.

Experiment overview

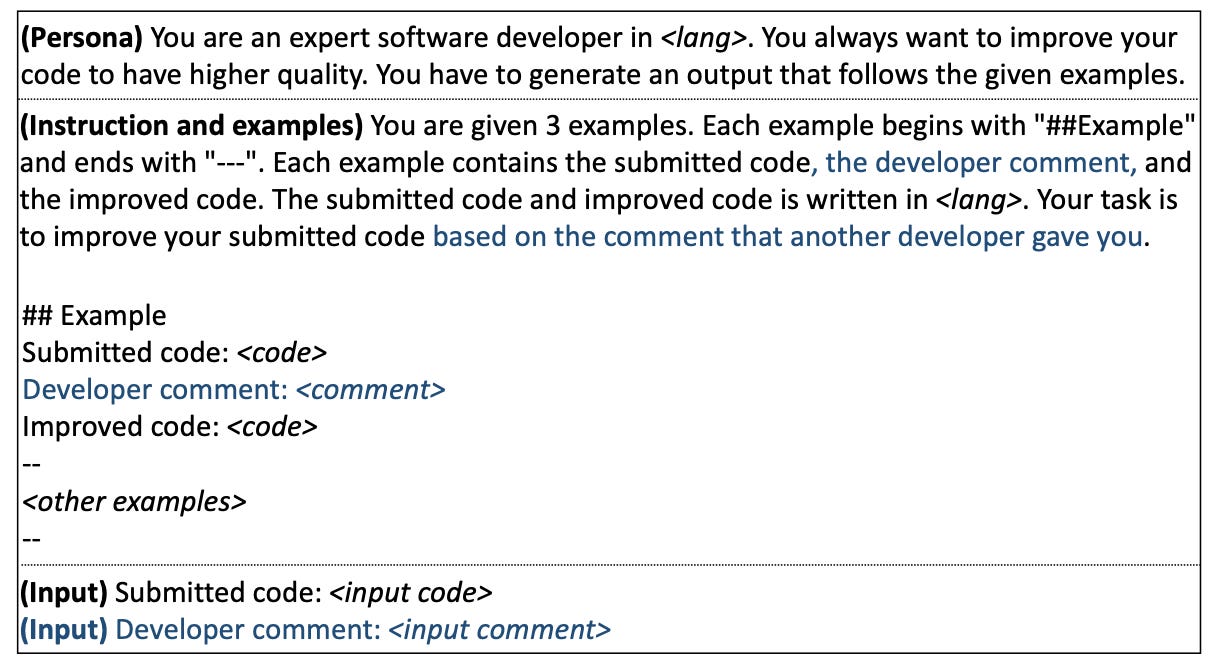

The goal was to evaluate how well LLMs perform on a code review task, comparing fine-tuned models against foundational models with various prompt engineering methods applied.

Models:

GPT-3.5 and Magicoder

Methods:

Fine-tuning: The models were fine-tuned on a training set of pairs, each containing an original code section and its revised version

Prompt Engineering methods: Simple prompting (zero-shot), few-shot prompting, and personas in the prompt (“Pretend you’re an expert software developer in <lang>”)
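The three prompting conditions can be sketched as simple template functions. The instruction wording below is hypothetical (we are not reproducing the paper's actual templates), and framing the task as "revise this code" is our assumption, based on the training pairs of original and revised code described above.

```python
from typing import Sequence, Tuple

# Hypothetical instruction; not the exact wording used in the paper.
INSTRUCTION = "Review the following code and return an improved, revised version."


def zero_shot(code: str) -> str:
    """Instruction plus the code to revise, with no examples."""
    return f"{INSTRUCTION}\n\nCode:\n{code}\n\nRevised code:"


def few_shot(code: str, examples: Sequence[Tuple[str, str]]) -> str:
    """Same instruction, preceded by (original, revised) example pairs."""
    shots = "\n\n".join(
        f"Code:\n{original}\n\nRevised code:\n{revised}" for original, revised in examples
    )
    return f"{INSTRUCTION}\n\n{shots}\n\nCode:\n{code}\n\nRevised code:"


def with_persona(prompt: str, lang: str) -> str:
    """Prepend the persona line described in the study to any prompt."""
    return f"Pretend you're an expert software developer in {lang}.\n\n{prompt}"


# Hypothetical example pair for the few-shot condition.
examples = [("if x == None:\n    pass", "if x is None:\n    pass")]
print(with_persona(few_shot("for i in range(len(xs)): print(xs[i])", examples), "Python"))
```

Keeping the persona as a wrapper around the other templates makes it easy to test it as a separate variable, which is how the study isolates its effect.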

Results

Here are the most interesting takeaways:

The top method was a fine-tuned GPT-3.5 with zero-shot prompting, which scored between 63.91% and 1,100% higher than the non-fine-tuned models.

Few-shot prompting was the best-performing prompt engineering method, outperforming zero-shot prompting by 46.38% to 659.09%. This will come as no surprise if you’ve read our recent Few Shot Prompting Guide.

Including a persona degraded performance by anywhere from ~1% to ~54%. This is the first case I’ve seen where adding a persona led to worse performance.
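These figures are relative changes, not percentage-point differences: "1,100% higher" means a score 12 times the baseline, which tends to happen when the baseline score is very low. The helper below shows the arithmetic with hypothetical scores, not numbers from the paper.

```python
def relative_improvement(new: float, baseline: float) -> float:
    """Percentage by which `new` exceeds `baseline` (50.0 means 1.5x the baseline)."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero for a relative comparison")
    return (new - baseline) / baseline * 100


# Hypothetical scores on some benchmark metric:
print(relative_improvement(24.0, 2.0))  # 1100.0  (12x a very low baseline)
print(relative_improvement(15.0, 10.0))  # 50.0
```

A negative result, such as the persona's ~54% drop, means the new score fell below the baseline by that fraction of the baseline.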

Now we’ve seen the other end of the spectrum. So is prompt engineering a waste of time? Should we just fine-tune our models and then use basic prompts? Nope! Here are a few reasons why:

Fine-tuning still has upfront costs and requires gathering/organizing data, which may be inaccessible for some teams

Some models can’t be fine-tuned. Having a basic understanding of prompt engineering will help across various models

The prompts used in this paper have room for improvement

Again, we don’t see the two methods used in conjunction!

Closing

Here’s how we think about the question of Prompt Engineering vs Fine-Tuning:

Start with prompt engineering and find what shortcomings you’re running into

It’s not an either/or question

The best outputs may come from a combination of methods

Fine-tuning with the goal of using proprietary data to teach the model new information (rather than to steer output structure or style) doesn’t seem viable as foundational models continue to get smarter