Prompt engineering best practices for In-Context Learning

Plus, 2 other papers I'm reading and a new prompt template

A quick programming note: The Prompt Engineering Substack will now be sent weekly on Fridays, and each edition will include a few of the latest research papers I’m reading related to prompt engineering, plus a new prompt template.

We’re always tweaking things, so if there are any ways we can make this newsletter more helpful for you, just reply and let me know!

We just wrapped up a whole guide on In-Context Learning (ICL). Between that guide and our few-shot prompting guide, I’ve read more than a dozen papers on how to include examples in prompts.

I'm going to distill all the best practices into a single, easy-to-scan list you can refer to whenever you're adding examples to your prompt.

In-Context Learning best practices

1. Use high-quality and relevant examples

This one probably goes without saying, but the examples you use need to be high quality and relevant to the problem you’re asking the model to solve.

Spending some time ensuring your examples are high quality is well worth the small upfront commitment.

In the prompt below you’ll see the included example (in bold) is related to product feedback.

Good prompt example

Your job is to classify sentiment of product reviews. Classify the following review:

The product works great! // Positive

The product doesn’t work for our use case //

In the prompt below, you’ll see the example (in bold) isn’t directly relevant to the product.

Bad prompt example

Your job is to classify sentiment of product reviews. Classify the following review:

I wish the marketing events were more fun // Negative

The product doesn’t work for our use case //

2. Use a variety of example types

You want the model to "learn" as much as possible from the examples you provide. So, it's key that the examples are diverse and cover as much of the problem scope as possible.

The prompt below includes all three of the possible answer choices for our sentiment classifier (Positive, Negative, Neutral), helping the model understand how to handle each situation.

Good prompt example

Your job is to classify sentiment of product reviews. Classify the following review:

The product works great! // Positive

The product doesn’t work for our use case // Negative

The product is alright I guess // Neutral

The product doesn't satisfy our requirements //

It's also important to think about edge cases. What if the product feedback is completely unrelated or just a string of random characters? You could either update the instructions to guide the model on how to handle that situation, or include an example.

Better prompt example

Your job is to classify sentiment of product reviews. Classify the following review:

The product works great! // Positive

The product doesn’t work for our use case // Negative

The product is alright I guess // Neutral

LeBron James is the best basketball player ever // Not applicable

The product doesn't satisfy our requirements //

The prompt below is not ideal because it only provides positive examples. This will skew the model toward generating this type of output, even in cases where the feedback is negative.

Bad prompt example

Your job is to classify sentiment of product reviews. Classify the following review:

The product works great! // Positive

The product is awesome // Positive

The product's fantastic // Positive

The product doesn't satisfy our requirements //
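Before sending a prompt, a quick coverage check can catch a lopsided example set like the one above. This is a minimal sketch, not a standard tool: the `LABELS` list and the `label_coverage` helper are names I made up for illustration, and the label set assumes the four outcomes used in this section.

```python
from collections import Counter

# Hypothetical label set, taken from the sentiment examples in this section.
LABELS = ["Positive", "Negative", "Neutral", "Not applicable"]

def label_coverage(examples, labels=LABELS):
    """Return per-label counts and the labels that have no example at all."""
    counts = Counter(label for _, label in examples)
    missing = [label for label in labels if counts[label] == 0]
    return counts, missing

# The "bad" prompt above: every example is Positive.
skewed = [
    ("The product works great!", "Positive"),
    ("The product is awesome", "Positive"),
    ("The product's fantastic", "Positive"),
]

counts, missing = label_coverage(skewed)
print(missing)  # ['Negative', 'Neutral', 'Not applicable']
```

If `missing` isn't empty, that's a cue to add an example (or an instruction) for each uncovered label.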

3. Consistent formatting

Formatting plays two roles when using in-context examples.

First, the examples themselves should follow a similar format and “show” the model how you want it to respond.

Second, the examples should follow patterns the model likely saw during training. For example, "Q:" typically precedes a question, and "A:" typically precedes an answer. This can be harder to determine, especially with larger closed-source models.

The prompt below uses a consistent format: Feedback // sentiment. Additionally, the first letter of the sentiment is always capitalized.

Good prompt example

Your job is to classify sentiment of product reviews. Classify the following review:

The product works great! // Positive

The product doesn’t work for our use case // Negative

The product is alright I guess // Neutral

The product doesn't satisfy our requirements //

The prompt below is poor because it lacks consistent formatting for the separator between feedback and sentiment. The capitalization is inconsistent, and the sentiments themselves vary—sometimes it's just a single word, while other times it's a full sentence.

Bad prompt example

Your job is to classify sentiment of product reviews. Classify the following review:

The product works great! - positive

The product doesn’t work for our use case = bad

The product is alright I guess → I Guess neutral

The product doesn't satisfy our requirements //
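One way to keep the format from drifting is to generate the prompt from structured data instead of typing each example by hand. The sketch below assumes examples are stored as (feedback, label) pairs; `build_prompt` and `INSTRUCTION` are illustrative names, not part of any library.

```python
# Instruction text copied from the prompts above.
INSTRUCTION = (
    "Your job is to classify sentiment of product reviews. "
    "Classify the following review:"
)

def build_prompt(examples, review, separator=" // "):
    """Render every example as 'feedback // Label' and leave the last label open."""
    lines = [INSTRUCTION, ""]
    for text, label in examples:
        # strip() and capitalize() keep spacing and casing identical across examples.
        lines.append(f"{text.strip()}{separator}{label.strip().capitalize()}")
    # The review to classify ends with the separator so the model fills in the label.
    lines.append(f"{review.strip()}{separator}".rstrip())
    return "\n".join(lines)

# Labels are deliberately messy here to show the normalization.
examples = [
    ("The product works great!", "positive"),
    ("The product doesn't work for our use case ", "NEGATIVE"),
    ("The product is alright I guess", "Neutral"),
]

prompt = build_prompt(examples, "The product doesn't satisfy our requirements")
print(prompt)
```

Because the separator and capitalization live in one place, changing the format later is a one-line edit rather than a find-and-replace across every example.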

4. Order matters

Example order matters in two ways:

The order of the examples, regardless of their complexity.

The order of examples in relation to how complex and relevant they are.

The data on this is somewhat mixed, but in a paper from as far back as 2021, researchers found significant variance in performance when testing different orderings of the same examples.

Some papers have found that performance increases when ordering examples from simple to complex, while others suggest placing the most relevant example at the end to leverage the model’s bias to give more weight to the last tokens it sees (see more on this here: A Survey on In-context Learning).

Either way, my takeaway is that once you have a set of examples, it's worth testing them in different orders.
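Here's a rough sketch of what that testing could look like. It tries every ordering of a small example set and ranks them by a score. The scoring function is the part you'd have to supply yourself (for instance, accuracy on a small labeled test set after calling your model); `toy_score` below is only a placeholder so the snippet runs, and `rank_orderings` is a name I made up.

```python
from itertools import permutations

def rank_orderings(examples, score_fn, limit=None):
    """Score each ordering with score_fn (higher is better), best first."""
    scored = []
    for i, ordering in enumerate(permutations(examples)):
        # permutations() grows factorially, so cap it for larger example sets.
        if limit is not None and i >= limit:
            break
        ordering = list(ordering)
        scored.append((score_fn(ordering), ordering))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored

def toy_score(ordering):
    # Placeholder only: pretends orderings that end on a Negative example do better.
    # Replace with a real evaluation against your own labeled data.
    return 1.0 if ordering[-1][1] == "Negative" else 0.0

examples = [
    ("The product works great!", "Positive"),
    ("The product doesn't work for our use case", "Negative"),
    ("The product is alright I guess", "Neutral"),
]

ranked = rank_orderings(examples, toy_score)
best_score, best_order = ranked[0]
print(len(ranked))  # 6 orderings for 3 examples
```

With more than five or six examples, the number of orderings gets large quickly, so passing a `limit` (or sampling random shuffles instead) is probably more practical.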

5. Avoid example clustering

This one comes directly from the paper: The Prompt Report: A Systematic Survey of Prompting Techniques.

It's best to stagger your labeled examples rather than cluster them. One reason for this is the model’s bias to give more weight to the last tokens it sees. If your last 4 examples are all of the same type, this could introduce bias.

In the prompt below, the labels are not clustered.

Good prompt example

Your job is to classify sentiment of product reviews. Classify the following review:

The product works great! // Positive

The product doesn’t work for our use case // Negative

The product is alright I guess // Neutral

The product doesn't satisfy our requirements //

In the prompt below, the labels are clustered, with three out of the four labels being “Negative” and occurring in a row.

Bad prompt example

Your job is to classify sentiment of product reviews. Classify the following review:

The product works great! // Positive

The product doesn’t work for our use case // Negative

The product is not good // Negative

The product doesn't satisfy our requirements // Negative

The product has helped streamline our workflow //
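Clustering is easy to measure and, to a degree, easy to fix in code. The sketch below is one possible approach, not the method from the paper: `longest_label_run` reports the longest streak of identical labels, and `stagger` greedily picks the most common remaining label that differs from the previous one. Both helper names are my own.

```python
from collections import defaultdict
from itertools import groupby

def longest_label_run(examples):
    """Length of the longest streak of consecutive examples sharing a label."""
    runs = (len(list(group)) for _, group in groupby(examples, key=lambda ex: ex[1]))
    return max(runs, default=0)

def stagger(examples):
    """Greedy reorder: avoid repeating the previous label whenever possible."""
    remaining = defaultdict(list)
    for example in examples:
        remaining[example[1]].append(example)
    staggered, last_label = [], None
    while remaining:
        # Prefer labels other than the last one used; fall back if none are left.
        candidates = [name for name in remaining if name != last_label] or list(remaining)
        label = max(candidates, key=lambda name: len(remaining[name]))
        staggered.append(remaining[label].pop(0))
        if not remaining[label]:
            del remaining[label]
        last_label = label
    return staggered

# The clustered "bad" prompt above: three Negative labels in a row.
clustered = [
    ("The product works great!", "Positive"),
    ("The product doesn't work for our use case", "Negative"),
    ("The product is not good", "Negative"),
    ("The product doesn't satisfy our requirements", "Negative"),
]

print(longest_label_run(clustered))           # 3
print(longest_label_run(stagger(clustered)))  # 2
```

Note that reordering can only do so much here: with three Negative examples and one Positive, some repetition is unavoidable, so the better fix is usually to balance the labels as well.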

6. Don’t use too many examples

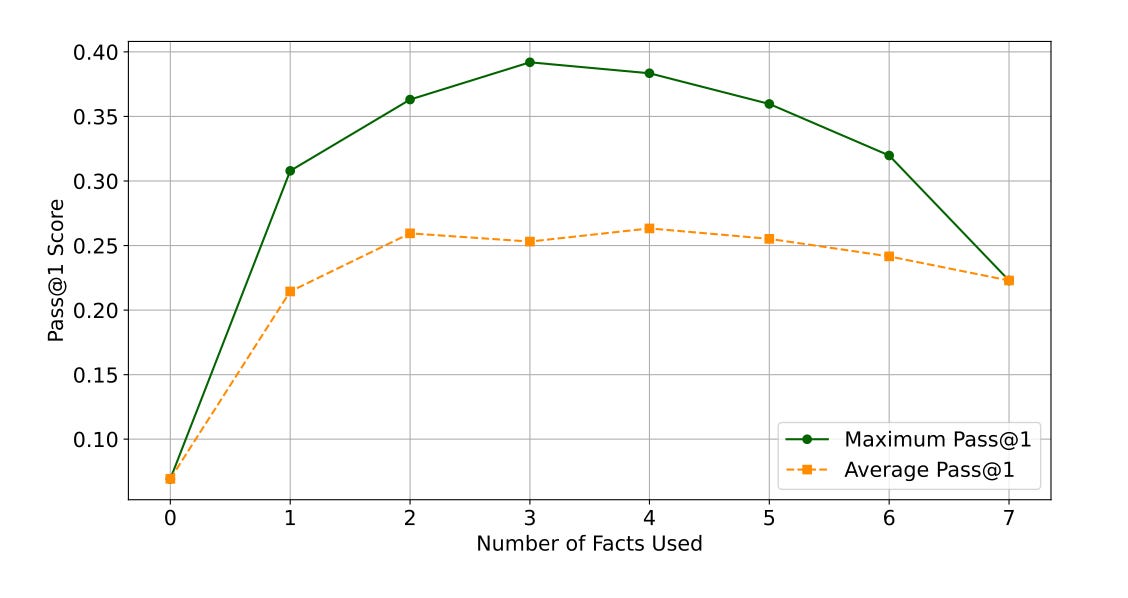

Performance eventually plateaus as you reach a certain number of examples, and it can even begin to degrade. When does this happen? Various papers suggest different answers, but here are a few examples:

The main takeaway I see here, and that I pass along to others, is that the majority of the gains come from including just a few examples. Start with 2-3 and see how things change. If you want to add more, try to keep the total under 8.
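If you want to find that plateau for your own task, you can sweep the number of examples and keep the smallest count that scores about as well as the best one. This is a sketch under assumptions: `sweep_example_counts` is a hypothetical helper, the default range of 2 to 7 just mirrors the rule of thumb above, and `toy_score` is a made-up stand-in for a real evaluation of your model.

```python
def sweep_example_counts(pool, score_fn, counts=range(2, 8), tolerance=0.01):
    """Return the smallest example count whose score is within tolerance of the best."""
    scores = {n: score_fn(pool[:n]) for n in counts if n <= len(pool)}
    if not scores:
        raise ValueError("pool is smaller than every requested example count")
    best = max(scores.values())
    chosen = min(n for n, score in scores.items() if score >= best - tolerance)
    return chosen, scores

def toy_score(examples):
    # Placeholder only: a curve that improves up to 4 examples and then goes flat.
    # Replace with accuracy measured on your own labeled test set.
    return min(len(examples), 4) / 4

# Example pool assembled from the reviews used earlier in this post.
pool = [
    ("The product works great!", "Positive"),
    ("The product doesn't work for our use case", "Negative"),
    ("The product is alright I guess", "Neutral"),
    ("LeBron James is the best basketball player ever", "Not applicable"),
    ("The product is awesome", "Positive"),
    ("The product is not good", "Negative"),
    ("The product's fantastic", "Positive"),
]

chosen, scores = sweep_example_counts(pool, toy_score)
print(chosen)  # 4
```

Picking the smallest count within a tolerance, rather than the absolute best score, keeps prompts shorter when extra examples add little.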

That’s it for the best practices! For more info on in-context learning, templates, and examples, you can check out our full guide here.

2 other papers I’m reading this week

DO LLMS 'KNOW' INTERNALLY WHEN THEY FOLLOW INSTRUCTIONS?

This paper looks into how LLMs process and follow user-provided instructions (prompts). The researchers identify a specific dimension within the models' input embedding space linked to instruction-following success.

By modifying representations along this dimension, they show that they can improve instruction-following success rates without sacrificing output quality.

They also tested how the phrasing of the prompt affected the model’s adherence to the instructions. These phrasing experiments are always really interesting to dive into, as they show how even simple changes to wording can impact output quality.

Paraphrase Types Elicit Prompt Engineering Capabilities

This paper also dives into how paraphrasing and changing the vocabulary used in prompts can change performance.

The researchers broke down paraphrase types into six categories (e.g., morphology, syntax, and lexicon) and analyzed how changing different parts of the prompt would impact performance. Adjusting seemingly minor things like the lexicon led to noticeable gains, up to 6.7% for certain models.

It’s still pretty crazy to see how just minor tweaks can lead to such a huge performance boost! This just reinforces how powerful prompt engineering can be.

Prompt template of the week

LLMCompare is a method to evaluate two article summaries. It can easily be adapted to handle any type of content. Access the prompt template here.

You can read more about LLMCompare and other evaluation methods in this paper here.

Happy prompting!

To help with prompt engineering, I developed a free Hugging Face Space:

https://huggingface.co/spaces/baconnier/prompt-plus-plus