In the same way that LLMs make great copilots for coding or writing, they can also be extremely helpful when it comes to prompt engineering.

This process is called meta prompting: using LLMs to help you generate and refine prompts. Rather than writing every character of your prompt from scratch, you can use meta prompting to get to a first iteration very quickly.

In this post we’ll look at some of the popular meta-prompting methods from the latest research, as well as a few tools that make it easy to get started with meta prompting.


Tools for Meta Prompting

Before we get into methodologies and frameworks, here are a few tools you can use to generate high-quality prompts quickly. For all of these tools, I like to think of the first generated prompt as a rough draft. From there you can test, iterate, and refine.

PromptHub’s Prompt Generator

We recently launched our prompt generator tool at PromptHub. It combines all the insights and techniques from our blog into one easy-to-use, free tool. Now you can create high-quality prompts tailored to your needs in just a few steps.

Model-specific prompts: Adjusts based on your model provider

Prompt engineering best practices by default: Just describe your task, and the tool takes care of the rest using prompt engineering principles

Free: Yes, it’s free to use!

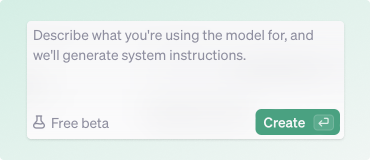

Anthropic's Prompt Generator

Optimized specifically for Anthropic models, this prompt generator is fast and easy to access via Anthropic’s dev console.

Anthropic has long been a leader in prompt engineering, and their generator is another example of that commitment.

OpenAI's Prompt Generator

Available in the OpenAI Playground, this tool generates system instruction prompts based on your task description. It works exclusively with OpenAI models, though it currently doesn’t support the new o1 models, which lack system instruction capability.

We also did a little bit of prompt injecting and were able to reveal what we believe to be the meta prompt behind OpenAI’s generator. You can check it out via this template in PromptHub here.

Now we’ll run through some of the most recent and most popular meta prompting frameworks coming out of research labs. We’ll keep it relatively high level. For more detail, including example implementations and templates, feel free to check out our full meta prompting guide here.

Meta-Prompting

This first method comes to us from a collaboration between OpenAI and Stanford. Aptly called Meta-Prompting, it uses a "meta-conductor" LLM to break a complex task into smaller, specialized steps and hand each one to an expert LLM. It reminds me a little of multi-persona prompting.

How It Works:

It all starts with the conductor meta prompt (see below), which outlines how to break down instructions and leverage other LLMs.

Based on the task, the meta-conductor generates prompts for expert models.

Each expert model completes a subtask based on tailored instructions.

The meta-conductor integrates the outputs, refining and verifying responses through a feedback loop.

Adjust and iterate until the final output meets requirements.

We created a template based on the system instructions used in the paper that you can test and add to your library in PromptHub.
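To make the conductor loop concrete, here is a rough Python sketch of the steps above. It is a simplification, not the paper's implementation: the `conductor` and `expert` callables stand in for real LLM calls, and the `EXPERT:` / `FINAL:` markers are a made-up protocol chosen to keep the example short.

```python
from typing import Callable, List

# Assumption: an "LLM" here is any function from a prompt string to a reply string.
LLM = Callable[[str], str]

def meta_prompt(task: str, conductor: LLM, expert: LLM, max_rounds: int = 5) -> str:
    """Let a conductor delegate subtasks to experts until it gives a final answer."""
    history: List[str] = [f"Task: {task}"]
    for _ in range(max_rounds):
        reply = conductor("\n".join(history))
        if reply.startswith("FINAL:"):
            return reply[len("FINAL:"):].strip()
        if reply.startswith("EXPERT:"):
            # The conductor wrote tailored instructions for a fresh expert call.
            instructions = reply[len("EXPERT:"):].strip()
            history.append(f"Expert output: {expert(instructions)}")
        else:
            history.append(f"Conductor note: {reply}")
    return history[-1]  # no final answer within the budget: return the last message

# Toy stand-ins so the sketch runs without an API key.
def fake_conductor(context: str) -> str:
    if "Expert output:" in context:
        last = context.rsplit("Expert output:", 1)[1].strip()
        return f"FINAL: {last}"
    return "EXPERT: You are an expert mathematician. Compute 17 * 3."

def fake_expert(instructions: str) -> str:
    return "51"

answer = meta_prompt("What is 17 * 3?", fake_conductor, fake_expert)
print(answer)
```

In a real setup you would swap the two fake functions for calls to your model provider, and the conductor's system message would carry the actual meta prompt.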

Learning from Contrastive Prompts (LCP)

LCP uses contrastive learning to learn from both good and bad prompts, helping the model identify patterns and generate more well-rounded prompts.

How It Works:

Based on a task, multiple prompt candidates are generated.

The model generates outputs for the various prompts.

The model identifies where prompts succeed or fail based on predefined success metrics.

Contrast good vs. bad prompts, summarizing the common characteristics of each.

Generate new, refined prompts based on these findings.

Iterate through the process until optimal performance is achieved.
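Here is a small sketch of that loop in Python. Treat it as an illustration under assumptions: in LCP the contrast and rewrite steps are done by an LLM, whereas here `contrast` is a toy word-overlap heuristic, and `run_model`, `score`, and `refine` are hypothetical callables you would supply.

```python
from typing import Callable, List, Tuple

def contrast(good: List[str], bad: List[str]) -> List[str]:
    """Toy contrast step: words found in every good prompt and in no bad prompt."""
    if not good:
        return []
    shared = set.intersection(*(set(p.lower().split()) for p in good))
    seen_in_bad = set().union(*(set(p.lower().split()) for p in bad))
    return sorted(shared - seen_in_bad)

def lcp(
    candidates: List[str],
    run_model: Callable[[str], str],
    score: Callable[[str], float],
    refine: Callable[[str, List[str]], str],
    rounds: int = 2,
    threshold: float = 0.5,
) -> Tuple[List[str], List[str]]:
    """Score prompts, contrast good vs. bad, and rewrite the bad ones."""
    traits: List[str] = []
    for _ in range(rounds):
        scored = [(p, score(run_model(p))) for p in candidates]
        good = [p for p, s in scored if s >= threshold]
        bad = [p for p, s in scored if s < threshold]
        if not bad:
            break  # every candidate already meets the success metric
        traits = contrast(good, bad)
        candidates = good + [refine(p, traits) for p in bad]
    return candidates, traits

# Toy stand-ins: the "model" only emits JSON when the prompt asks for it.
def toy_model(prompt: str) -> str:
    return '{"summary": "..."}' if "json" in prompt.lower() else "A plain sentence."

def toy_score(output: str) -> float:
    return 1.0 if output.startswith("{") else 0.0

def toy_refine(prompt: str, traits: List[str]) -> str:
    return f"{prompt} {' '.join(traits)}"

prompts = [
    "Summarize the text as json",
    "Summarize the text",
    "Return json with a summary field",
]
final_prompts, learned_traits = lcp(prompts, toy_model, toy_score, toy_refine)
print(learned_traits)
print(final_prompts)
```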

Automatic Prompt Engineer (APE)

Here is how APE works in a nutshell: generate a bunch of prompts, score them with a quantitative scoring function, generate new, semantically similar versions of the best ones, and select the prompt with the highest score.

How It Works:

Use input-output pairs to generate an initial set of prompt variants.

Evaluate each candidate prompt based on output performance, using a quantitative measure.

Rank and compare the prompts, identifying those that perform best.

Generate new, semantically similar, variations of the top-performing prompts.

Refine, iterate, and select the prompt variant with the highest score.
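As a rough sketch, the APE loop fits in a few lines of Python. Everything named here is an assumption for illustration: `propose` and `paraphrase` stand in for LLM calls, `run_model` stands in for the target model, and exact-match accuracy is just one possible scoring function.

```python
from typing import Callable, List, Tuple

Example = Tuple[str, str]  # (input, expected output)

def accuracy(prompt: str, run_model: Callable[[str, str], str], examples: List[Example]) -> float:
    """Fraction of examples the prompt gets exactly right."""
    return sum(run_model(prompt, x) == y for x, y in examples) / len(examples)

def ape(
    propose: Callable[[List[Example]], List[str]],
    paraphrase: Callable[[str], str],
    run_model: Callable[[str, str], str],
    examples: List[Example],
    rounds: int = 2,
    keep: int = 2,
) -> str:
    """Generate prompts, keep the top scorers, add variations, return the best."""
    def score(prompt: str) -> float:
        return accuracy(prompt, run_model, examples)

    pool = propose(examples)
    for _ in range(rounds):
        top = sorted(pool, key=score, reverse=True)[:keep]
        pool = top + [paraphrase(p) for p in top]
    return max(pool, key=score)

# Toy stand-ins: the "model" uppercases only when the prompt asks for it.
def toy_model(prompt: str, text: str) -> str:
    return text.upper() if "uppercase" in prompt.lower() else text

def toy_propose(examples: List[Example]) -> List[str]:
    return ["Repeat the word.", "Write the word in uppercase.", "Reverse the word."]

def toy_paraphrase(prompt: str) -> str:
    return "Please " + prompt[0].lower() + prompt[1:]

pairs = [("cat", "CAT"), ("dog", "DOG")]
best = ape(toy_propose, toy_paraphrase, toy_model, pairs)
print(best)
```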

PromptAgent

PromptAgent treats prompt generation and optimization as a planning problem and focuses on integrating expert-level, subject matter expert (SME) knowledge into the process to refine prompts iteratively.

How It Works:

Start with an initial prompt and target task.

Generate outputs and evaluate their quality.

Incorporate feedback from subject matter experts (SMEs) into the refinement process.

Grow the prompt space in a tree structure, prioritizing high-reward paths.

Continue refining prompts based on feedback loops and expert insights.
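To show what "growing a tree and prioritizing high-reward paths" can look like, here is a simplified sketch. Note the swap: it uses a plain best-first search with a priority queue rather than PromptAgent's actual planning algorithm, and `expand` (which would apply expert feedback to produce child prompts) and `reward` are hypothetical callables.

```python
import heapq
from typing import Callable, List, Tuple

def prompt_search(
    root: str,
    expand: Callable[[str], List[str]],
    reward: Callable[[str], float],
    max_expansions: int = 5,
) -> Tuple[str, float]:
    """Best-first search over prompts: always expand the highest-reward node next."""
    best_prompt, best_reward = root, reward(root)
    # heapq is a min-heap, so store negative rewards; the counter breaks ties.
    frontier = [(-best_reward, 0, root)]
    counter = 1
    for _ in range(max_expansions):
        if not frontier:
            break
        _, _, prompt = heapq.heappop(frontier)
        for child in expand(prompt):
            r = reward(child)
            heapq.heappush(frontier, (-r, counter, child))
            counter += 1
            if r > best_reward:
                best_prompt, best_reward = child, r
    return best_prompt, best_reward

# Toy stand-ins: "expert feedback" is a fixed checklist of missing instructions.
HINTS = ["cite units", "show steps", "state assumptions"]

def toy_reward(prompt: str) -> float:
    return sum(h in prompt.lower() for h in HINTS) / len(HINTS)

def toy_expand(prompt: str) -> List[str]:
    return [f"{prompt} Also {h}." for h in HINTS if h not in prompt.lower()]

best_prompt, best_reward = prompt_search("Solve the physics problem.", toy_expand, toy_reward)
print(best_reward, best_prompt)
```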

Conversational Prompt Engineering (CPE)

Potentially my favorite meta prompting method, CPE is a chat interface that helps users refine and create prompts through a conversation.

How It Works:

Select the target model and upload a file with input examples.

User and CPE engage in a back-and-forth chat to answer data-driven questions about output preferences.

Refine the initial prompt based on user feedback.

Generate outputs using the target model.

Review and adjust until all outputs are approved.

CPE generates a final few-shot prompt with approved examples.
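The back-and-forth above boils down to a review loop that ends in a few-shot prompt. Here is a minimal sketch, assuming three hypothetical callables: `generate` for the target model, `review` for the user's feedback (returning `None` when an output is approved), and `revise` for the step that folds feedback into the instruction.

```python
from typing import Callable, List, Optional, Tuple

def cpe_session(
    instruction: str,
    inputs: List[str],
    generate: Callable[[str, str], str],
    review: Callable[[str, str], Optional[str]],
    revise: Callable[[str, List[str]], str],
    max_turns: int = 5,
) -> Tuple[str, bool]:
    """Refine an instruction until every output is approved, then build a few-shot prompt."""
    approved = False
    outputs: List[str] = []
    for _ in range(max_turns):
        outputs = [generate(instruction, x) for x in inputs]
        notes = [n for n in (review(x, o) for x, o in zip(inputs, outputs)) if n]
        if not notes:
            approved = True
            break
        instruction = revise(instruction, notes)
    # If approved is False, the examples below were not signed off by the user.
    shots = "\n\n".join(f"Input: {x}\nOutput: {o}" for x, o in zip(inputs, outputs))
    return f"{instruction}\n\n{shots}", approved

# Toy stand-ins: the "user" wants bullet points, the "model" obeys when asked.
def toy_generate(instruction: str, text: str) -> str:
    summary = " ".join(text.split()[:3])
    return f"- {summary}" if "bullet" in instruction.lower() else summary

def toy_review(text: str, output: str) -> Optional[str]:
    return None if output.startswith("- ") else "Use bullet points."

def toy_revise(instruction: str, notes: List[str]) -> str:
    unique_notes = list(dict.fromkeys(notes))  # drop repeated feedback, keep order
    return f"{instruction} {' '.join(unique_notes)}"

reviews = ["The battery lasts two full days.", "Screen cracked after one week."]
few_shot_prompt, approved = cpe_session(
    "Summarize the review.", reviews, toy_generate, toy_review, toy_revise
)
print(few_shot_prompt)
```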

DSPy

DSPy is a programmatic framework that allows users to create, optimize, and manage complex pipelines of LLM calls by treating LLMs as modular components in a structured workflow.

How It Works:

Define DSPy modules with specific tasks (e.g., creative writing, sentiment analysis) and their input-output signatures.

Construct sequences of LLM calls for step-by-step processing.

Refine outputs with ChainOfThought modules, leveraging user feedback and scoring metrics.

Evaluate and adjust prompts with Teleprompter modules to improve quality iteratively.

In DSPy, LLMs serve a variety of roles, from generating initial prompts to evaluating outputs, refining those prompts, and learning from user feedback.
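To give a feel for the signature, module, and optimizer idea without needing an API key, here is a plain-Python analogy. To be clear, this does not use the DSPy library and none of these names are DSPy's API: `ToySignature`, `ToyModule`, and `bootstrap_demos` are invented for illustration, and the optimizer step is reduced to "keep training examples the module already gets right and reuse them as demonstrations".

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

@dataclass
class ToySignature:
    """Declares what goes in and what comes out, instead of a hand-written prompt."""
    instruction: str
    input_name: str
    output_name: str

@dataclass
class ToyModule:
    signature: ToySignature
    lm: Callable[[str], str]
    demos: List[Tuple[str, str]] = field(default_factory=list)

    def render(self, value: str) -> str:
        """Build the prompt from the signature plus any demonstrations."""
        sig = self.signature
        blocks = [sig.instruction]
        blocks += [f"{sig.input_name}: {x}\n{sig.output_name}: {y}" for x, y in self.demos]
        blocks.append(f"{sig.input_name}: {value}\n{sig.output_name}:")
        return "\n\n".join(blocks)

    def __call__(self, value: str) -> str:
        return self.lm(self.render(value)).strip()

def bootstrap_demos(
    module: ToyModule,
    trainset: List[Tuple[str, str]],
    metric: Callable[[str, str], bool],
    max_demos: int = 2,
) -> ToyModule:
    """Toy optimizer: reuse examples that pass the metric as few-shot demos."""
    kept = [(x, y) for x, y in trainset if metric(module(x), y)][:max_demos]
    return ToyModule(module.signature, module.lm, kept)

# Toy stand-in for a language model: a keyword check on the last review in the prompt.
def toy_lm(prompt: str) -> str:
    last_review = prompt.rsplit("Review:", 1)[1].lower()
    return "positive" if ("love" in last_review or "great" in last_review) else "negative"

sentiment = ToyModule(ToySignature("Classify the sentiment.", "Review", "Sentiment"), toy_lm)
train = [("I love it", "positive"), ("Terrible battery", "negative"), ("Great screen", "positive")]
compiled = bootstrap_demos(sentiment, train, metric=lambda pred, gold: pred == gold)
print(compiled.render("Great value"))
```

The point of the analogy is the separation of concerns: you declare the task once, and a separate optimization step decides what actually ends up in the prompt.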

TEXTGRAD: Textual Gradient-Based Optimization

TEXTGRAD builds on DSPy’s framework with a focus on "textual gradients": natural language feedback, from a user or an LLM, that guides each improvement to the prompt.

How It Works:

Start with a base version of the prompt.

A second LLM (or human) evaluates the output, providing detailed natural language feedback ("textual gradient").

Feedback and the original prompt are sent to another LLM to generate an improved version.

Repeat this iterative process until the desired output quality is achieved.
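Here is a minimal sketch of that feedback loop. It does not use the TextGrad library; `run_model`, `critic`, and `optimizer` are hypothetical callables standing in for the three roles described above, and a critic returning `None` is my shorthand for "the output is good enough".

```python
from typing import Callable, List, Optional, Tuple

def optimize_prompt(
    prompt: str,
    run_model: Callable[[str], str],
    critic: Callable[[str, str], Optional[str]],
    optimizer: Callable[[str, str], str],
    steps: int = 3,
) -> Tuple[str, List[str]]:
    """Repeatedly critique the output and rewrite the prompt from that critique."""
    feedback_log: List[str] = []
    for _ in range(steps):
        output = run_model(prompt)
        feedback = critic(prompt, output)  # the "textual gradient"
        if feedback is None:
            break  # critic is satisfied, stop early
        feedback_log.append(feedback)
        prompt = optimizer(prompt, feedback)  # apply the feedback to the prompt
    return prompt, feedback_log

# Toy stand-ins so the loop runs offline.
def toy_model(prompt: str) -> str:
    return "Step 1: ... Step 2: ..." if "step by step" in prompt.lower() else "x = 4"

def toy_critic(prompt: str, output: str) -> Optional[str]:
    return None if output.startswith("Step") else "Reason step by step."

def toy_optimizer(prompt: str, feedback: str) -> str:
    return f"{prompt} {feedback}"

final_prompt, feedback_log = optimize_prompt(
    "Solve the equation.", toy_model, toy_critic, toy_optimizer
)
print(final_prompt)
```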

Wrapping up

Phew - that’s a lot of info on meta-prompting. For more details, specifically implementation examples and templates, feel free to check out our full guide here.