LLMs get lost in multi-turn conversations after just 2 messages

How you can make sure your agents don't get lost

If you’ve ever had a conversation with ChatGPT and felt like it misremembered something you mentioned earlier, you’re not alone, and we now have some research to back it up.

Researchers from Microsoft and Salesforce just released a paper, “LLMs Get Lost In Multi-Turn Conversation,” that tested how LLMs perform in two situations:

When all the context for a task is included upfront in a single prompt

When the context is revealed gradually over a multi-turn conversation

Accuracy dropped by ~40% across the board when the context was spread over multiple messages. 40%!!! This includes “smart” models like Gemini 2.5 Pro and Claude 3.7 Sonnet.

Why this happens, what you can do about it, and more below. Let’s dive in!

How they set up the experiment

Most benchmarks test LLMs with single-shot prompts that are pretty straightforward, for example:

Jay is making snowballs to prepare for a snowball fight with his sister. He can build 20 snowballs in an hour, but 2 melt every 15 minutes. How long will it take before he has 60 snowballs?
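For reference, solving this takes every fact in the prompt at once (assuming the melting happens at a steady rate):

```latex
\begin{aligned}
r_{\text{net}} &= 20 - 2 \cdot \tfrac{60}{15} = 20 - 8 = 12 \text{ snowballs/hour} \\
t &= \tfrac{60}{12} = 5 \text{ hours}
\end{aligned}
```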

But a lot of LLM applications today, like ChatGPT, are conversational, with users disclosing information piece by piece.

So the researchers sliced prompts from popular datasets into shards. Each shard revealed one piece of information about the task.

The shards aren’t a word-for-word match to the original prompt, because the researchers used a four-step process to create them:

Segmentation: An LLM splits the full prompt into non-overlapping segments.

Rephrasing: Segments are reordered and reworded into conversational “shards.”

Verification: Automated side-by-side tests ensure no information is lost.

Manual Review: The authors perform a final inspection and make edits.

At each turn, the model receives one shard and responds; the response may be an answer attempt or a request for clarification.
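The turn-by-turn protocol can be sketched as a simple loop. This is a minimal illustration, not the researchers’ simulator: `run_sharded_conversation`, `respond` (a stand-in for the LLM call), and `is_answer_attempt` (a stand-in for whatever decides that a reply is an answer rather than a clarifying question) are hypothetical names, and the `role`/`content` message format is an assumption.

```python
from typing import Callable, Dict, List, Optional

Message = Dict[str, str]


def run_sharded_conversation(
    shards: List[str],
    respond: Callable[[List[Message]], str],
    is_answer_attempt: Callable[[str], bool],
) -> Dict[str, object]:
    """Reveal one shard per turn and record the model's reply each time.

    `respond` and `is_answer_attempt` are hypothetical placeholders for an
    LLM call and a reply classifier.
    """
    history: List[Message] = []
    first_attempt_turn: Optional[int] = None
    for turn, shard in enumerate(shards, start=1):
        # The user reveals exactly one new shard this turn.
        history.append({"role": "user", "content": shard})
        # The model sees the whole conversation so far and replies.
        reply = respond(history)
        history.append({"role": "assistant", "content": reply})
        # Remember the first turn where the model tried to answer.
        if first_attempt_turn is None and is_answer_attempt(reply):
            first_attempt_turn = turn
    return {"history": history, "first_attempt_turn": first_attempt_turn}
```

Recording the turn of the first answer attempt is what makes an analysis like the premature-answer finding possible.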

More experiment details

Tasks & Models: Six task types (code, SQL, math, actions, data-to-text, long-doc summarization) across 15+ LLMs

Scale: 200,000+ synthetic conversations

Metrics:

Performance (P): Overall accuracy

Aptitude (A₉₀): 90th-percentile score

Unreliability (U₉₀₋₁₀): 90th–10th percentile gap

Reliability (R): 100 – U₉₀₋₁₀ (consistency)
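The four metrics can be computed from the per-run scores of one model on one instruction. A minimal sketch, assuming scores on a 0 to 100 scale and linear-interpolation percentiles; the percentile convention and the function names are assumptions, not taken from the paper.

```python
import statistics
from typing import Dict, List


def percentile(scores: List[float], q: float) -> float:
    """Linear-interpolation percentile (the same convention as NumPy's default)."""
    xs = sorted(scores)
    pos = (len(xs) - 1) * q / 100
    lo = int(pos)
    hi = min(lo + 1, len(xs) - 1)
    return xs[lo] + (xs[hi] - xs[lo]) * (pos - lo)


def reliability_metrics(scores: List[float]) -> Dict[str, float]:
    """Performance, aptitude, unreliability, and reliability for a set of runs."""
    p = statistics.mean(scores)             # P: average score
    a90 = percentile(scores, 90)            # A90: best-case (90th percentile)
    u = a90 - percentile(scores, 10)        # U90-10: spread between good and bad runs
    r = 100 - u                             # R: higher means more consistent
    return {"P": p, "A90": a90, "U90_10": u, "R": r}
```

A model can have a high aptitude but a low reliability: its best runs are good, yet its 10th-percentile runs are far worse.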

Methods tested

FULL: Single-turn prompt with the complete instruction, exactly from the dataset

SHARDED: Instruction split into shards, revealed one per turn.

CONCAT: All shards concatenated into one prompt (not multi-turn)

RECAP: SHARDED plus one final turn that repeats all previous shards.

SNOWBALL: SHARDED with all prior shards prepended at each turn.
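One way to sketch what the user says at each turn under each method is shown below. It is illustrative only: `user_turns` is a hypothetical helper, joining shards with newlines and the “To recap:” wording are assumptions, and the assistant replies that would sit between turns are omitted.

```python
from typing import List, Optional


def user_turns(method: str, shards: List[str], full_instruction: Optional[str] = None) -> List[str]:
    """Return the user message sent at each turn for a given method."""
    if method == "FULL":
        # The original, unsharded instruction in a single turn.
        if full_instruction is None:
            raise ValueError("FULL needs the original instruction")
        return [full_instruction]
    if method == "CONCAT":
        # Every shard in one single-turn prompt.
        return ["\n".join(shards)]
    if method == "SHARDED":
        # One shard per turn.
        return list(shards)
    if method == "RECAP":
        # SHARDED, plus one final turn restating every shard.
        return list(shards) + ["To recap:\n" + "\n".join(shards)]
    if method == "SNOWBALL":
        # Each turn restates all earlier shards before the new one.
        return ["\n".join(shards[: i + 1]) for i in range(len(shards))]
    raise ValueError(f"unknown method: {method}")
```

Comparing CONCAT with SHARDED isolates the effect of the back-and-forth, since both contain exactly the same shards.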

Experiment results

SHARDED: ~39% average accuracy drop compared to FULL.

CONCAT: Recovers to ~95% of FULL, showing that sharding itself doesn’t lose information; the drop comes from the back-and-forth between user and LLM.

All model types affected: Small models, large models, and reasoning models all degrade similarly

The researchers then tested the RECAP and SNOWBALL methods on GPT-4o and GPT-4o-mini. See the methods list above if you want a reminder of how they work.

The story is the same for both: RECAP and SNOWBALL outperform SHARDED but still fall short of FULL and CONCAT.

Why models fail at multi-turn conversations

The researchers identified four reasons why models fail during these multi-turn conversations.

1. Premature Answer Attempts

Models that answer in the first 20% of turns average 30.9% accuracy.

Waiting until the last 20% boosts accuracy to 64.4%, as more information reduces early mistakes.
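An analysis like this can be sketched by bucketing conversations by when the model first attempted an answer. The bucketing rule and the `accuracy_by_first_attempt` helper are assumptions for illustration; the paper’s exact procedure may differ.

```python
from statistics import mean
from typing import List, Optional, Tuple


def accuracy_by_first_attempt(
    runs: List[Tuple[int, int, float]], n_bins: int = 5
) -> List[Optional[float]]:
    """Average score, bucketed by how early the first answer attempt came.

    runs: (first_attempt_turn, total_turns, score) per conversation, turns
    counted from 1. With n_bins=5, bin 0 is the first 20% of turns and
    bin 4 is the last 20%. Empty bins are reported as None.
    """
    bins: List[List[float]] = [[] for _ in range(n_bins)]
    for first_turn, total_turns, score in runs:
        # Integer arithmetic avoids float rounding at bin edges.
        idx = (first_turn - 1) * n_bins // total_turns
        bins[idx].append(score)
    return [mean(b) if b else None for b in bins]
```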

2. Verbosity Inflation (Answer Bloat)

LLMs layer on incorrect assumptions from early shards and never invalidate them.

Final responses bloat (e.g., code outputs grow from ~700 to 1,400 characters), packing in more errors.

3. Lost-in-Middle Turns

Models strongly favor content revealed in the first and last shards, often ignoring middle information.

In sharded summaries, citations for mid-conversation documents drop below 20%, mirroring long-context “middle blindness.”

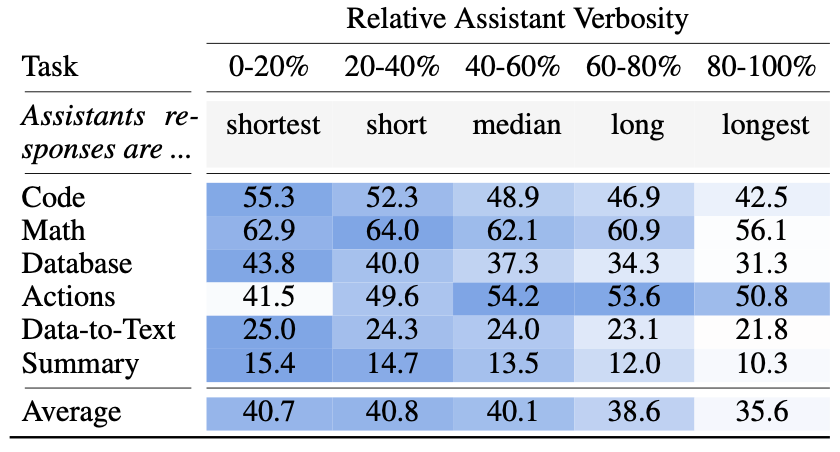
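One way to measure this effect is to compute, for each turn, how often documents revealed at that turn are cited in the final summary. This is a hypothetical sketch (`citation_rate_by_turn` and its input format are assumptions), not the researchers’ evaluation code.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple


def citation_rate_by_turn(
    conversations: List[Tuple[Dict[str, int], Set[str]]],
) -> Dict[int, float]:
    """Fraction of documents revealed at each turn that the summary cites.

    conversations: one (doc_id -> turn it was revealed, cited doc_ids)
    pair per sharded summarization conversation.
    """
    revealed: Dict[int, int] = defaultdict(int)
    cited: Dict[int, int] = defaultdict(int)
    for reveal_turn, cited_docs in conversations:
        for doc_id, turn in reveal_turn.items():
            revealed[turn] += 1
            if doc_id in cited_docs:
                cited[turn] += 1
    return {turn: cited[turn] / revealed[turn] for turn in sorted(revealed)}
```

A dip in the middle turns relative to the first and last turns is the pattern described above.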

4. Over-Verbosity Harms Performance

Across five of six tasks, the shortest responses outperform the longest by 10–50%.

Longer outputs introduce more assumptions that derail accuracy, and they slow users down.

Advice for builders

There are two main things you can do to avoid the downsides of LLMs struggling with multi-turn conversations:

Test multi-turn flows: Explicitly include multi-turn scenarios in your test cases and evaluation suites.

Consolidate before generation: When you’re ready to generate an output, batch all the collected user context into one prompt and send it as a fresh LLM call instead of continuing to drip-feed information.
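Consolidation can be as simple as rebuilding the request from the user’s own messages. A minimal sketch, assuming the common `role`/`content` chat message format; `consolidate` is a hypothetical helper and makes no API call, so pass its output to whichever chat client you use.

```python
from typing import Dict, List

Message = Dict[str, str]


def consolidate(history: List[Message], system_prompt: str) -> List[Message]:
    """Build a fresh, single-turn request from a multi-turn conversation.

    Only the user's messages are kept; earlier assistant replies are
    dropped so that premature, possibly wrong assumptions don't carry over.
    """
    user_facts = [m["content"] for m in history if m["role"] == "user"]
    combined = "\n".join(f"- {fact}" for fact in user_facts)
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": "Here is everything I need:\n" + combined},
    ]
```

Dropping the assistant turns is a design choice made here; if earlier replies contain information the user confirmed, you may want to keep those facts too.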