How to actually use Chain-of-Thought prompting

Templates, examples, and videos

Before we get into everything: for those who prefer hearing me blab over reading my writing, here’s a video covering everything I’m about to say.

Chain-of-Thought (CoT) prompting has always felt like something that is commonly talked about without anyone really getting into the specifics of what it actually is. This is partly because, unlike other prompting methods (like chain-of-density), there are a variety of ways to implement CoT.

So today we’ll go over some of the most popular (and effective) ways to implement CoT.

“Think step-by-step” - Zero-shot chain of thought

Perhaps the most popular, and easiest, way to implement CoT is to add a phrase to your prompt that instructs the model to break the problem down into smaller sub-steps (for example, “Let’s think step by step”).
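Here’s a minimal sketch of what that looks like in practice. It just builds a prompt string; the trigger phrase is an assumption you should swap for whatever works best on your model, and `zero_shot_cot` is a hypothetical helper name, not part of any library.

```python
# Assumed default trigger phrase; replace it with one you've tested on your model.
DEFAULT_COT_TRIGGER = "Let's think step by step."


def zero_shot_cot(question: str, trigger: str = DEFAULT_COT_TRIGGER) -> str:
    """Build a zero-shot CoT prompt: the question, then a reasoning trigger."""
    # The trigger sits at the start of the answer slot, so the model's
    # continuation begins with its reasoning rather than a bare answer.
    return f"Q: {question.strip()}\nA: {trigger}"


prompt = zero_shot_cot("A shop sells pens at $3 each. How much do 4 pens cost?")
print(prompt)
```

The whole technique lives in that one appended line, which is why it’s so easy to try first.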

It’s important to note that the instruction that best promotes reasoning can vary from model to model. For example, researchers ran a range of tests to find the top-performing chain-of-thought instruction for each of several models (source: Large Language Models As Optimizers).

The results are below.

Look at just how different the instructions are for GPT-4 compared to PaLM 2!

A friendly reminder that one size does not fit all when it comes to prompt engineering.
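One way to act on this is to keep a per-model lookup of CoT instructions instead of hard-coding a single phrase. This is a sketch under stated assumptions: the model keys and instruction strings are hypothetical placeholders (they are NOT the instructions reported in the paper), and the fallback phrase is just a common default.

```python
# Hypothetical placeholders: NOT the instructions from the paper.
# Fill each value with the instruction that scored best in your own tests.
COT_INSTRUCTIONS = {
    "gpt-4": "<best CoT instruction you found for GPT-4>",
    "palm-2": "<best CoT instruction you found for PaLM 2>",
}

# Generic instruction used for any model you haven't tuned yet.
FALLBACK_INSTRUCTION = "Let's think step by step."


def cot_instruction_for(model: str) -> str:
    """Look up a model-specific CoT instruction, falling back to a generic one."""
    return COT_INSTRUCTIONS.get(model.lower(), FALLBACK_INSTRUCTION)


def build_prompt(model: str, question: str) -> str:
    """Append the model's CoT instruction after the question."""
    return f"{question.strip()}\n\n{cot_instruction_for(model)}"


print(build_prompt("some-untuned-model", "How many days are in 3 weeks?"))
```

Keeping the instructions in data rather than in the prompt text makes it cheap to re-test them whenever you switch models.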

Using examples - few-shot chain of thought

Few-shot prompting means including examples in your prompt to show the model what its outputs should look like.

Few-shot prompting is an easy way to improve prompt performance on almost any type of task, including reasoning tasks. For chain of thought, the examples should include the reasoning steps themselves, not just the final answers. Below are a few examples from the original chain of thought paper.
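If you’d rather assemble few-shot CoT prompts programmatically, here’s a minimal sketch. The two exemplars are illustrative ones written for this sketch (not taken from the paper), and the `Q:`/`A:` layout and the “The answer is …” suffix are assumptions; the key point is that each exemplar carries its worked reasoning.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Exemplar:
    question: str
    reasoning: str  # the worked steps: this is what makes it chain of thought
    answer: str


# Illustrative exemplars written for this sketch, not from the CoT paper.
EXEMPLARS = (
    Exemplar(
        question="Sam has 3 boxes with 4 apples each. He eats 2 apples. How many are left?",
        reasoning="3 boxes of 4 apples is 3 * 4 = 12 apples. After eating 2, 12 - 2 = 10.",
        answer="10",
    ),
    Exemplar(
        question="A train leaves at 2:15 pm and the trip takes 50 minutes. When does it arrive?",
        reasoning="2:15 pm plus 45 minutes is 3:00 pm. Another 5 minutes makes 3:05 pm.",
        answer="3:05 pm",
    ),
)


def few_shot_cot(question: str, exemplars: tuple[Exemplar, ...] = EXEMPLARS) -> str:
    """Stack worked examples (reasoning included) ahead of the new question."""
    blocks = [
        f"Q: {ex.question}\nA: {ex.reasoning} The answer is {ex.answer}."
        for ex in exemplars
    ]
    # Leave the final answer open so the model imitates the reasoning format.
    blocks.append(f"Q: {question}\nA:")
    return "\n\n".join(blocks)


print(few_shot_cot("What is 7 + 5?"))
```

Because the model tends to mirror the exemplars, their reasoning style (terse arithmetic here) largely sets the style of the answer you get back.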

Think abstractly, then solve the problem

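Step-Back prompting instructs the model to first step back and think about the problem more abstractly (for example, by identifying the high-level concept or principle involved) before answering the question. This lets the model do some reasoning in the same streamed output, before it commits to an answer.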

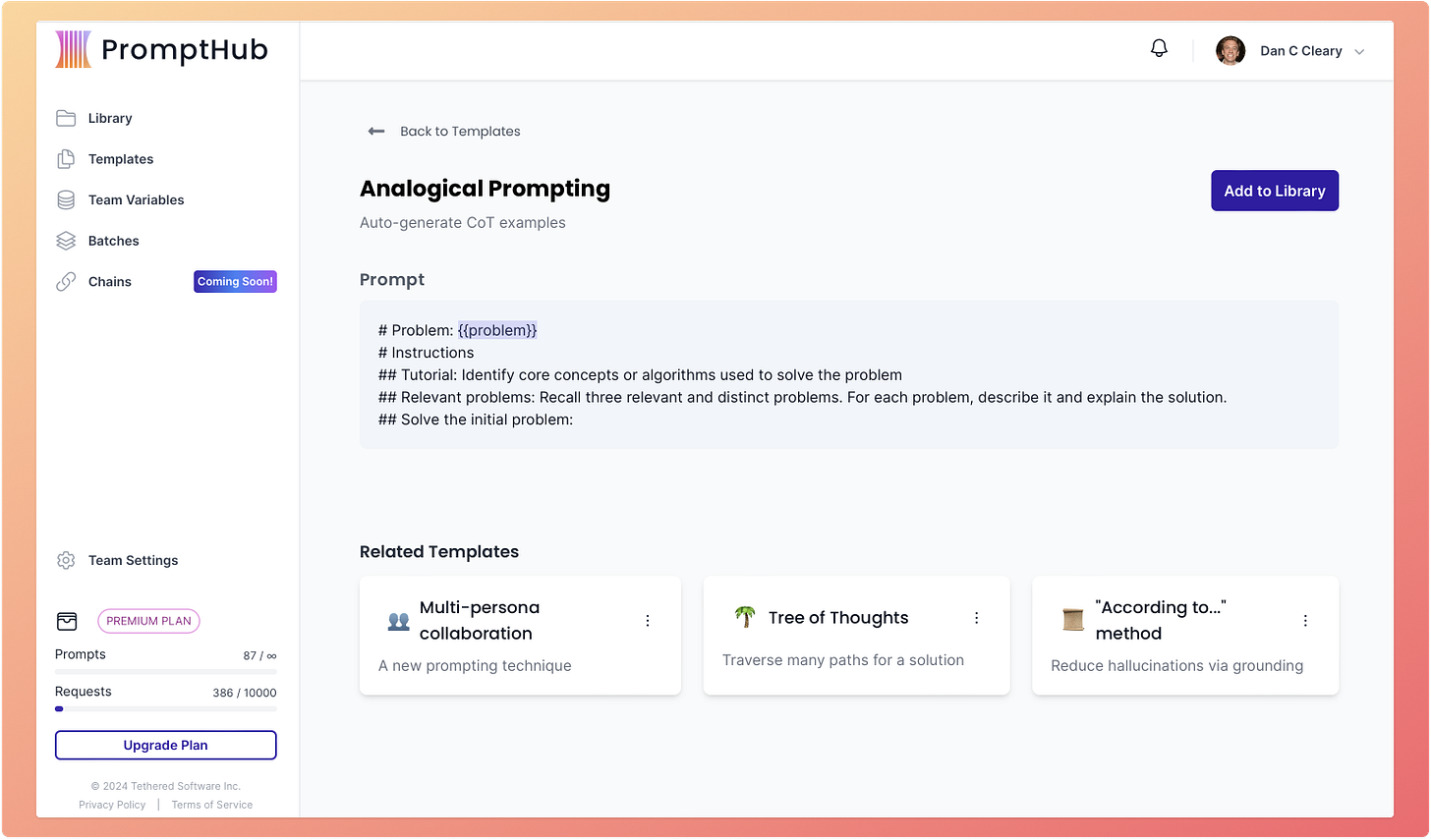
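A rough, single-prompt version of the idea might look like the sketch below. This is an assumption-laden approximation, not the exact prompt from the Step-Back paper: the template wording and the `step_back_prompt` helper are hypothetical.

```python
# Hypothetical template: a single-prompt approximation of Step-Back prompting.
STEP_BACK_TEMPLATE = """\
Before answering, take a step back.
1. State the general concept or principle this question depends on.
2. Briefly explain that principle.
3. Use it to reason through the question, then give the final answer.

Question: {question}
"""


def step_back_prompt(question: str) -> str:
    """Fill the template so the abstraction step comes before the answer."""
    return STEP_BACK_TEMPLATE.format(question=question.strip())


print(step_back_prompt("What happens to the pressure of an ideal gas if its temperature doubles at fixed volume?"))
```

Putting the abstraction step first in the instructions is what nudges the model to reason about the principle before it reaches for an answer.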

Analogical prompting

Analogical prompting helps solve the problem of “I want to use few-shot chain of thought prompting, but I don’t want to spend the time writing out examples.”

It has the model generate its own relevant examples, along with their reasoning chains, before solving the problem at hand.

This differs from a typical few-shot approach in that the examples appear in the output rather than in the prompt. Generating them means more output tokens, which leads to higher cost and latency, but it could improve reasoning since the examples and the solution are produced in one streamed output.
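Here’s a minimal sketch of an analogical prompt. The wording paraphrases the general idea (recall a few related problems, then solve the original one); it is an assumption, not the exact template from the analogical prompting paper, and `analogical_prompt` and `num_examples` are hypothetical names.

```python
def analogical_prompt(problem: str, num_examples: int = 3) -> str:
    """Ask the model to self-generate solved related problems, then solve the target."""
    if num_examples < 1:
        raise ValueError("num_examples must be at least 1")
    return (
        f"# Problem:\n{problem.strip()}\n\n"
        "# Instructions:\n"
        # Step 1: the model writes its own exemplars (this replaces hand-written few-shot examples).
        f"## Relevant problems: Recall {num_examples} relevant and distinct problems. "
        "For each one, describe the problem and explain its solution step by step.\n"
        # Step 2: the model solves the original problem using those exemplars.
        "## Solve the initial problem: Using the insights from those problems, "
        "solve the initial problem step by step and state the final answer.\n"
    )


print(analogical_prompt("Find the area of a square whose diagonal is 6 cm.", num_examples=2))
```

`num_examples` is the main cost lever here: every self-generated example adds output tokens, so fewer examples means lower cost and latency.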

We covered the rest of the methods in our longer post on the PromptHub blog, if you want to see more examples and templates!