How prompt engineering differs with reasoning versus non-reasoning models

Since OpenAI released its reasoning models, o1-mini and o1-preview, in September, a common question has been: “When should I use a reasoning model versus a non-reasoning model?”

A natural follow-up question is: “Should my prompts be different? If so, in what way?”

We’ve spent a lot of time testing things out, and a recent flurry of papers has provided some great data on both of these questions.

We’ll answer both of those initial questions and more.

If you’re more of a video person, here is the video version, which goes into some depth.

The full-length post is also available on our blog here: Prompt Engineering with Reasoning Models.

How prompt engineering differs with reasoning models

First, we’ll dive into how prompt engineering differs when using a reasoning model. We’ll start with the data in a recent paper: Do Advanced Language Models Eliminate the Need for Prompt Engineering in Software Engineering?

In this paper, the researchers tested o1-mini and GPT-4o across three code-related tasks: generation, translation, and summarization.

Here are the most important takeaways:

Overall, the prompt engineering methods barely affected o1-mini’s performance, and sometimes they decreased it

The prompt engineering methods boosted GPT-4o’s performance more than o1-mini’s

With some prompt engineering, GPT-4o was able to reach o1-mini’s level of performance
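If you want to run a similar comparison on your own tasks, the core bookkeeping is simple: score each model with a plain zero-shot baseline and with each prompt engineering method, then look at the difference. Below is a minimal Python sketch of that step. The function name and the `"zero-shot"` baseline key are our own assumptions, and the scores you pass in should be your own measurements, not the paper’s.

```python
# Minimal sketch: how much does each prompt engineering method help each model?
# Assumption: scores[model][method] holds your own accuracy (or similar metric)
# for that model/method pair, measured on the same task set.

def gains_over_baseline(scores: dict[str, dict[str, float]],
                        baseline: str = "zero-shot") -> dict[str, dict[str, float]]:
    """Return, per model, each method's score minus the baseline method's score."""
    gains = {}
    for model, by_method in scores.items():
        base = by_method[baseline]
        gains[model] = {
            method: round(score - base, 4)  # positive = helped, negative = hurt
            for method, score in by_method.items()
            if method != baseline
        }
    return gains
```

Passing in something like `{"o1-mini": {"zero-shot": ..., "few-shot": ...}, "gpt-4o": {...}}` gives the per-method gain or loss for each model, which makes a pattern like the one above (large gains for GPT-4o, small or negative ones for o1-mini) easy to spot.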

The next paper we’ll take a look at comes from Microsoft: From Medprompt to o1: Exploration of Run-Time Strategies for Medical Challenge Problems and Beyond

This is an update to a previous paper (that we covered last year).

In the original paper, the researchers developed a prompt engineering framework called Medprompt. GPT-4 + Medprompt outperformed LLMs that were fine-tuned on medical data across a variety of medical datasets.

The researchers updated the paper by testing the framework with o1-preview.

Below is a diagram showing the various parts of the Medprompt framework and its related performance boosts when using GPT-4.

Below are the results from the models tested on a variety of medical datasets.

As you can see, 0-shot prompting with o1-preview outperformed GPT-4o, even when GPT-4o used the Medprompt framework.
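One component of the original Medprompt framework is a choice-shuffling ensemble: the same multiple-choice question is asked several times with the answer options in a different order, and the most common answer wins, which reduces position bias. Here is a simplified Python sketch of just that step. The `ask` callable is a hypothetical stand-in for your model call, and Medprompt’s own version also combines each run with self-generated chain-of-thought.

```python
import random
from collections import Counter
from typing import Callable

# Hypothetical model wrapper: given a question and an ordered list of options,
# it returns the index of the option the model picked.
AskFn = Callable[[str, list[str]], int]

def choice_shuffle_ensemble(question: str, options: list[str], ask: AskFn,
                            runs: int = 5, seed: int = 0) -> str:
    """Ask the same question with shuffled option orders; return the majority answer."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    rng = random.Random(seed)  # seeded so the shuffles are reproducible
    votes = Counter()
    for _ in range(runs):
        shuffled = options[:]
        rng.shuffle(shuffled)
        picked = ask(question, shuffled)
        # Vote on the option text, not its position, so answers from
        # differently ordered runs are counted together.
        votes[shuffled[picked]] += 1
    return votes.most_common(1)[0][0]
```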

Next, the researchers isolated each prompt engineering component and tested how it affected o1-preview’s performance.

As you can see from the last two bar charts, few-shot prompting degraded performance!

OpenAI gives a related warning in its documentation:

“Limit additional context in retrieval-augmented generation (RAG): When providing additional context or documents, include only the most relevant information to prevent the model from overcomplicating its response.”

I wouldn’t go as far as to say that few-shot prompting ALWAYS degrades performance with a reasoning model, but it’s certainly something to keep an eye on.

Would including just 1-2 examples in the prompt strike a balance by enhancing output quality without overcomplicating the response?
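A practical way to answer that for your own use case is to run the same task with zero, one, two, and five examples and compare the results. Here is a minimal Python helper for building those prompt variants. The `Input:`/`Output:` layout and the function name are assumptions for illustration, not something taken from the papers.

```python
# Hypothetical helper for testing how many examples a reasoning model should see.
def build_prompt(task: str, examples: list[tuple[str, str]], k: int) -> str:
    """Build a prompt from the first k (input, output) examples; k=0 is zero-shot."""
    parts = [f"Input: {inp}\nOutput: {out}" for inp, out in examples[:k]]
    parts.append(f"Input: {task}\nOutput:")  # the actual task always comes last
    return "\n\n".join(parts)
```

Looping over `k in (0, 1, 2, 5)` and scoring each resulting prompt with your reasoning model shows where, if anywhere, additional examples stop helping.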

One last interesting finding from the Medprompt paper is about the relationship between reasoning tokens and performance.

The researchers tested two prompt templates across the medical datasets:

A prompt that instructs the model to respond quickly (generating fewer reasoning tokens)

Quick Response:

Please answer the following question as quickly as possible. We have narrowed down the possibilities to four different answers. I am in an emergency, and speed is of utmost importance. It is more important to answer quickly than it is to analyze too carefully. Return just the answer as quickly as possible.

------

# QUESTION

{{question}}

# ANSWER CHOICES

{{answer choices}}

------

Please remember to answer quickly and succinctly. Time is of the essence!

A prompt that instructs the model to reason carefully (generating more reasoning tokens)

Extended Reasoning:

Please answer the following multiple choice question. Take your time and think as carefully and methodically about the problem as you need to. I am not in a rush for the best answer; I would like you to spend as much time as you need studying the problem. When you’re done, return only the answer.

------

# QUESTION

{{question}}

# ANSWER CHOICES

{{answer choices}}

------

Remember, think carefully and deliberately about the problem. Take as much time as you need. I will be very sad if you answer quickly and get it wrong.
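Both templates use `{{question}}` and `{{answer choices}}` placeholders. If you want to reuse them, a small renderer like the Python sketch below fills the placeholders and fails loudly if one is left without a value. The function names and the lettered formatting of answer choices are our own choices for illustration.

```python
import re

# Matches {{name}} placeholders; names may contain spaces, e.g. {{answer choices}}.
PLACEHOLDER = re.compile(r"\{\{\s*([^{}]+?)\s*\}\}")

def render(template: str, values: dict[str, str]) -> str:
    """Fill every {{name}} placeholder in template from values.

    Raises KeyError for a placeholder with no value, so a half-filled
    prompt never gets sent to the model.
    """
    def fill(match: re.Match) -> str:
        key = match.group(1)
        if key not in values:
            raise KeyError(f"no value for placeholder: {key}")
        return values[key]

    return PLACEHOLDER.sub(fill, template)

def format_choices(choices: list[str]) -> str:
    """Hypothetical helper: list options as 'A. ...', 'B. ...', one per line."""
    return "\n".join(f"{chr(ord('A') + i)}. {c}" for i, c in enumerate(choices))
```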

Here were the results:

As you can see, there appears to be a positive correlation between the number of reasoning tokens and accuracy. This also aligns with what OpenAI noted in the release post about the reasoning models:

“We have found that the performance of o1 consistently improves with more reinforcement learning (train-time compute) and with more time spent thinking (test-time compute).”

Putting all of that together, here are a few best practices:

Few-shot prompting with five examples can reduce the performance of reasoning models by overcomplicating the response

Reasoning models perform best with straightforward, zero-shot prompts

For non-reasoning models, prompt engineering methods can raise performance to the level of their reasoning counterparts

When should you use a reasoning model

Here is a very simple framework to help decide when you should choose a reasoning model, courtesy of the paper, Do Advanced Language Models Eliminate the Need for Prompt Engineering in Software Engineering?

It all depends on the number of reasoning steps:

For CoT tasks with five or more steps, o1-mini outperforms GPT-4o by 16.67% (code generation task).

For CoT tasks under five steps, o1-mini's advantage shrinks to 2.89%.

For simpler tasks with fewer than three steps, o1-mini underperformed GPT-4o in 24% of cases due to excessive reasoning.

So if the task requires five or more reasoning steps, a reasoning model is the way to go.
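Here is that rule of thumb written as a tiny Python routing function. The step thresholds come from the numbers quoted above for o1-mini versus GPT-4o on code tasks; treat them as a starting point rather than a rule for every model or domain. The `"either"` bucket for three or four steps is our own addition.

```python
# Thresholds taken from the o1-mini vs GPT-4o results quoted above (code tasks).
# They are a heuristic starting point, not a guarantee for other models or domains.

def pick_model_type(estimated_steps: int) -> str:
    """Route a task by its estimated number of reasoning steps."""
    if estimated_steps >= 5:
        return "reasoning"      # largest reported gap (16.67% on code generation)
    if estimated_steps < 3:
        return "non-reasoning"  # reasoning model underperformed in 24% of cases
    return "either"             # small reported advantage; cost/latency may decide
```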

How can you determine the number of reasoning steps? Plug the prompt into ChatGPT and see!
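If you ask the model to think step by step and number its steps, you can count them automatically instead of by eye. A minimal Python sketch, assuming the response numbers its steps as `Step 1:`, `1.`, or `1)` at the start of a line; unnumbered reasoning won’t be counted, so treat the result as a rough estimate. The function name is hypothetical.

```python
import re

# Assumption: steps start a line with "Step N", "N.", "N:" or "N)".
STEP_PATTERN = re.compile(r"^\s*(?:step\s+)?\d+\s*[.:)]", re.IGNORECASE | re.MULTILINE)

def count_reasoning_steps(response: str) -> int:
    """Count lines in a chain-of-thought response that begin with a step number."""
    return len(STEP_PATTERN.findall(response))
```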

Two other things to keep in mind:

Reasoning models have a harder time adhering to structured output requirements

Reasoning models sometimes 'leak' reasoning steps into their output
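A defensive pattern that helps with both issues is to pull the first JSON object out of the response, ignoring any leaked reasoning around it, and then check that the required keys are present. The Python sketch below uses only the standard library’s `json.JSONDecoder.raw_decode`; the function names are hypothetical, and for stricter validation you would swap in a proper schema validator.

```python
import json

def extract_json_object(text: str) -> dict:
    """Return the first JSON object embedded in a model response.

    Skips any leaked reasoning before it; raises ValueError if none is found.
    """
    decoder = json.JSONDecoder()
    start = text.find("{")
    while start != -1:
        try:
            # raw_decode parses one JSON value from the start of the string
            # and ignores whatever text follows it.
            obj, _ = decoder.raw_decode(text[start:])
            if isinstance(obj, dict):
                return obj
        except json.JSONDecodeError:
            pass  # this "{" was not the start of valid JSON; try the next one
        start = text.find("{", start + 1)
    raise ValueError("no JSON object found in response")

def missing_keys(obj: dict, required: set[str]) -> list[str]:
    """Return the required keys absent from obj (an empty list means OK)."""
    return sorted(required - set(obj))
```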

Prompt template of the week

About a year ago, we wrote about how to optimize long prompts. Much of the data came from this paper: Automatic Engineering of Long Prompts.

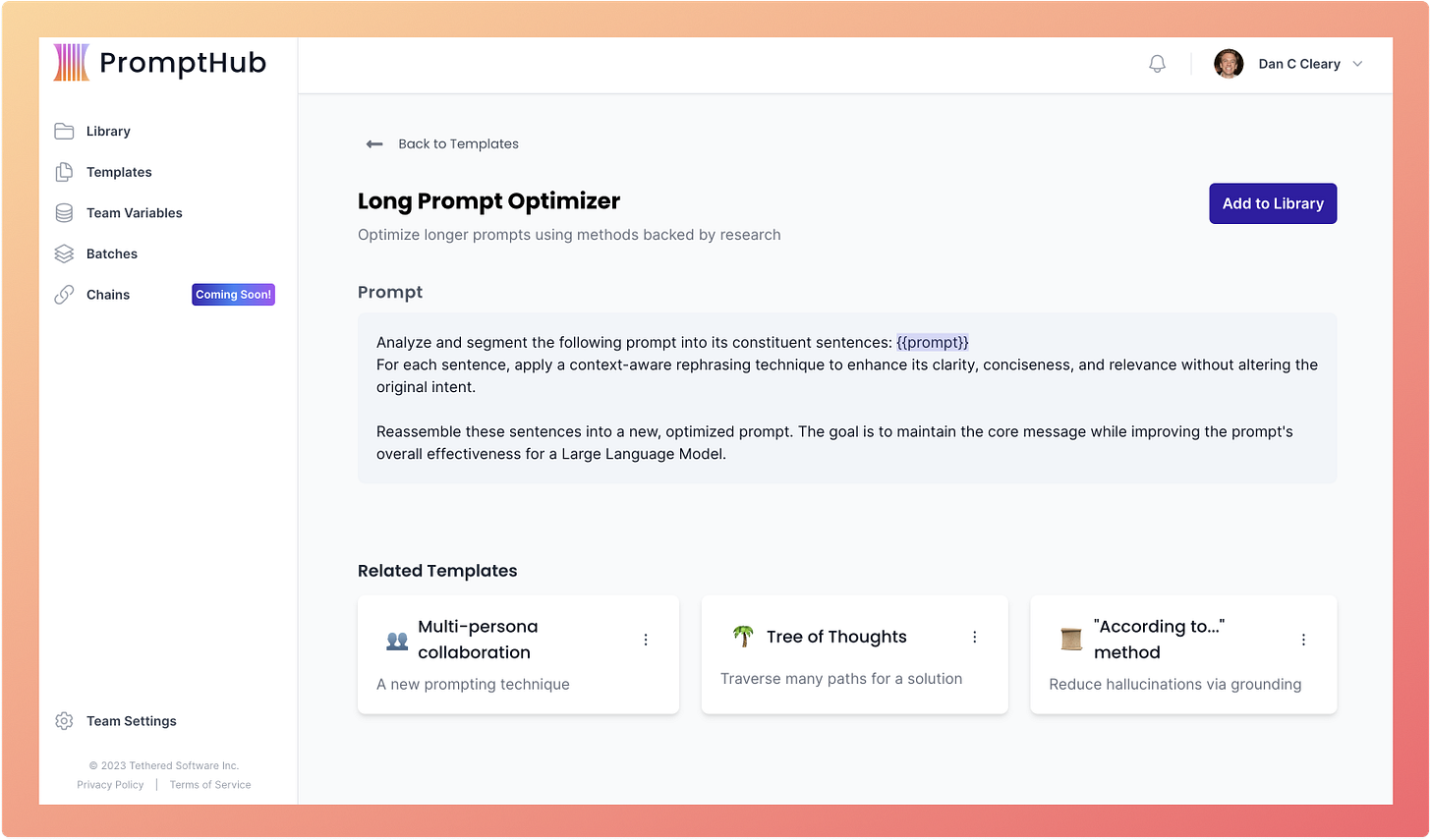

The researchers developed a framework that generates semantically similar versions of a prompt and tests them until it finds one that produces better outputs. Below is the single-shot template that I put together based on their framework.
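The underlying idea (rewrite a prompt into similar variants, keep whichever scores best, repeat) can be sketched as a simple greedy loop. This is a simplified hill-climbing version for illustration, not the paper’s exact search procedure. `rewrite` and `score` are hypothetical callables you would supply, for example an LLM call that paraphrases the prompt and an evaluation over a held-out set.

```python
from typing import Callable

# Hypothetical callables you supply:
#   rewrite(prompt) -> list of semantically similar variants (e.g. from an LLM)
#   score(prompt)   -> metric on a held-out eval set (higher is better)
def greedy_prompt_search(prompt: str,
                         rewrite: Callable[[str], list[str]],
                         score: Callable[[str], float],
                         rounds: int = 5) -> tuple[str, float]:
    """Repeatedly rewrite the current best prompt; keep a variant only if it scores higher."""
    best, best_score = prompt, score(prompt)
    for _ in range(rounds):
        candidates = rewrite(best)
        if not candidates:
            break
        top_score, top = max((score(c), c) for c in candidates)
        if top_score <= best_score:
            break  # no variant beat the current best, so stop early
        best, best_score = top, top_score
    return best, best_score
```

Stopping as soon as a round brings no improvement keeps evaluation costs down; a beam or evolutionary search explores more variants at a higher cost.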

That’s all for this week! I hope this gives you more tangible information when it comes to navigating reasoning versus non-reasoning models!