Recently, Google launched a new model, Gemini 2.5 Pro, and it’s been making waves. I spent a lot of time messing around with the model last week while prepping for the video I put out (please subscribe if you’re enjoying the videos; it motivates me to make ridiculous thumbnails).

I’ve continued to test it out and have been blown away by it. Here’s everything you need to know.

The Big Picture: A Million-Token Context Window

The standout feature for me is the staggering 1-million-token context window. It’s not the first Google model with a window that large, but Google has also said it will expand the context window to 2 million tokens in the near future.

For reference, The Great Gatsby is roughly 66k tokens. If you want to run your own needle-in-a-haystack experiments, you can use these prompts in PromptHub. They are all set up and ready to go.

Needle in a haystack prompt 1: Has 66k tokens in the prompt and a single needle

Needle in a haystack prompt 2: Has 200k tokens in the prompt and a single needle
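If you'd rather build a haystack yourself, here is a minimal Python sketch. It is not how the PromptHub prompts were made: it repeats a filler passage up to a rough token target (assuming about 4 characters per token, which is only a heuristic; real tokenizers differ), hides one "needle" sentence at a chosen depth, and appends a question about it. The function name, filler text, and passphrase are all hypothetical.

```python
import math

CHARS_PER_TOKEN = 4  # rough heuristic for English text; real tokenizers vary


def build_haystack_prompt(filler: str, needle: str, target_tokens: int, depth: float) -> str:
    """Repeat `filler` to roughly `target_tokens`, then hide `needle` at `depth`.

    depth=0.0 puts the needle at the start of the context, 1.0 near the end.
    """
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0.0 and 1.0")
    target_chars = target_tokens * CHARS_PER_TOKEN
    repeats = math.ceil(target_chars / len(filler))
    haystack = (filler * repeats)[:target_chars]

    # Snap the insertion point back to a sentence boundary so the needle
    # is not spliced into the middle of a word.
    cut = int(len(haystack) * depth)
    boundary = haystack.rfind(". ", 0, cut)
    cut = boundary + 2 if boundary != -1 else 0

    context = haystack[:cut] + needle + " " + haystack[cut:]
    question = "What is the secret passphrase mentioned in the text above? Answer with the passphrase only."
    return f"{context}\n\n{question}"


if __name__ == "__main__":
    filler = "The quick brown fox jumps over the lazy dog. "
    needle = "The secret passphrase is PURPLE-ELEPHANT-42."
    prompt = build_haystack_prompt(filler, needle, target_tokens=66_000, depth=0.5)
    print(f"~{len(prompt) // CHARS_PER_TOKEN} tokens (heuristic estimate)")
```

Varying `depth` across runs (start, middle, end) is the usual way to check whether recall drops for needles buried in the middle of a long context.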

Native PDF Support

Another nice feature of Gemini 2.5 Pro is native PDF support. PDF parsers can be a nightmare to work with when documents contain tables, charts, and other non-text content.

Gemini 2.5 Pro can handle PDFs straight out of the box (a feature that Anthropic currently offers as well).

Coding example

One of the highlights of my testing was having Gemini 2.5 Pro build the little game below.

It’s a simple game from a very simple prompt, but the execution was flawless. The model generated code that ran smoothly and even handled subtle details like movement and collision detection gracefully. That kind of fluid, responsive behavior is what set Gemini 2.5 Pro apart from the top models from other providers (see below).

Prompt: Make me a captivating space invaders style game, but a dog-style themed version. p5js scene, no HTML

For reference, here’s the game that o3-mini-high made with the exact same prompt. It was the worst of the bunch.

Claude 3.7 Sonnet

Link to my thread on X where I give a little more detail about each game.

Pricing

Pricing for Gemini 2.5 Pro isn’t available yet, but it’s worth noting just how cheap models are getting, especially Google’s.

For comparison:

o3-mini costs $1.10 per 1M input tokens and $4.40 per 1M output tokens.

Claude 3.5 Sonnet costs $3.00 per 1M input tokens and $15.00 per 1M output tokens.

Gemini 2.0 Flash costs $0.10 per 1M input tokens and $0.40 per 1M output tokens.

Gemini 2.0 Flash is ~90% cheaper than o3-mini and ~97% cheaper than Claude 3.5 Sonnet!
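To sanity-check those percentages, here is a small Python calculation using only the per-1M-token prices quoted above. Input and output prices are compared separately because the ratios differ slightly for Claude. The `job_cost` helper and the sample workload (66k input tokens, roughly one Great Gatsby, plus a 1k-token answer) are illustrative assumptions, not measured usage.

```python
# Per-1M-token prices in USD, as quoted in this post.
PRICES = {
    "o3-mini": {"input": 1.10, "output": 4.40},
    "Claude 3.5 Sonnet": {"input": 3.00, "output": 15.00},
    "Gemini 2.0 Flash": {"input": 0.10, "output": 0.40},
}


def percent_cheaper(cheap: str, expensive: str, kind: str) -> float:
    """How much cheaper `cheap` is than `expensive`, in percent, for 'input' or 'output' tokens."""
    return 100 * (1 - PRICES[cheap][kind] / PRICES[expensive][kind])


def job_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """USD cost of a single request with the given token counts."""
    price = PRICES[model]
    return (input_tokens * price["input"] + output_tokens * price["output"]) / 1_000_000


if __name__ == "__main__":
    for rival in ("o3-mini", "Claude 3.5 Sonnet"):
        for kind in ("input", "output"):
            pct = percent_cheaper("Gemini 2.0 Flash", rival, kind)
            print(f"Gemini 2.0 Flash vs {rival} ({kind}): {pct:.1f}% cheaper")

    # Hypothetical workload: a whole novel in the prompt plus a short answer.
    for model in PRICES:
        print(f"{model}: ${job_cost(model, 66_000, 1_000):.4f}")
```

Run as-is, it prints 90.9% for both o3-mini comparisons and 96.7% (input) / 97.3% (output) against Claude 3.5 Sonnet, which lines up with the ~90% and ~97% figures. The sample novel-sized request comes out to under a cent on Flash.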

WTF do we do with RAG

I’ve never been a huge believer in RAG. There certainly are situations where it makes sense (which I plan to cover in a future video), but it always felt like an over-engineered solution that people reached for too early in their LLM optimization process.

If you aren’t familiar with RAG, here’s a 7-minute overview.

Traditionally, RAG systems rely on vector databases to stitch together context from chunked documents. But with a million tokens at your disposal (and two million on the way), there’s a strong argument for a simpler “throw it all in the context window” approach. Why not load everything directly into the model and cache it to avoid large latency and cost hits?

Sure, databases have their benefits, especially when dealing with rapidly changing data, but there’s something beautifully simple about “jamming” the context in and letting the model work its magic. Early experiments suggest that this approach can not only cut latency but also simplify the overall system architecture.
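Here is a minimal Python sketch of the “throw it all in” approach with a fallback, under some loud assumptions: token counts use a ~4-characters-per-token heuristic rather than a real tokenizer, the 8k-token `reserve` for the question and answer is an arbitrary choice, and the fallback ranks documents by simple word overlap as a stand-in for the vector database a real RAG system would use. `build_context` and its parameters are hypothetical, and caching is left to whatever your provider offers.

```python
import math
import re

CHARS_PER_TOKEN = 4  # rough heuristic; use the provider's token counter for real numbers


def estimate_tokens(text: str) -> int:
    """Very rough token estimate based on character count."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def words(text: str) -> set[str]:
    """Lowercased alphanumeric words, used for the naive relevance score."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def build_context(docs: list[str], question: str, context_window: int,
                  reserve: int = 8_000) -> tuple[str, list[str]]:
    """Return (strategy, docs_to_send).

    'full' if the whole corpus fits in the window minus `reserve` tokens kept
    for the question and answer; otherwise 'retrieval', keeping the most
    relevant documents that still fit the budget.
    """
    budget = context_window - reserve - estimate_tokens(question)
    if sum(estimate_tokens(d) for d in docs) <= budget:
        return "full", list(docs)

    # Stand-in for vector search: rank documents by word overlap with the question.
    q_words = words(question)
    ranked = sorted(docs, key=lambda d: len(q_words & words(d)), reverse=True)
    selected, used = [], 0
    for doc in ranked:
        cost = estimate_tokens(doc)
        if used + cost <= budget:
            selected.append(doc)
            used += cost
    return "retrieval", selected


if __name__ == "__main__":
    corpus = ["cats purr " * 10, "dogs bark " * 10, "fish swim " * 10]
    strategy, chosen = build_context(corpus, "Why do dogs bark?", context_window=1_000_000)
    print(strategy, len(chosen))
```

The design choice is the ordering: take the simple path first and only reach for retrieval when the corpus genuinely doesn’t fit.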

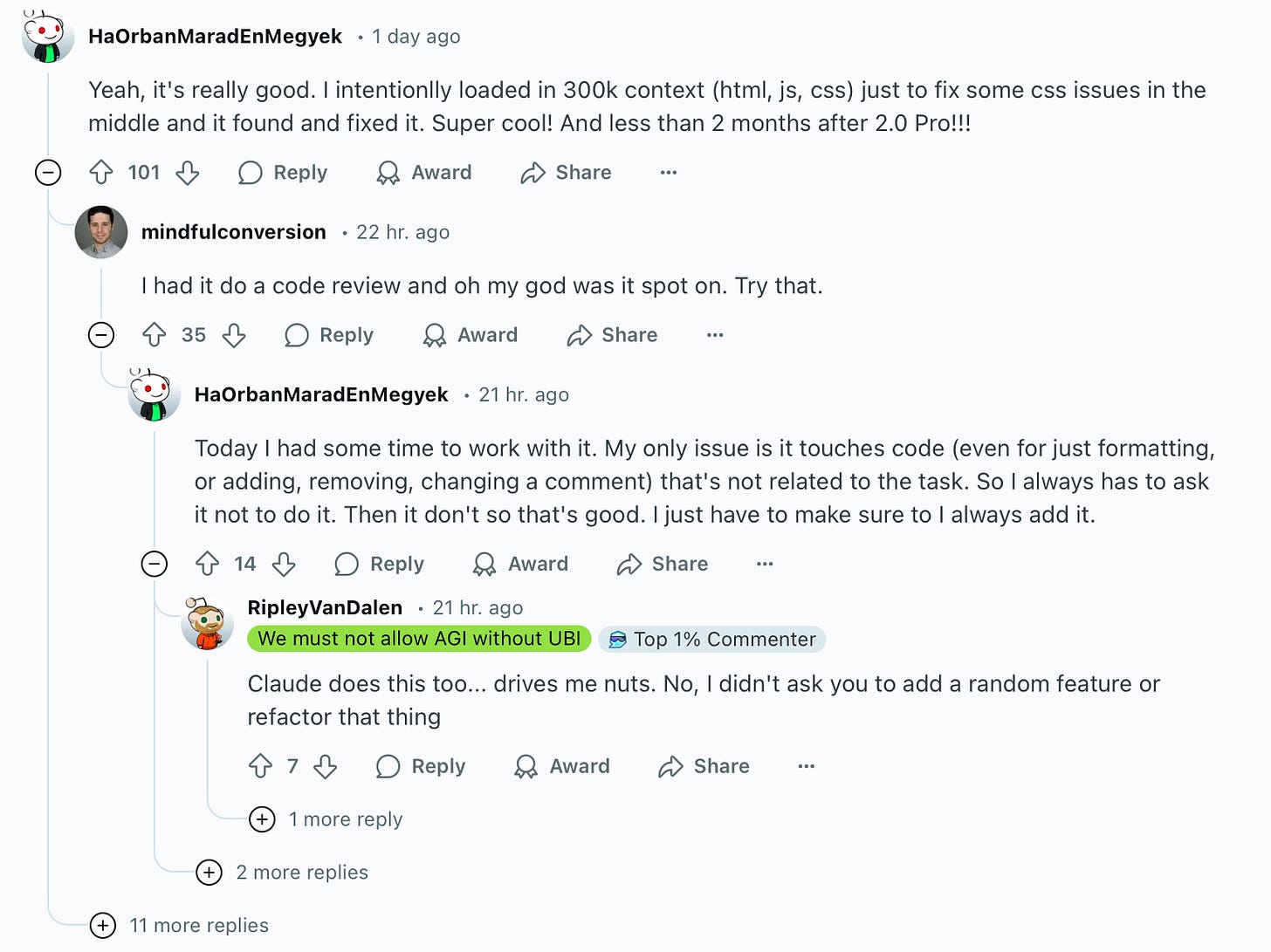

One concern: even if the model accepts 2 million tokens, can it actually make good use of all of them? Reddit seems to think so, and so do my initial needle-in-a-haystack tests, but I plan to test this more!

Final Thoughts

Google’s Gemini 2.5 Pro is firing on all cylinders. From massive context windows and native PDF support to innovative demos like the game above, this model is awesome.