Everything you need to know about few shot learning

Few shot learning is the easiest way to get better outputs from LLMs. Learn how and when to use it, what its current limitations are, and see tons of examples.

When a team comes to us with a prompting issue, one of the first things I check is whether they’ve tried few shot learning.

Few shot learning is a technique where you include examples of how you want the model to respond, directly in your prompt itself.

I believe this may be the most efficient way to get better outputs from LLMs. I don’t just mean compared to other prompt engineering methods; I mean compared to the other ways to optimize outputs (RAG, fine-tuning, etc.). The combination of how easy it is to implement and the immediate gains you’ll see is unmatched.

Let’s jump in.

What is few shot learning?

Few shot learning, also known as few shot prompting or in-context learning (ICL), is a prompt engineering method where you include examples in your prompt. This shows the model what you want the output to look/sound like.

Here’s a simple example where we are using a prompt to classify product feedback:

Few shot learning prompt example

The onboarding was smooth and informative // positive

Adding team members was challenging // negative

It was a little challenging to find how to start a new project // neutral

The API documentation was clear and helpful! //
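If you assemble prompts like this in code, the pattern is simple: one `input // label` line per example, then the new input with its label left blank. Here is a minimal sketch in Python; `build_classifier_prompt` is a hypothetical helper name, not part of any library.

```python
# Hypothetical helper (not from any library): formats labeled examples in the
# "text // label" style shown above and leaves the final label blank.
def build_classifier_prompt(examples: list[tuple[str, str]], query: str) -> str:
    lines = [f"{text} // {label}" for text, label in examples]
    # The unlabeled input goes last, ending in "//" so the model fills in the label.
    lines.append(f"{query} //")
    return "\n".join(lines)


examples = [
    ("The onboarding was smooth and informative", "positive"),
    ("Adding team members was challenging", "negative"),
    ("It was a little challenging to find how to start a new project", "neutral"),
]

prompt = build_classifier_prompt(examples, "The API documentation was clear and helpful!")
print(prompt)
```

To reuse the prompt for a different classification task, you would only swap the list of examples and the query.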

Few shot versus zero shot

You may hear or read about “zero shot prompting” or “one shot prompting”. These methods refer to when you use either zero or one example, respectively, instead of a few.
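One way to see the difference is that zero, one, and few shot prompts can come from the same builder, varying only how many examples get included. A minimal sketch, assuming a hypothetical `k_shot_prompt` helper; the instruction line is our own addition, since a zero shot classifier has no examples to show it what the labels are.

```python
# Hypothetical instruction plus the feedback examples from the classifier above.
INSTRUCTION = "Classify each piece of product feedback as positive, negative, or neutral."
EXAMPLES = [
    ("The onboarding was smooth and informative", "positive"),
    ("Adding team members was challenging", "negative"),
    ("It was a little challenging to find how to start a new project", "neutral"),
]


def k_shot_prompt(query: str, k: int) -> str:
    """Include the first k examples: k=0 is zero shot, k=1 is one shot, k>1 is few shot."""
    if not 0 <= k <= len(EXAMPLES):
        raise ValueError(f"k must be between 0 and {len(EXAMPLES)}")
    shots = [f"{text} // {label}" for text, label in EXAMPLES[:k]]
    return "\n".join([INSTRUCTION, *shots, f"{query} //"])


query = "The API documentation was clear and helpful!"
zero_shot = k_shot_prompt(query, 0)  # instruction + query only
one_shot = k_shot_prompt(query, 1)   # instruction + 1 example + query
few_shot = k_shot_prompt(query, 3)   # instruction + 3 examples + query
```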

Few shot learning examples

Let’s say you’re a marketing agency and you want to use AI to generate custom content for each of your clients. Few shot learning is a great fit here because it’s very effective at guiding the LLM to create content in the right tone and style.

Let's write a prompt that creates content mimicking the client's tone and that is reusable across all clients.

Few shot prompting example

Your job is to create content for our client, {{client_name}}.

Here are a few examples of content we've created in the past from previous project briefs:

"""

Project 1:

Brief: {{brief_1}}

Content: {{content_1}}

Project 2:

Brief: {{brief_2}}

Content: {{content_2}}

"""

Here is the latest brief to create content about:

"""

Brief: {{brief_description}}

Content:

In this prompt we pass along previous project briefs and the content we generated from those briefs. This gives the model a clear idea of the tone, format, and style for this specific client, and of what we generally produce from a given brief.

The examples are included as variables, so that we can swap out the data for different clients. We could even turn the prompt into a PromptHub form and share it with our team.
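If you're filling in these variables outside of PromptHub, a minimal sketch of the substitution could look like the following. It assumes the `{{name}}` placeholder syntax shown above, a trimmed copy of the template (one project, without the `"""` delimiters), and hypothetical client data; `render` is an illustrative helper, not a PromptHub API.

```python
import re

# A trimmed copy of the content-generation template above (one example project).
TEMPLATE = """Your job is to create content for our client, {{client_name}}.

Here are a few examples of content we've created in the past from previous project briefs:

Project 1:
Brief: {{brief_1}}
Content: {{content_1}}

Here is the latest brief to create content about:

Brief: {{brief_description}}
Content:"""


def render(template: str, variables: dict[str, str]) -> str:
    """Replace every {{name}} placeholder with variables[name]."""

    def substitute(match):
        name = match.group(1)
        if name not in variables:
            # Fail loudly rather than sending a prompt with a raw placeholder in it.
            raise KeyError(f"missing value for template variable: {name}")
        return variables[name]

    return re.sub(r"\{\{(\w+)\}\}", substitute, template)


# Hypothetical client data; in practice this would come from your own records.
prompt = render(TEMPLATE, {
    "client_name": "Acme Co",
    "brief_1": "Announce the spring sale",
    "content_1": "Spring is here, and so are our best prices of the year.",
    "brief_description": "Introduce the new loyalty program",
})
print(prompt)
```

Swapping in another client then means passing a different dictionary; the template itself stays the same.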

Few shot versus zero shot example

Let’s do a direct comparison to see just how much better few shot learning is compared to zero shot.

Let’s say we want to use an LLM to write a Python function that calculates the factorial of a number. Here are the prompts we will use:

Zero shot prompt

Write a Python function to calculate the factorial of a number.

Few shot prompt

Here are some examples of Python functions. Following these examples, write a function to calculate the factorial of a number.

Example 1:

---

def add(a, b):
    """Add two numbers and return the result."""
    if not (isinstance(a, (int, float)) and isinstance(b, (int, float))):
        raise ValueError("Both inputs must be numbers.")
    return a + b

---

Example 2:

---

def subtract(a, b):
    """Subtract second number from first and return result."""
    if not (isinstance(a, (int, float)) and isinstance(b, (int, float))):
        raise ValueError("Both inputs must be numbers.")
    return a - b

---

Now let’s look at the outputs

Zero shot prompt output

Sure! Here is a Python function that calculates the factorial of a number:

```
def factorial(num):
    if num == 0:
        return 1
    else:
        return num * factorial(num - 1)

# Test the function
number = 5
result = factorial(number)
print(f"The factorial of {number} is {result}")
```

You can call the `factorial` function with any number as an argument to calculate its factorial.

Few shot prompt output

Here is an example of a Python function to calculate the factorial of a number, including a check for non-negative input:

```
def factorial(n):
    """Calculate the factorial of a non-negative integer n."""
    if not isinstance(n, int) or n < 0:
        raise ValueError("Input must be a non-negative integer.")
    if n == 0:
        return 1
    else:
        result = 1
        for i in range(1, n + 1):
            result *= i
        return result
```

This function takes a non-negative integer `n` as input and calculates its factorial. It first checks if the input is a non-negative integer and raises a `ValueError` if it is not. Then, it calculates the factorial using a loop and returns the result.

Here are the main differences:

The zero shot output doesn't account for negative numbers: `factorial(-1)` keeps recursing until Python raises a `RecursionError`

The few shot output validates its input and raises a clear `ValueError` for negative (or non-integer) values, mirroring the checks in our examples. It also includes a helpful description outside of the code block

Overall, the few shot prompt produced output that was more robust, more descriptive, and easier to maintain.
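To make the first difference concrete, here is a quick check that runs both generated functions (renamed so they can coexist) on a valid and a negative input. Catching `RecursionError` is only for demonstration; the point is that the zero shot version fails with a recursion error while the few shot version rejects the input up front.

```python
def factorial_zero_shot(num):
    # The zero shot output above, renamed: recursive, with no input validation.
    if num == 0:
        return 1
    else:
        return num * factorial_zero_shot(num - 1)


def factorial_few_shot(n):
    # The few shot output above, renamed: validates input, then loops.
    if not isinstance(n, int) or n < 0:
        raise ValueError("Input must be a non-negative integer.")
    if n == 0:
        return 1
    else:
        result = 1
        for i in range(1, n + 1):
            result *= i
        return result


def outcome(func, value):
    """Return func(value), or the name of the exception it raised."""
    try:
        return func(value)
    except (RecursionError, ValueError) as exc:
        return type(exc).__name__


for value in (5, -1):
    print(value, outcome(factorial_zero_shot, value), outcome(factorial_few_shot, value))
# 5 120 120
# -1 RecursionError ValueError
```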

Few shot learning with multiple prompts

So far the examples we’ve looked at have been single prompts, with multiple examples included.

In our first example we sent input + output pairs of product feedback. In our second example, we included input + output pairs for content creation. In both cases we were showing and signaling to the LLM “if this was the input, here is what the output should be”.

The other way to implement few shot learning is by using multiple prompts and messages. It will look similar to our first two examples, but rather than including the inputs and outputs in one prompt, we’ll separate them into individual messages. We’ll use PromptHub’s chat testing feature to do this.

We send multiple messages all at once. It’s like pre-baking a conversation with the proper context: because the LLM already knows what we’re looking for, we get a very tailored output.

So when should you use each method? It comes down to your situation and the resources you have available. Overall, a multiple message setup like the one above is relatively straightforward to implement with PromptHub’s chat testing feature, but it does require a bit of extra lift.
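Outside of PromptHub, the same pre-baked conversation is typically expressed as a list of role-tagged messages. A minimal sketch, assuming the common `{"role": ..., "content": ...}` message format accepted by chat APIs such as OpenAI's Chat Completions; `build_messages` is a hypothetical helper and the actual API call is omitted.

```python
# Hypothetical system message and examples, reusing the feedback classifier.
SYSTEM = "You classify product feedback as positive, negative, or neutral."
EXAMPLES = [
    ("The onboarding was smooth and informative", "positive"),
    ("Adding team members was challenging", "negative"),
    ("It was a little challenging to find how to start a new project", "neutral"),
]


def build_messages(examples, query, system=None):
    """Turn each example into a user turn plus the assistant reply we want,
    then append the real input as the final user turn."""
    messages = []
    if system:
        messages.append({"role": "system", "content": system})
    for text, label in examples:
        messages.append({"role": "user", "content": text})
        messages.append({"role": "assistant", "content": label})
    messages.append({"role": "user", "content": query})
    return messages


messages = build_messages(EXAMPLES, "The API documentation was clear and helpful!", SYSTEM)
for message in messages:
    print(f'{message["role"]:>9}: {message["content"]}')
```

The resulting list would be sent as the conversation history in a single request; each user/assistant pair plays the role that an `input // label` line plays in a single prompt.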

Use multiple messages when:

Simulating a back-and-forth interaction (chatbot): The model needs to understand and respond within the flow of a conversation.

Contextual Continuity: The goal is to sustain a consistent and coherent narrative across multiple messages.

Incremental Complexity: The use case benefits from gradually developing the context, where each message introduces an additional layer of complexity or subtlety, which might not be captured by a single prompt alone.

Use a single prompt when:

Streamlining Processing: The goal is efficiency, aiming for the model to handle examples and produce a response in one go

Lack of resources: You don’t have the resources to implement few shot learning via multiple messages

As always, I’d recommend testing both options out and seeing what the difference in output quality looks like. You can use PromptHub’s testing features to do this. You can send a single user prompt and compare it to a string of messages using our chat testing comparison tools.

Few shot learning best practices

How many examples should I include?

Multiple papers point to major gains up to about two examples, after which performance flattens out.

Does the order of the examples matter?

The order does seem to make a difference, but how much it matters varies by model. One likely reason is that LLMs tend to put more weight on the last piece of information they receive, so there is a chance the LLM will overfit to the last example and your output will look a lot like it.

In a paper that is now a few years old, researchers varied the order of the examples in a prompt fed to GPT-3, and accuracy varied wildly. It's debatable how much of that variance comes from the older, less capable model versus the order of the examples themselves.

One strategy you could test is to put your ‘best’ example last in the order.
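If you want to test ordering systematically, a minimal sketch could put your highest-rated example last and also enumerate every ordering for comparison. The scores here are hypothetical values you would assign by hand.

```python
from itertools import permutations

# Hypothetical examples, each with a quality score you assign by hand (higher is better).
EXAMPLES = [
    {"text": "Adding team members was challenging // negative", "score": 2},
    {"text": "The onboarding was smooth and informative // positive", "score": 3},
    {"text": "It was a little challenging to find how to start a new project // neutral", "score": 1},
]


def best_last(examples):
    """Sort by ascending score so the highest-scored example ends up last."""
    return sorted(examples, key=lambda ex: ex["score"])


def all_orderings(examples):
    """Every ordering of the examples, for measuring how sensitive outputs are to order."""
    return [list(order) for order in permutations(examples)]


ordered = best_last(EXAMPLES)
print(ordered[-1]["text"])  # The onboarding was smooth and informative // positive
print(len(all_orderings(EXAMPLES)))  # 6, i.e. 3!
```

The number of orderings grows factorially (5 examples already give 120), so beyond a handful of examples it is more practical to compare a few random shuffles.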

What comes first, instructions or examples?

Typically we see instructions first and examples second, because that order feels more logical to us. But if the model seems to have a hard time ‘remembering’ or following the instructions, it’s worth trying the reverse order.

If the model seems to get ‘lost’ in the examples, that’s another good sign to flip the order.

If your task is really basic, you could even remove the instructions altogether, as we did for the feedback classifier in our first example.
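To compare these variations side by side, a minimal sketch of a hypothetical `assemble_prompt` helper can place the instructions before or after the examples, or drop them entirely.

```python
def assemble_prompt(instructions, examples, query, instructions_first=True):
    """Place the instructions before or after the examples, then append the query.

    Passing an empty string for instructions drops them entirely.
    """
    example_block = "\n".join(examples)
    if instructions_first:
        parts = [instructions, example_block]
    else:
        parts = [example_block, instructions]
    # Skip empty sections so an omitted instruction doesn't leave a blank gap.
    return "\n\n".join([part for part in parts if part] + [query])


INSTRUCTIONS = "Classify each piece of product feedback as positive, negative, or neutral."
EXAMPLES = [
    "The onboarding was smooth and informative // positive",
    "Adding team members was challenging // negative",
]
QUERY = "The API documentation was clear and helpful! //"

instr_first = assemble_prompt(INSTRUCTIONS, EXAMPLES, QUERY)
examples_first = assemble_prompt(INSTRUCTIONS, EXAMPLES, QUERY, instructions_first=False)
bare = assemble_prompt("", EXAMPLES, QUERY)
```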

Can I use an LLM to generate the examples?

Yes, give it a shot!

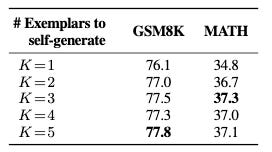

We did a deep dive on a research paper that ran several experiments in which the LLM was tasked to generate the in-context examples (full post here: Using Analogical Prompting to Generate In-Context Examples).

We also put together a template you can use to have the model generate examples for any problem or task.
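As a rough illustration of the idea (not the template from the paper or our own template), a prompt that asks the model to generate its own examples before answering might be assembled like this. The section headings and wording are assumptions loosely modeled on analogical prompting.

```python
def self_generated_examples_prompt(problem: str, n_examples: int = 3) -> str:
    """Ask the model to recall its own worked examples before solving the problem.

    Loosely inspired by analogical prompting; the exact wording is an assumption.
    """
    return (
        f"# Problem:\n{problem}\n\n"
        "# Instructions:\n"
        f"## Relevant problems:\nRecall {n_examples} relevant and distinct problems. "
        "For each problem, describe it and explain the solution.\n\n"
        "## Solve the initial problem:\n"
    )


print(self_generated_examples_prompt(
    "Write a Python function to calculate the factorial of a number."
))
```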

When should you use few shot learning?

Few shot learning can be applied to most situations, which is great. If you want your outputs to better follow a certain structure, tone, or style, few shot learning is a good option to test.

Here are a few more specific situations:

Technical domains: In technical fields where gathering a lot of data is difficult, few shot prompting is a good way to generate high-quality, domain-specific outputs without the need for large datasets

Matching tone (content generation): A big problem with using LLMs for content generation is that the text often ‘sounds like AI’. Sending even just two examples via few shot learning can make the outputs sound much more human-like and on-brand.

Strict output requirements: Few shot prompting is great for showing the model the shape of the output you want (bullets, markdown, JSON, conversational, etc.)
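For strict formats like JSON, the examples can show the exact shape you want, and you can check the reply against that same shape. A minimal sketch with hypothetical examples and a made-up model reply standing in for a real API response:

```python
import json

# Hypothetical examples: every output is JSON with the same keys, so the
# model sees exactly the shape we expect back.
EXAMPLES = [
    ("The onboarding was smooth and informative",
     {"sentiment": "positive", "topic": "onboarding"}),
    ("Adding team members was challenging",
     {"sentiment": "negative", "topic": "team management"}),
]
EXPECTED_KEYS = {"sentiment", "topic"}


def build_json_prompt(query: str) -> str:
    """Show each example as Input/Output, serializing outputs with json.dumps."""
    shots = [f"Input: {text}\nOutput: {json.dumps(output)}" for text, output in EXAMPLES]
    return "\n\n".join(shots + [f"Input: {query}\nOutput:"])


def parse_reply(raw: str) -> dict:
    """Parse the model's reply and confirm it uses the same keys as the examples."""
    data = json.loads(raw)  # raises json.JSONDecodeError on malformed JSON
    if not isinstance(data, dict) or set(data) != EXPECTED_KEYS:
        raise ValueError(f"unexpected reply shape: {raw!r}")
    return data


prompt = build_json_prompt("The API documentation was clear and helpful!")
# A made-up reply standing in for a real model response.
reply = '{"sentiment": "positive", "topic": "documentation"}'
print(parse_reply(reply))
```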

Current limitations and challenges

Few shot prompting is great, but it does have its weaknesses, namely:

Overfitting: The model may mimic the examples too closely, copying details or phrasing you didn’t intend

Garbage in, garbage out: Few shot learning depends heavily on having high-quality and diverse examples to learn from

Majority label bias: The model may favor answers that appear more frequently in your prompt and examples. In our product feedback classifier, for instance, the model may skew toward predicting positive sentiment if the prompt contains more positive examples

Recency bias: As mentioned above, if you send the instructions and then the examples, the model may overemphasize the last example it sees
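For majority label bias, one simple mitigation to test is checking the label distribution of your examples and sampling an equal number per label. A minimal sketch with a hypothetical, positively skewed example pool; shuffling the chosen examples also keeps one label from always landing last, which relates to recency bias.

```python
import random
from collections import Counter

# Hypothetical example pool that is skewed toward positive feedback.
POOL = [
    ("The onboarding was smooth and informative", "positive"),
    ("Setup took less than five minutes", "positive"),
    ("Support answered my question within minutes", "positive"),
    ("I love the new dashboard", "positive"),
    ("Adding team members was challenging", "negative"),
    ("The export kept timing out", "negative"),
    ("It was a little challenging to find how to start a new project", "neutral"),
    ("The settings page works as expected", "neutral"),
]


def label_counts(examples):
    """Count how often each label appears, to spot a skewed example set."""
    return Counter(label for _, label in examples)


def balanced_sample(examples, per_label, seed=0):
    """Pick the same number of examples for every label, then shuffle them so
    no single label is clustered at the end of the prompt."""
    rng = random.Random(seed)
    by_label = {}
    for text, label in examples:
        by_label.setdefault(label, []).append((text, label))
    chosen = []
    for label, items in sorted(by_label.items()):
        if len(items) < per_label:
            raise ValueError(f"only {len(items)} examples for label {label!r}")
        chosen.extend(rng.sample(items, per_label))
    rng.shuffle(chosen)
    return chosen


print(label_counts(POOL))
balanced = balanced_sample(POOL, per_label=2)
print(label_counts(balanced))
```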

In Closing

Few shot learning may be the most efficient way to quickly get better outputs from LLMs. It requires no technical ability, can be set up fairly quickly, and you can even use LLMs and Analogical Prompting to help you generate high quality examples.

Hopefully this helps! Feel free to ask any questions below.