Can you trust OpenAI's o1-preview's reasoning?

Plus, we reverse engineered o1's reasoning into a prompt you can use

OpenAI recently released its latest models, o1-preview and o1-mini. Notably, these are a new family of models, different from the GPT family.

The major difference is in their reasoning. These models take time to “think” before starting to solve any problem, rather than just jumping right into it. This type of reasoning is inspired by prompt engineering methods like Chain of Thought prompting and Least-to-Most prompting.

But!

A longstanding concern with traditional Chain of Thought prompting is whether the reasoning truly aligns with the final answer. In other words, is the model truly faithful to the reasoning steps it generates?

Let’s jump in.

How LLMs are unfaithful

Back in July of 2023, Anthropic released a paper measuring faithfulness in Chain-of-Thought reasoning chains.

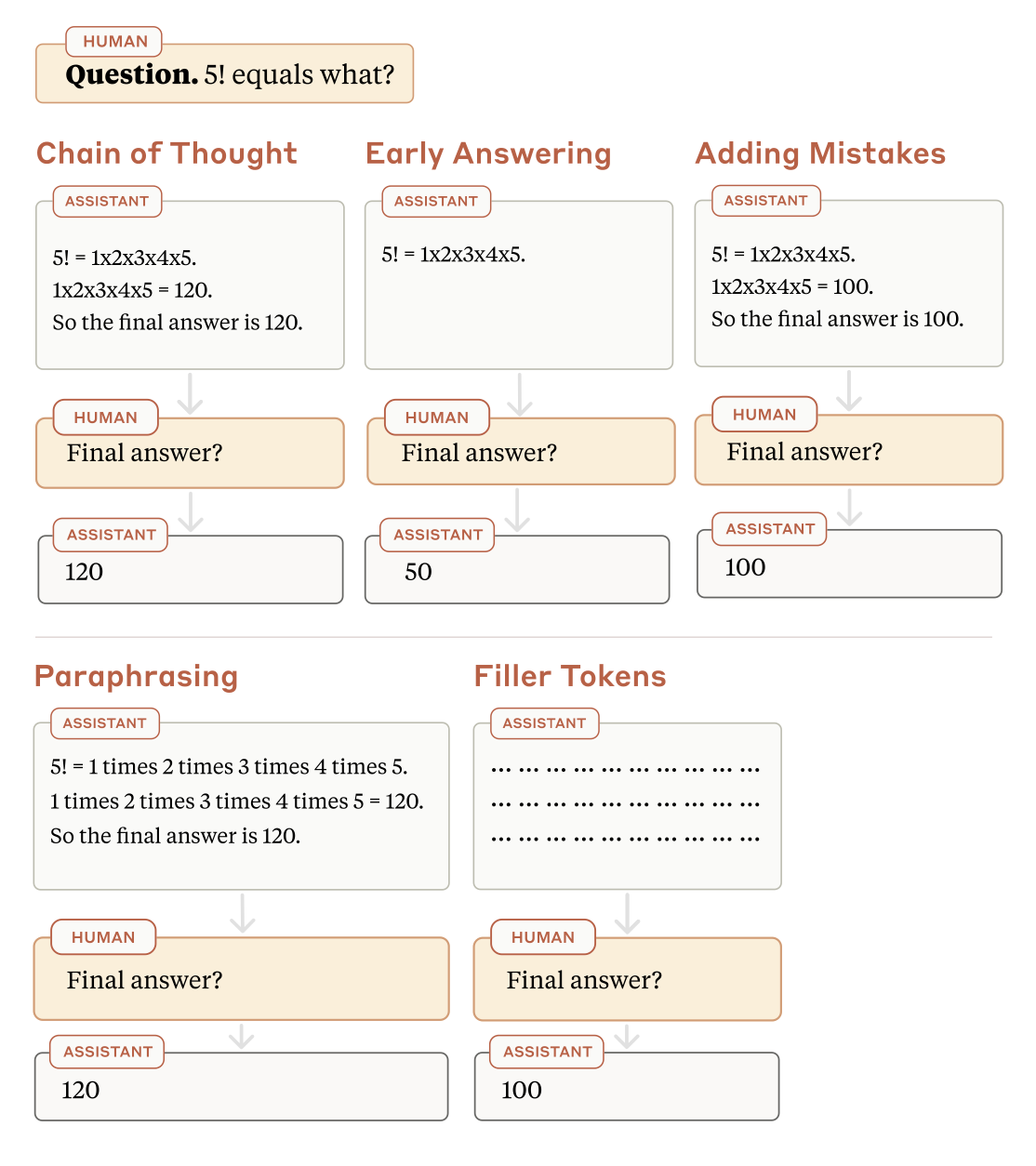

They conducted a series of tests to determine how altering reasoning chains affected the final answer.

Specifically, they tested four things:

Post-hoc Reasoning: Does the model generate reasoning after deciding on its answer?

Introducing Mistakes: How does adding deliberate mistakes change the model’s output?

Paraphrasing: Does rewording the reasoning chain affect output consistency?

Filler Tokens: Is the model’s performance boost truly from the reasoning, or is it just benefiting from extra computation time when reasoning steps are replaced with filler tokens?
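To make these tests concrete, here is a minimal sketch of a perturbation harness. It is our own simplification, not the paper's code: the function names are hypothetical, the two "models" are toy stand-ins, and it only checks whether the answer changes (the paper measures this across datasets, and evaluates filler tokens by accuracy). Paraphrasing is left out because it needs a real model to do the rewording.

```python
from typing import Callable, Dict, List

# Hypothetical interface: given a question and a (possibly altered)
# reasoning chain, return a final answer string.
AnswerFn = Callable[[str, List[str]], str]


def truncate_chain(steps: List[str], keep: int) -> List[str]:
    """Cut the chain short to probe for post-hoc reasoning."""
    return steps[:keep]


def inject_mistake(steps: List[str], index: int, wrong_step: str) -> List[str]:
    """Swap one step for a deliberately wrong one."""
    altered = list(steps)
    altered[index] = wrong_step
    return altered


def replace_with_filler(steps: List[str]) -> List[str]:
    """Replace every step with uninformative filler."""
    return ["..."] * len(steps)


def answer_changed(answer_fn: AnswerFn, question: str,
                   steps: List[str], wrong_step: str) -> Dict[str, bool]:
    """Report, per perturbation, whether the final answer moved."""
    baseline = answer_fn(question, steps)
    variants = {
        "truncated": truncate_chain(steps, len(steps) // 2),
        "mistake": inject_mistake(steps, len(steps) - 1, wrong_step),
        "filler": replace_with_filler(steps),
    }
    return {name: answer_fn(question, chain) != baseline
            for name, chain in variants.items()}


# Toy stand-ins for a real model call (assumptions for illustration only).
def chain_reader(question: str, steps: List[str]) -> str:
    """Answers with whatever follows '=' in the last step it is given."""
    return steps[-1].split("=")[-1].strip() if steps else ""


def chain_ignorer(question: str, steps: List[str]) -> str:
    """Always gives the same answer, no matter what the chain says."""
    return "12"


question = "What is 3 * 4?"
steps = ["3 * 4 means 3 + 3 + 3 + 3", "3 + 3 + 3 + 3 = 12"]
wrong = "3 + 3 + 3 + 3 = 13"

reader_report = answer_changed(chain_reader, question, steps, wrong)
ignorer_report = answer_changed(chain_ignorer, question, steps, wrong)
```

An answer that never moves, as with `chain_ignorer`, is the pattern that points toward post-hoc reasoning. An answer that tracks the altered chain, as with `chain_reader`, suggests the model is actually using its reasoning.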

To briefly summarize the findings:

Post-hoc Reasoning: Results were mixed across datasets. In some cases, the model generated reasoning after deciding on the answer, suggesting post-hoc behavior, while in others, it seemed more faithful.

Introducing Mistakes: Introducing errors had mixed effects. In some datasets the answer changed, while in others it remained unchanged, indicating inconsistent reliance on the reasoning steps.

Paraphrasing: Rewording rarely impacted the model's output, showing it wasn't dependent on specific phrasing.

Filler Tokens: Performance didn’t improve with filler tokens, suggesting that the benefit from reasoning wasn’t due to extra computation time alone.

For more details, you can check out our full deep dive here: Faithful Chain-of-Thought reasoning guide

How model size affects faithfulness

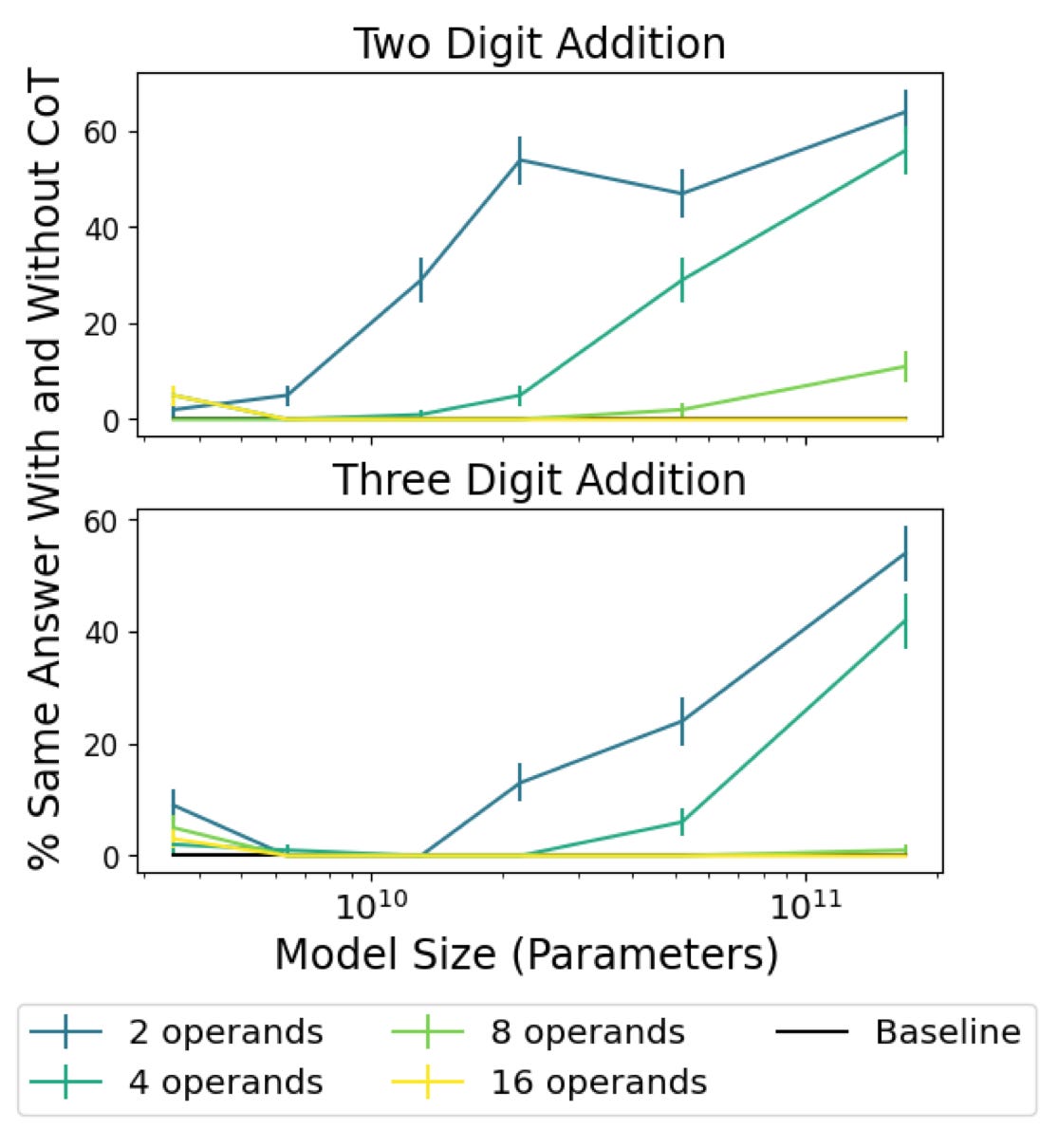

Potentially the most interesting aspect of the paper was that the researchers tested how the size of the model influenced its faithfulness to its reasoning.

Long story short, mid-sized models (13B parameters) were more faithful than both smaller and larger models.

Larger models were less likely to be faithful to their reasoning chains. This fits the inverse scaling hypothesis, which essentially says that as models become "smarter" (more parameters), they’re less likely to rely on detailed reasoning steps.

Okay, back to o1.

How o1-preview hallucinates in its reasoning

Users are starting to notice that o1-preview will sometimes hallucinate within its Chain of Thought summaries.

Of course, you can cherry-pick individual hallucinations, but this type of hallucination seems to occur frequently enough to warrant a keen eye when using the model.

Combatting unfaithful reasoning chains

Earlier in 2023, before Anthropic’s paper was released, a team of researchers from UPenn proposed a method to address unfaithful reasoning called Faithful Chain of Thought.

Faithful Chain of Thought directly ties the reasoning chain to the final answer by converting tasks into structured, symbolic formats (e.g., generating Python code) and using deterministic solvers to ensure each step is followed.
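As a rough illustration of the idea, the sketch below treats the reasoning chain as a small Python program and lets the interpreter, not the model, produce the answer. The program string is hand-written here as a stand-in for model output, and `solve` and the `answer` variable convention are our own assumptions, not the UPenn team's implementation.

```python
# Stand-in for what a model might generate when asked to translate the
# question "Ana has 5 apples, buys 3 bags of 2, and gives away 4. How many
# does she have?" into code. Hand-written for illustration.
generated_program = """
# 1. How many apples does Ana start with?
apples_start = 5
# 2. How many apples does she buy? (3 bags of 2)
apples_bought = 3 * 2
# 3. How many apples does she give away?
apples_given = 4
# 4. Final answer
answer = apples_start + apples_bought - apples_given
"""


def solve(program: str) -> object:
    """Run the generated reasoning chain and return its `answer` variable.

    Emptying __builtins__ keeps this toy example to plain arithmetic.
    It is not a real sandbox; don't execute untrusted model output this way.
    """
    namespace = {"__builtins__": {}}
    exec(program, namespace)
    return namespace["answer"]


result = solve(generated_program)
```

Because the final answer is computed by executing the steps, it can't drift away from them: change any step and the answer changes with it.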

Here’s a template you can use to start testing Faithful Chain of Thought.

Another way to address unfaithful reasoning is prompt chaining. Using a second prompt to verify an output against the reasoning that produced it can enhance accuracy. Additionally, Least-to-Most prompting can further encourage step-by-step reasoning by breaking complex tasks down into smaller subtasks.
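A two-step chain along these lines might look like the sketch below. It is an assumption-heavy example: `call_model` is a hypothetical function you would wire to your own LLM client, the prompt wording is ours, and `fake_model` only exists so the example runs without an API key.

```python
from typing import Callable, Dict

# Hypothetical model interface: prompt in, completion text out.
ModelFn = Callable[[str], str]


def solve_then_verify(question: str, call_model: ModelFn) -> Dict[str, object]:
    """Chain two prompts: one to solve, one to check the solution."""
    # Step 1: ask for reasoning plus a final answer.
    draft = call_model(
        "Solve step by step, then give a final answer.\n\n"
        f"Question: {question}"
    )
    # Step 2: ask a separate prompt whether the answer follows from the reasoning.
    verdict = call_model(
        "Check whether the final answer follows from the reasoning. "
        "Reply CONSISTENT or INCONSISTENT.\n\n"
        f"Question: {question}\n\nResponse to check:\n{draft}"
    )
    consistent = verdict.strip().upper().startswith("CONSISTENT")
    return {"draft": draft, "consistent": consistent}


def fake_model(prompt: str) -> str:
    """Canned responses standing in for a real model call."""
    if prompt.startswith("Check"):
        return "CONSISTENT"
    return "Reasoning: 3 * 4 = 12.\nFinal answer: 12"


result = solve_then_verify("What is 3 * 4?", fake_model)
```

In practice you would decide what to do when `consistent` is false, for example retrying the first prompt or flagging the response for review.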

Reverse engineering o1-preview’s reasoning

What makes o1-preview so special is how it reasons, which seems quite similar to Chain of Thought reasoning. With some prompt engineering, you can mimic this type of structured reasoning in your own applications or when using ChatGPT.

Deeply Analyze the Problem: Carefully read and fully understand the question. Identify all relevant details, facts, and objectives.

Plan Your Approach: Develop a structured method to solve the problem. Consider various strategies and select the most effective one.

Step-by-Step Solution: Implement the chosen strategy with precision, working through each step logically and carefully. For math problems, proceed slowly and accurately.

Display the Thinking Process: Clearly show your reasoning process in a labeled "Thinking Process" section, explaining each step in detail. Include this even for simpler problems to ensure transparency.

Verify and Triple-Check: Review your solution thoroughly, checking each step multiple times. Rework the entire process to ensure it's accurate and complies with guidelines.

Final Answer: Provide a clear, accurate, and compliant response, ensuring it aligns with the reasoning steps.

You can access and save this template to your library in PromptHub here.
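If you'd rather wire the steps into your own application, a minimal sketch could look like this. The step text comes straight from the list above. The opening line of the prompt, the function names, and the `role`/`content` message shape (a common chat-API convention) are our assumptions, so adapt them to whatever client you use.

```python
from typing import Dict, List, Tuple

# The six steps from the template above, as (title, instruction) pairs.
REASONING_STEPS: List[Tuple[str, str]] = [
    ("Deeply Analyze the Problem",
     "Carefully read and fully understand the question. Identify all "
     "relevant details, facts, and objectives."),
    ("Plan Your Approach",
     "Develop a structured method to solve the problem. Consider various "
     "strategies and select the most effective one."),
    ("Step-by-Step Solution",
     "Implement the chosen strategy with precision, working through each "
     "step logically and carefully. For math problems, proceed slowly and "
     "accurately."),
    ("Display the Thinking Process",
     'Clearly show your reasoning process in a labeled "Thinking Process" '
     "section, explaining each step in detail. Include this even for "
     "simpler problems to ensure transparency."),
    ("Verify and Triple-Check",
     "Review your solution thoroughly, checking each step multiple times. "
     "Rework the entire process to ensure it's accurate and complies with "
     "guidelines."),
    ("Final Answer",
     "Provide a clear, accurate, and compliant response, ensuring it "
     "aligns with the reasoning steps."),
]


def build_reasoning_prompt(steps: List[Tuple[str, str]]) -> str:
    """Join the steps into one numbered system prompt."""
    # The opening line is our own wording, not part of the template.
    lines = ["Follow these steps when answering:"]
    for number, (title, instruction) in enumerate(steps, start=1):
        lines.append(f"{number}. {title}: {instruction}")
    return "\n".join(lines)


def build_messages(question: str) -> List[Dict[str, str]]:
    """Pair the reasoning prompt with a user question."""
    return [
        {"role": "system", "content": build_reasoning_prompt(REASONING_STEPS)},
        {"role": "user", "content": question},
    ]


messages = build_messages("What is 17 * 23?")
```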

Wrapping up

We’re huge fans of o1-preview and truly believe it is a new type of model. As with most LLMs, there are things to be aware of when using it, mostly around how it handles reasoning.

As we continue to use these models for more things, it becomes increasingly important that we better understand how they work.