Anthropic's Model Context Protocol for dummies (I'm the dummy)

Plus, a free upcoming webinar

Before we jump in: I’ll be running the second edition of PromptLab in March. It’s a free, 3-day course on prompt engineering, agents, reasoning models, and a bunch of other fun stuff. Did I mention it’s free?

Alright, onto the good stuff.

Large Language Models are incredibly smart and capable, but giving them access to the right data and the right tools is a huge challenge. It often takes a ton of custom integration work, and the resulting integrations tend to be brittle and support only certain functions and models.

Anthropic recently launched the Model Context Protocol (MCP) to help address this problem. MCP aims to make connecting LLMs to data, tools, and real-world applications seamless. It’s a beautiful idea: a universal, open standard that connects LLMs with the systems where your data lives.

Let’s dive in!

How MCP works and why it’s helpful

At its heart, the Model Context Protocol is all about simplification.

Today, even the most basic LLM deployments require a custom connector for each new data source, whether it’s a company database, Google Drive, or a specific app (Notion, Jira, etc.). This creates a classic “N×M” problem: with N data sources and M AI applications, every pairing would normally demand its own bespoke integration.
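To make the N×M arithmetic concrete, here is a rough count of integration pieces with and without a shared standard. It assumes the simplest model: without a standard, every (source, app) pair needs its own connector; with one, each source needs one adapter and each app needs one client, for N + M pieces in total. The function names and the numbers are illustrative only.

```python
def integrations_without_standard(n_sources: int, m_apps: int) -> int:
    """Every (source, app) pair needs its own bespoke connector: N * M."""
    return n_sources * m_apps


def integrations_with_standard(n_sources: int, m_apps: int) -> int:
    """One adapter per source plus one client per app: N + M."""
    return n_sources + m_apps


if __name__ == "__main__":
    # Illustrative numbers only: 10 data sources, 5 AI applications.
    print(integrations_without_standard(10, 5))  # 50
    print(integrations_with_standard(10, 5))     # 15
```

The gap widens as either side grows: adding an eleventh data source costs five new connectors in the first model, but only one new adapter in the second.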

MCP changes this by introducing a universal client-server model. In this setup, the AI application (think of an AI-powered IDE like Bolt) acts as an MCP client, while each data source or service is wrapped by an MCP server: a lightweight adapter that speaks a single, standard interface.
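What does that “single, standard interface” look like on the wire? MCP messages are JSON-RPC 2.0 objects, and `tools/list` and `tools/call` are method names from the MCP specification. The sketch below only builds the request payloads; it assumes the transport (such as stdio) and the `initialize` handshake that opens a real session are handled elsewhere, and the tool name `search_issues` and its arguments are hypothetical.

```python
import json
from itertools import count
from typing import Any, Optional

# Request ids only need to be unique within a connection; a counter is enough here.
_ids = count(1)


def make_request(method: str, params: Optional[dict[str, Any]] = None) -> str:
    """Serialize a JSON-RPC 2.0 request, the message format MCP is built on."""
    message: dict[str, Any] = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


if __name__ == "__main__":
    # Ask a server which tools it exposes.
    print(make_request("tools/list"))
    # Invoke one of them; "search_issues" and its arguments are hypothetical.
    print(make_request("tools/call", {"name": "search_issues",
                                      "arguments": {"query": "login bug"}}))
```

Because every server understands the same envelope, a client written against this format does not need to know whether the server behind it wraps Jira, Slack, or a local folder.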

What does this mean in practice?

Unified Integration: Instead of juggling separate APIs or plugins, developers only need to implement MCP once. Any MCP-compliant server—whether it’s for a Git repository, a Slack workspace, or a file system—can plug right into any MCP client.

Two-Way Communication: MCP isn’t just about reading data. The protocol also enables controlled operations, so an AI assistant can both fetch context (like documents, records, or code) and execute actions (such as running a query, posting a message, or writing to a database). All of these interactions follow a uniform set of rules for authentication, data formatting, and messaging.

Scalability and Simplicity: By replacing a web of custom connectors with a single protocol, MCP makes it much easier to integrate new data sources. The result is AI systems that are easier to build, more contextually aware, and able to take actions seamlessly.
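Here is a toy, in-process illustration of the three points above: two unrelated “servers” expose readable context and callable actions behind one request interface, and the same client function talks to both. `ToyServer`, `client_request`, `post_message`, and the `file:///notes.txt` URI are all hypothetical; only the method names `resources/read` and `tools/call` are borrowed from the MCP specification. This is not the official SDK, and real MCP responses wrap their results in structured content objects rather than returning bare values.

```python
from typing import Any, Callable


class ToyServer:
    """Hypothetical stand-in for an MCP server: context to read, actions to call."""

    def __init__(self, resources: dict[str, str], tools: dict[str, Callable[..., Any]]):
        self.resources = resources
        self.tools = tools

    def handle(self, method: str, params: dict[str, Any]) -> Any:
        if method == "resources/read":
            # Fetch context, e.g. a document or file, by URI.
            return self.resources[params["uri"]]
        if method == "tools/call":
            # Execute an action by name with keyword arguments.
            return self.tools[params["name"]](**params.get("arguments", {}))
        raise ValueError(f"unsupported method: {method}")


sent_messages: list[str] = []


def post_message(text: str) -> str:
    """Hypothetical action: record the message instead of posting to a real chat app."""
    sent_messages.append(text)
    return "ok"


# Two unrelated integrations that share the same interface.
notes = ToyServer(resources={"file:///notes.txt": "Ship MCP demo Friday"}, tools={})
chat = ToyServer(resources={}, tools={"post_message": post_message})


def client_request(server: ToyServer, method: str, params: dict[str, Any]) -> Any:
    """The client code is identical no matter which server it talks to."""
    return server.handle(method, params)


if __name__ == "__main__":
    context = client_request(notes, "resources/read", {"uri": "file:///notes.txt"})
    client_request(chat, "tools/call", {"name": "post_message", "arguments": {"text": context}})
    print(sent_messages)  # ['Ship MCP demo Friday']
```

The design point is that the client only ever calls `handle(method, params)`. Adding a third integration means writing one more server, without touching the client.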

Wrapping up

Anthropic’s MCP is a great first step in creating a standard that simplifies the marriage between AI models and the data they need. If you’re a developer building with LLMs, check it out!

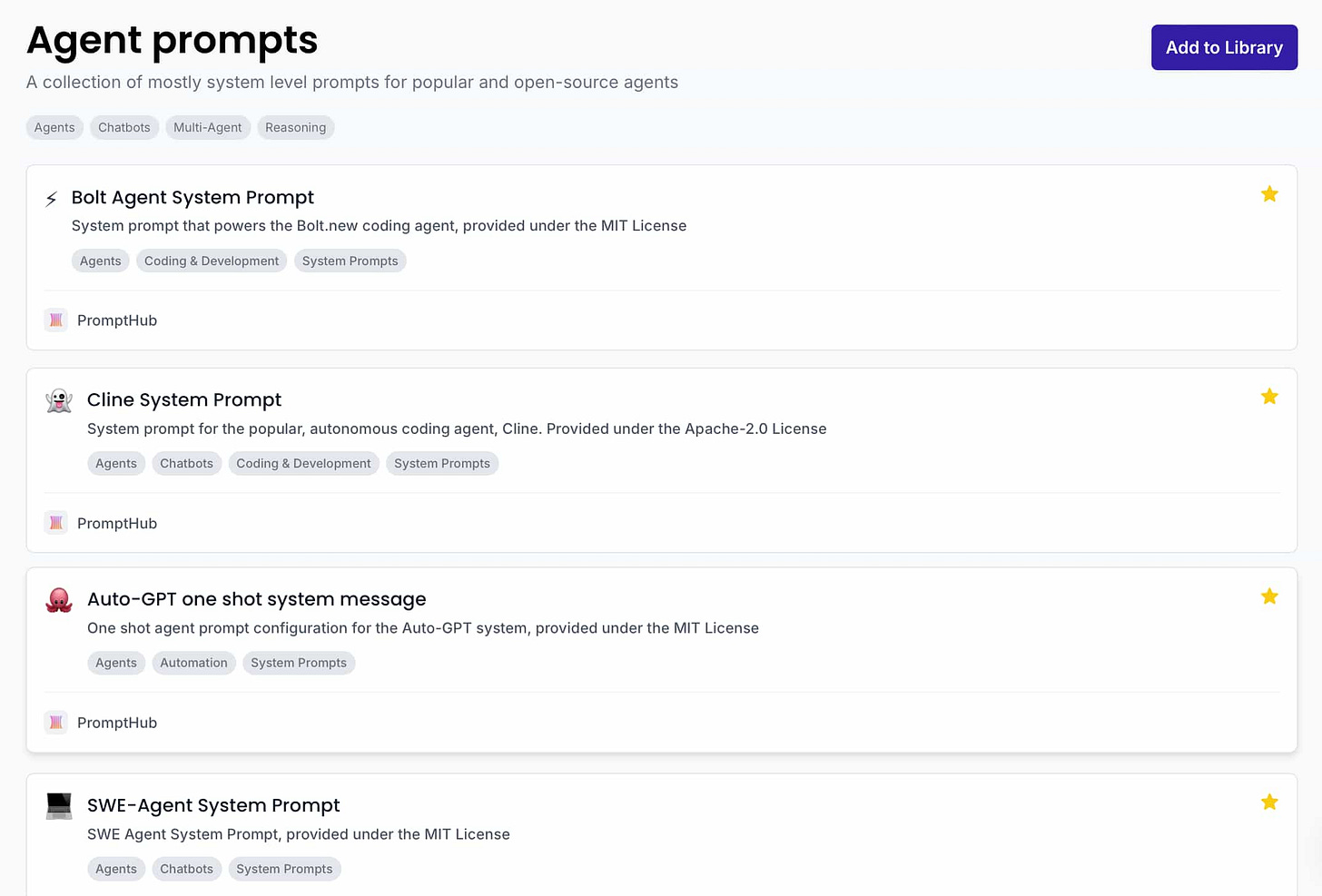

Prompt templates of the week

In case you missed it, last week we launched a collection of system prompts from popular open-source AI agents. If you’re building agents (or plan to), check it out!