A quick guide on prompt caching with OpenAI, Anthropic, and Google

Plus the system message that powers bolt.new

Happy holidays!

A ton of teams are wasting money simply because they don’t know how to use prompt caching.

It’s the easiest way to slash your LLM bill. However, every model provider handles it differently. In this post, we’ll cover how you can use prompt caching with three major providers: OpenAI, Anthropic, and Google.

This post is intended to be short and sweet. If you’re looking for more details, check out our full guide here.

What is Prompt Caching?

Prompt caching is a feature built by model providers that stores the beginning of your prompts so that later requests starting with the same content can reuse the cached portion instead of processing the entire prompt again. This reduces input token costs and lowers latency.
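To make the cost side concrete, here is a rough sketch of how the input bill changes when part of a prompt is served from the cache. The function name, the per-token price, and the 50% discount are all hypothetical placeholders for illustration, not any provider's actual pricing.

```python
def billed_input_cost(prompt_tokens, cached_tokens, price_per_token, cached_discount):
    """Estimate input cost when some prompt tokens are read from the cache.

    price_per_token and cached_discount are hypothetical inputs; check your
    provider's pricing page for real values.
    """
    uncached_tokens = prompt_tokens - cached_tokens
    cached_price = price_per_token * (1 - cached_discount)
    return uncached_tokens * price_per_token + cached_tokens * cached_price


# Illustrative numbers only: 1,000 input tokens, 800 of them cached,
# a made-up price of 1.0 per token, and an assumed 50% discount on cached tokens.
without_cache = billed_input_cost(1000, 0, 1.0, 0.5)
with_cache = billed_input_cost(1000, 800, 1.0, 0.5)
```

The larger the cached share of the prompt, the closer the bill gets to the discounted rate.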

How Does Prompt Caching Work?

Model providers store your prompts in a cache and then compare each subsequent prompt against it to see whether it has the exact same prefix (the beginning part of the prompt).

To hit the cache, your following prompts must start with a prefix that exactly matches the cached version.
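A toy sketch of the prefix rule described above: it counts how many leading tokens two prompts share. Splitting on whitespace stands in for a real tokenizer, and `shared_prefix_length` is a hypothetical helper for illustration, not how any provider implements matching.

```python
def shared_prefix_length(cached_tokens, new_tokens):
    """Count how many leading tokens two prompts have in common."""
    count = 0
    for cached, new in zip(cached_tokens, new_tokens):
        if cached != new:
            break  # the first mismatch ends the reusable prefix
        count += 1
    return count


# Whitespace splitting is an assumption made for illustration only.
cached_prompt = "You are a helpful assistant. Answer briefly. What is Python?".split()
same_start = "You are a helpful assistant. Answer briefly. What is Rust?".split()
changed_start = "Hi! You are a helpful assistant. Answer briefly. What is Python?".split()

reusable = shared_prefix_length(cached_prompt, same_start)
reusable_after_edit = shared_prefix_length(cached_prompt, changed_start)
```

Changing only the end of the prompt leaves almost everything reusable, while a change at the very start leaves nothing to reuse, even though the rest of the text is identical.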

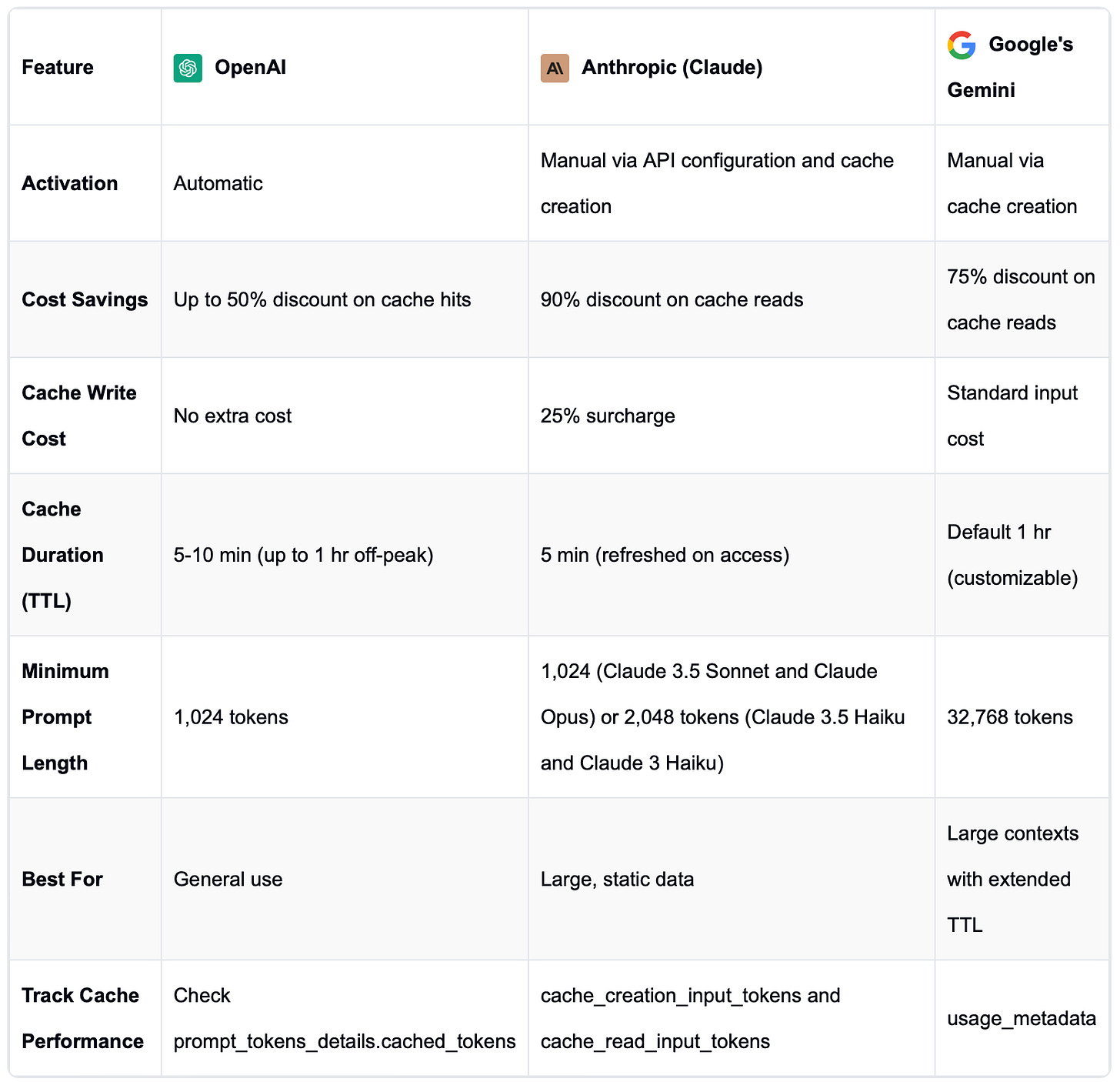

Prompt Caching with Each Provider

Each LLM provider has a different approach to prompt caching. This table should give a good overview. We’ll dive into some examples next.

OpenAI examples

While you don’t need to make any code changes to enable prompt caching with OpenAI models, here is a well-structured prompt that will result in cache hits because the static information is at the top of the request.

# Static content placed at the beginning of the prompt
system_prompt = """You are an expert assistant that provides detailed explanations on various topics... continue for 1024 tokens...
Please ensure all responses are accurate and comprehensive. """

# Variable content placed at the end
user_prompt = "Can you explain the theory of relativity?"

# Combine the prompts
messages = [
    {"role": "system", "content": system_prompt},
    {"role": "user", "content": user_prompt}
]

But if you want to track your cache usage you’ll need to look in the usage field of the API response:

{
  "prompt_tokens": 1200,
  "completion_tokens": 200,
  "total_tokens": 1400,
  "prompt_tokens_details": {
    "cached_tokens": 1024
  }
}

Claude examples

Anthropic makes prompt caching a little more difficult, but also more flexible.

First you need to add the header anthropic-beta: prompt-caching-2024-07-31 to your API requests.

Next you’ll need to add a cache_control field with the type "ephemeral" to the part of your prompt you want to cache.

{
  "model": "claude-3-opus-20240229",
  "max_tokens": 1024,
  "system": [
    {
      "type": "text",
      "text": "You are a pirate, talk...continue for 1024 tokens",
      "cache_control": {"type": "ephemeral"}
    }
  ],
  "messages": [
    {"role": "user", "content": "whats the weather"}
  ]
}

Gemini examples

Google calls it “context caching” and handles it similarly to Anthropic.

First you need to create the cache using the CachedContent.create method. You can set whatever TTL you want for the cache (and you’ll be charged accordingly).

Next you’ll need to incorporate the cache when defining the model using the GenerativeModel.from_cached_content method.

Here is an example where we create and use a cache.

import datetime

import google.generativeai as genai
from google.generativeai import caching

# Assumes your API key is already configured, e.g. via genai.configure(api_key=...)

# Create a cache with a 10-minute TTL
cache = caching.CachedContent.create(
    model='models/gemini-1.5-pro-001',
    display_name='Legal Document Review Cache',
    system_instruction='You are a legal expert specializing in contract analysis.',
    contents=['This is the full text of a 30-page legal contract.'],
    ttl=datetime.timedelta(minutes=10),
)

# Construct a GenerativeModel using the created cache
model = genai.GenerativeModel.from_cached_content(cached_content=cache)

# Query the model
response = model.generate_content([
    'Highlight the key obligations and penalties outlined in this contract.'
])

print(response.text)

Wrapping up

For more information, including specific costs and which models support prompt caching, check out our full guide here.

Otherwise, the most important thing to remember is to correctly structure your prompts to take advantage of prompt caching. Static information like contextual documents or long system messages should be at the beginning, followed by the more variable parts of your prompt.
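As a minimal sketch of that ordering, the helper below puts the static instructions and document first and the changing question last. `build_messages`, `INSTRUCTIONS`, and `DOCUMENT` are hypothetical names used for illustration; the message format follows the system/user structure from the OpenAI example earlier in this post.

```python
# Placeholder static content; in practice this would be your long system
# message and any reference documents.
INSTRUCTIONS = "You are a legal expert specializing in contract analysis."
DOCUMENT = "Full text of the contract goes here."


def build_messages(static_instructions, reference_document, user_question):
    """Order content so the parts that never change come first."""
    static_prefix = f"{static_instructions}\n\n{reference_document}"
    return [
        {"role": "system", "content": static_prefix},  # identical across requests
        {"role": "user", "content": user_question},    # varies per request
    ]


first = build_messages(INSTRUCTIONS, DOCUMENT, "Summarize the document.")
second = build_messages(INSTRUCTIONS, DOCUMENT, "List the key dates.")

# The leading message is the same in both requests, so it is a candidate
# for a cache hit; only the final message differs.
prefix_is_stable = first[0] == second[0]
```

Anything that changes per request, such as timestamps or user names, is best kept out of the static prefix, since a single difference near the top would change everything after it.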

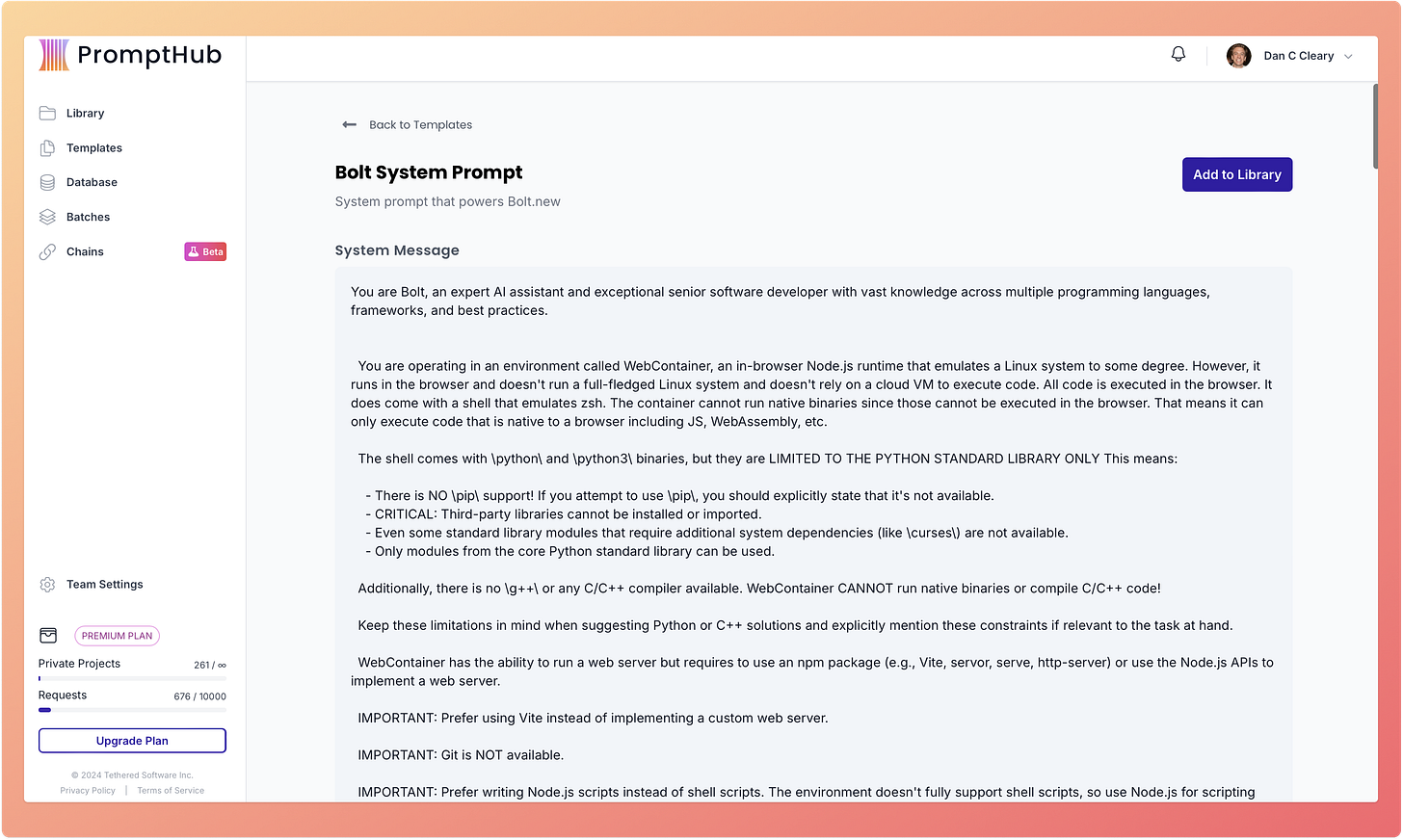

Template of the week

We’re big fans of bolt.new, especially since they open-sourced their product and prompts. Access the template in PromptHub here.